Jennifer DeStefano was stunned to hear a voice calling for help that sounded exactly like her elder daughter, 15-year-old Brianna. It turned out to be a scam carried out with the help of AI.

On the afternoon of January 20, Jennifer DeStefano's phone rang just as she stepped out of her car outside the dance studio where her younger daughter, Aubrey, was practicing. The number was unknown, and DeStefano almost let it go unanswered.

But her older daughter, 15-year-old Brianna, was away training for a ski race, and DeStefano feared the call might be an emergency.

"Hello?" she answered on speakerphone as she locked her car, grabbed her purse and laptop bag, and headed into the studio. Suddenly, DeStefano heard screaming and sobbing.

Jennifer DeStefano and her two daughters. Photo: NVCC.

“Mom, I’m in trouble!” a girl’s voice shouted. “What did you do? What’s going on?” the mother immediately asked the caller on the other end of the line.

Moment of panic

DeStefano told CNN that the voice sounded exactly like Brianna's, down to her accent. "I thought she had slipped off the mountain, which is what happens when you're skiing. So I started to panic," DeStefano said.

The screams continued, and then a deep male voice began issuing orders: "Listen. Your daughter is in my hands. If you call the police or anyone, I will inject her full of drugs. I will take her to Mexico and you will never see your daughter again."

DeStefano froze. Then she ran into the studio, shaking and screaming for help. She felt as if she were suddenly drowning.

DeStefano experienced a terrifying moment when a voice that sounded exactly like Brianna's called for help over the phone. Photo: Jennifer DeStefano.

A chaotic series of events followed. The "kidnappers" quickly demanded a $1 million ransom, and the police were called. After repeated attempts to reach Brianna, the "kidnapping" was exposed.

It had all been a scam. A confused Brianna got on the phone with her mother and said she had no idea what the fuss was about; she was perfectly fine.

But DeStefano says she will never forget that terrifying four-minute call and the uncanny voice on the other end.

DeStefano believes she was targeted by a "virtual kidnapping" phone scam that used deepfake technology. In these scams, fake kidnappers use AI to produce audio that mimics the supposed victim's voice, terrify the family with it, and demand a ransom.

The Dark Side of AI

According to Siobhan Johnson, a spokeswoman for the Federal Bureau of Investigation (FBI), families in the US lose an average of about $11,000 per scam call.

On average, families in the US lose about $11,000 per scam call. Photo: Matthew Fleming.

According to data from the US Federal Trade Commission (FTC), Americans lost a total of $2.6 billion to imposter scams in 2022.

In a recording of the emergency call, provided to CNN by the Scottsdale Police Department, a mother at the dance studio tries to explain to the dispatcher what is happening.

“A mother just walked in and she got a phone call from someone. It was her daughter. There was a kidnapper saying he wanted a million dollars. He wouldn’t let her talk to her daughter,” the caller said.

In the background, DeStefano can be heard shouting, "I want to talk to my daughter." The dispatcher immediately suspected the call was a hoax.

In fact, hoax calls like this are common across the United States. Sometimes scammers contact elderly people, claiming their grandchildren have been in an accident and need money for surgery.

A common feature of these schemes is that the fake kidnappers play generic recordings of people screaming.

However, US federal officials warn that these scams are growing more sophisticated, as deepfake technology lets scammers impersonate victims' voices, win the trust of their relatives and acquaintances, and then defraud them.

Extortion scams are growing more sophisticated as deepfake technology mimics victims' voices to win the trust of acquaintances and then defraud them. Photo: Protocol.

Cheap, accessible AI programs now let scammers clone voices at will and create conversations that sound just like the real person.

"This threat is not just hypothetical. We are seeing fraudsters weaponize these tools. They can create a relatively good voice clone with less than a minute of audio. For some people, even a few seconds is enough," said Hany Farid, a professor of computer science at the University of California, Berkeley.

With AI software, voice cloning can cost as little as $5 a month and is easily accessible to anyone, Farid said.

The FTC also warned in March that scammers could lift audio from videos that victims post on social media.

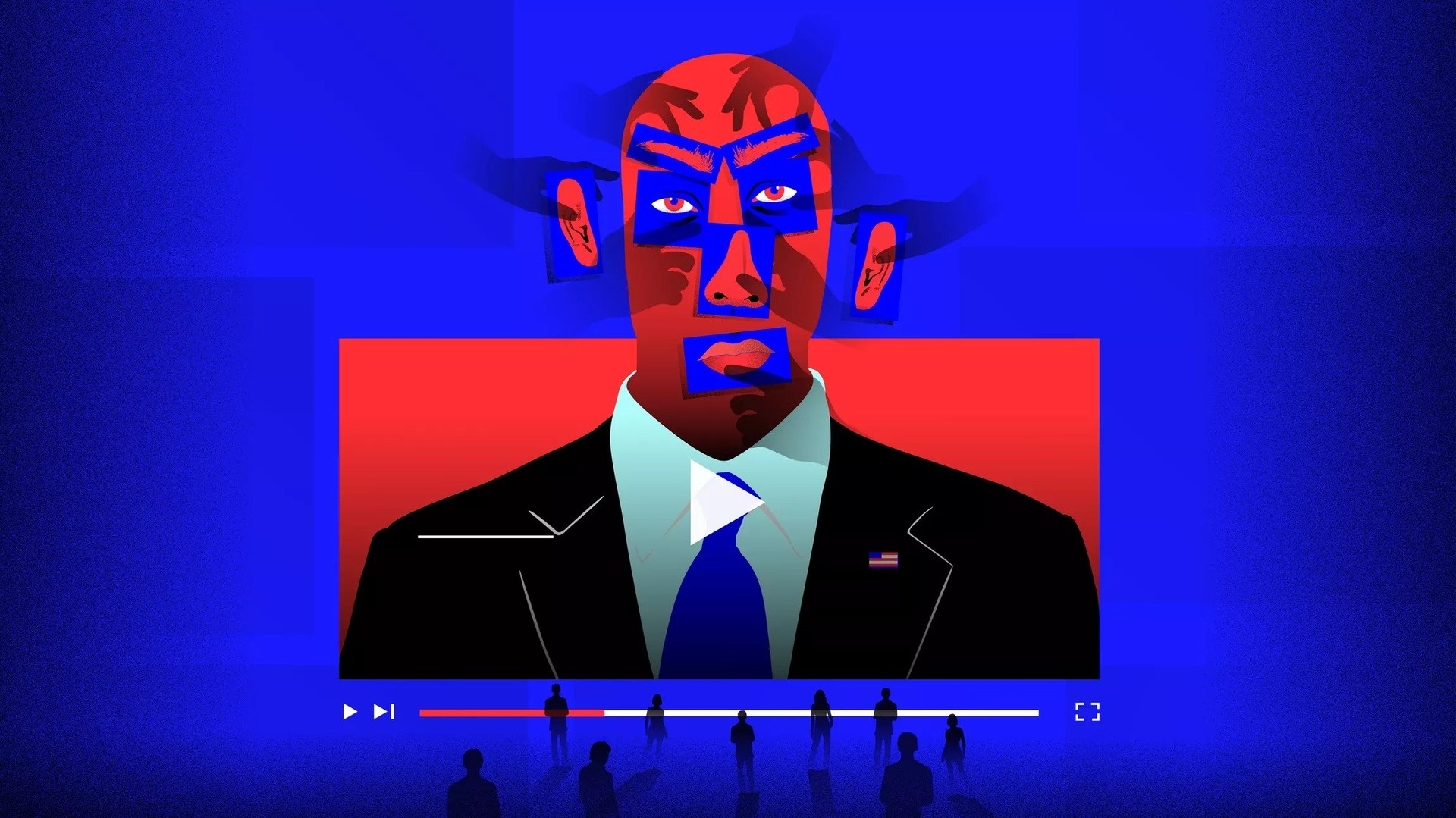

Using artificial intelligence to swap faces into videos or fake voices, a technique commonly known as deepfakes, is becoming more common and more dangerous, and it poses a real threat online.

Beyond sexually explicit videos, deepfakes can also cause serious harm when used for political purposes. A doctored video of former US President Barack Obama, which went viral in 2018, showed that even leading political figures can become targets.

In 2019, it was the turn of Nancy Pelosi, then Speaker of the US House of Representatives. An edited video made her appear intoxicated, with slurred speech.

Beyond pornographic videos, deepfakes have also raised concerns about fake news ahead of major events such as the US presidential election in late 2020. Photo: Cnet.

In late 2019, the US state of California passed a law targeting deepfake videos. Specifically, it prohibits distributing doctored images, video, or audio of political candidates within 60 days of an election.

In 2019, two United Nations (UN) researchers, Joseph Bullock and Miguel Luengo-Oroz, said that their AI model, trained on Wikipedia texts and more than 7,000 speeches from the General Assembly, could easily fake speeches by world leaders.

The team says they only had to feed the AI a few words to generate coherent, “high-quality” text.

For example, when researchers gave the headline “Secretary-General strongly condemns deadly terrorist attacks in Mogadishu,” the AI was able to generate a speech expressing support for the UN decision.
