Artificial intelligence is helping people write emails, find movies to watch, analyze data, and even diagnose diseases...

But as AI grows smarter, the unease it brings grows too. Part of that comes from not fully understanding the technology we use. The rest stems from our own psychological instincts.

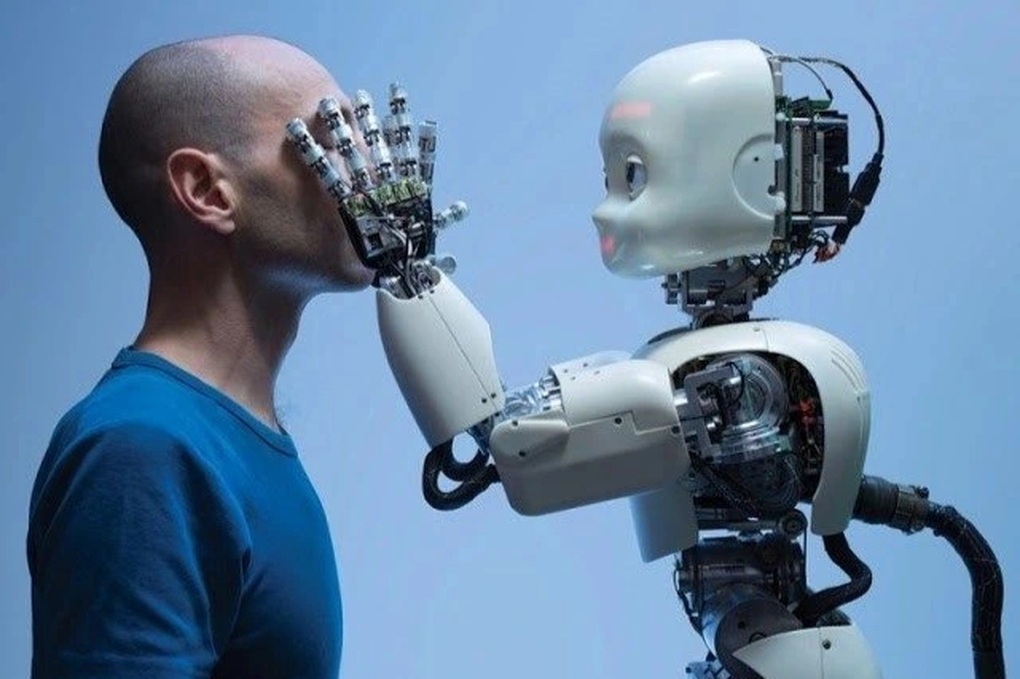

AI is developing faster than humans can psychologically adapt (Illustrative photo).

When AI becomes a “black box” and users lose control

People tend to trust what they understand and control. When you push a button, the elevator moves. When you flip a switch, the light comes on. Clear responses create a sense of security.

In contrast, many AI systems operate like a closed box. You feed in data, but how the system arrives at its results stays hidden, leaving users unable to understand or question it.

That opacity is unsettling. Users need not only a tool that works but also an understanding of why it works.

When AI offers no explanation, people begin to doubt it. This feeds what behavioral researchers call “algorithm aversion”: the tendency to prefer human judgment, even when it is wrong, over the judgment of a machine.

Many people are also wary of AI that is too accurate. A content recommendation engine that reads them too well can feel intrusive, creating a sense of being watched or manipulated even though the system itself has no emotions or intentions.

This reaction stems from an instinctive behavior: anthropomorphism. Even though we know AI is not human, we still respond to it as if we were talking to a person. When AI comes across as too polite or too cold, users find it strange and grow distrustful.

Humans are forgiving of other humans, but not of machines.

An interesting paradox: when humans make mistakes, we can be empathetic and accepting. But when the error comes from AI, especially AI marketed as objective and data-driven, users often feel betrayed.

This is related to the phenomenon of expectation violation. We expect machines to be logical, accurate, and infallible, so when that expectation is broken, the psychological reaction tends to be more intense. Even a small algorithmic error can seem serious if users feel they have no control over it or receive no explanation for it.

We instinctively need to understand the cause of a mistake. With a human, we can ask why. With AI, the answer is often missing or too vague.

When teachers, writers, lawyers, or designers see AI doing part of their work, they fear not only losing their jobs but also losing the value of their skills and their sense of identity.

This natural reaction is called identity threat. It can lead to denial, resistance, or psychological defensiveness. In these cases, suspicion is no longer just an emotional reaction but a self-protection mechanism.

Trust doesn't come from logic alone.

Humans build trust through emotion, gestures, eye contact, and empathy. AI can be articulate, even humorous, but it cannot form a real connection.

Experts describe the “uncanny valley” as the uncomfortable feeling of confronting something that is almost human but lacks some quality, which makes it feel unreal.

When machines become too humanlike, the sense of unease becomes even more pronounced (Photo: Getty).

With AI, it's the absence of emotion that leaves many people feeling disoriented, unsure whether to trust or doubt.

In a world filled with fake news, fake videos, and algorithmic decisions, a sense of emotional disconnection makes people wary of technology, not because AI does anything wrong, but because we don’t know how to feel about it.

Moreover, suspicion is sometimes more than just a feeling. Algorithms have already produced biased outcomes in hiring, criminal justice, and credit approval. For people who have been harmed by opaque data systems, wariness makes sense.

Psychologists call this learned distrust. When a system fails repeatedly, it is understandable that people stop trusting it. People will not trust simply because they are told to. Trust must be earned, not imposed.

If AI is to be widely adopted, developers need to build systems that can be understood, questioned, and held accountable. Users need to be involved in decision-making rather than watching from the sidelines. Trust can only be truly sustained when people feel respected and empowered.

Source: https://dantri.com.vn/cong-nghe/vi-sao-tri-tue-nhan-tao-cham-vao-noi-so-sau-nhat-cua-con-nguoi-20251110120843170.htm
