According to BGR, 2024 will be a pivotal year in showing whether artificial intelligence is truly the future of computing or just a passing fad. While the genuine applications of AI are becoming increasingly diverse, the dark side of this technology will also be revealed through countless scams in the coming months.

Scams.info's anti-fraud experts have just released a list of three AI-powered scams that people need to be wary of in 2024. The general rule is to be cautious of anything that looks too good to be true, but the schemes below call for particular vigilance.

AI-based investment scams

Major players like Google, Microsoft, and OpenAI have poured millions of dollars into AI and will continue to invest heavily this year. Scammers will take advantage of this fact to entice you into investing in dubious opportunities. If someone on social media tries to convince you that AI will multiply your investment returns, think twice before opening your wallet.

"Great deals" that promise high returns at low risk do not really exist.

"Be wary of investments promising high returns with low risk, and be sure to do thorough research before investing your money in them," warns expert Nicholas Crouch from Scams.info. New investors should also be cautious of offers that require them to refer new members; these often operate on a multi-level marketing (MLM) model, benefiting only those at the top, while other participants rarely benefit.

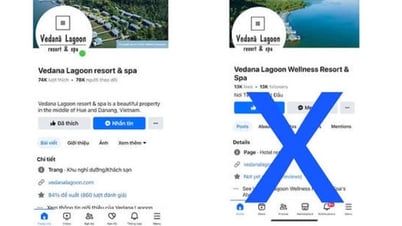

Impersonating a relative

The scam of impersonating friends or relatives to borrow money is nothing new, but it has had limited success because voice imitation was not very convincing. With AI, this type of scam becomes far more insidious. All it takes is a YouTube video or Facebook post containing a relative's voice, and the scammer can use AI to replicate it almost perfectly. Could you tell the difference during a phone call?

AI can easily mimic the voices of your loved ones.

Screenshot from Washington Post

"It's crucial that people protect their social media accounts to prevent scammers from collecting voice recordings and family information," Crouch emphasized.

Using cloned voices to bypass security systems

Some banks use voice recognition to verify users when conducting transactions over the phone. Because AI can now clone voices so convincingly, this method has become less secure than before. If you post videos or clips containing your voice anywhere on the internet, malicious actors can use that content to copy your voice. As Crouch notes, banks still have other data to verify customer identities, but this tactic brings criminals one step closer to taking over your bank account.

Voice-based security systems are no longer safe from the power of AI.

AI has the potential to fundamentally change our lives and how we interact with devices. It's also the latest tool that hackers and scammers will use to attack users. Therefore, it's crucial to remain vigilant and conduct thorough research before engaging in any activity involving AI.
