DeepSeek, the hottest Chinese startup of recent days, is facing doubts over its claim to have built AI on par with OpenAI's for just $5 million.

DeepSeek drew extensive coverage in both traditional and social media at the start of the Year of the Snake, sending significant tremors through global stock markets.

However, a recent report by financial consulting firm Bernstein warns that, despite impressive achievements, the claim of creating an AI system comparable to OpenAI's for only $5 million is inaccurate.

According to Bernstein, DeepSeek's statement is misleading and does not reflect the bigger picture.

“We believe DeepSeek didn’t ‘create OpenAI with $5 million’; the models are fantastic but we don’t think they’re miracles; and the panic over the weekend seems to have been exaggerated,” the report states.

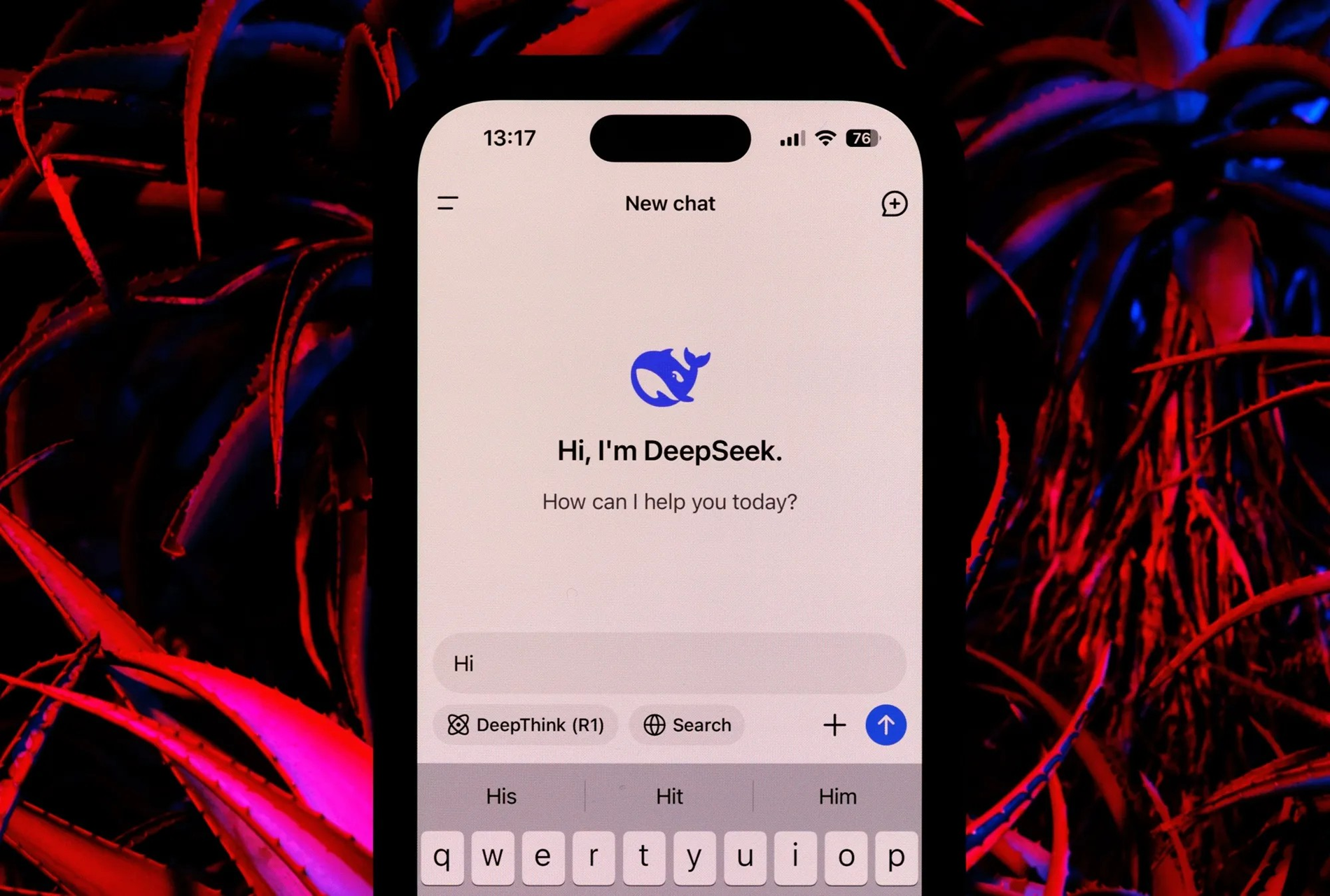

DeepSeek develops two main AI models: DeepSeek-V3 and DeepSeek-R1. The large-scale V3 language model uses a Mixture-of-Experts (MoE) architecture, which combines many smaller "expert" sub-networks and activates only a few of them for each input. This lets it achieve high performance while using fewer computing resources than traditional dense models.
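To make the MoE idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek's actual router: the dot-product gate, the softmax over the selected experts, and the helper names `moe_forward`, `experts` and `gate` are all illustrative assumptions. The point it shows is that only `k` experts run for a given input, so compute scales with the active experts rather than the total.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A toy "expert": any function mapping a vector to a vector of the same size.
Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: List[float],
    gate_weights: List[List[float]],
    experts: List[Expert],
    k: int = 2,
) -> Tuple[List[float], List[int]]:
    """Route x to the top-k experts; return (mixed output, chosen expert indices)."""
    # Router (assumed form): one score per expert, a dot product with a gate vector.
    scores = [sum(w * xi for w, xi in zip(g, x)) for g in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Normalise the gate weights over the selected experts only.
    probs = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only k experts spend compute on this input
        out = [o + p * yi for o, yi in zip(out, y)]
    return out, top


# Four hypothetical experts that simply scale their input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]

output, chosen = moe_forward([1.0, 2.0], gate, experts, k=2)
print(chosen)  # [2, 1]
```

Real MoE layers use learned neural-network experts and add mechanisms such as load balancing across experts, which this toy version omits.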

The V3 model has 671 billion parameters in total, of which only 37 billion are active for any given token. It also incorporates innovations such as Multi-Head Latent Attention (MHLA) to reduce memory usage, and uses FP8 (8-bit floating-point) arithmetic for greater efficiency.
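The back-of-the-envelope arithmetic below illustrates why these two choices matter. It computes the share of parameters active per token and the raw storage needed for all 671 billion weights at 2 bytes per parameter (BF16) versus 1 byte (FP8). This is an illustrative simplification: it ignores activations, optimizer state and FP8 scaling factors, and assumes every weight is stored in a single format, whereas in practice FP8 is typically used for only part of a mixed-precision pipeline. The names `weight_memory_gb` and `BYTES_PER_PARAM` are hypothetical.

```python
TOTAL_PARAMS = 671e9   # total parameters reported for DeepSeek-V3
ACTIVE_PARAMS = 37e9   # parameters active for any given token

# Share of the model that actually does work for each token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Bytes per parameter for two common number formats.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Raw weight storage in gigabytes (10**9 bytes); ignores all other memory."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


print(f"active fraction: {active_fraction:.1%}")  # active fraction: 5.5%
print(weight_memory_gb(TOTAL_PARAMS, "bf16"))     # 1342.0
print(weight_memory_gb(TOTAL_PARAMS, "fp8"))      # 671.0
```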

Training the V3 model used a cluster of 2,048 Nvidia H800 GPUs for about two months, or roughly 2.8 million GPU hours. While some estimates put the training cost at approximately $5 million, Bernstein's report emphasizes that this figure covers only computing resources and does not account for the significant costs of research, experimentation, and other development work.
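As a rough consistency check, the sketch below converts the cluster size and training time into GPU hours and then into a compute-only cost. Two assumptions are labeled in the code: "two months" is approximated as 60 days, and the rental price is taken as $2 per H800 GPU hour, the figure DeepSeek's V3 technical report assumed. As Bernstein stresses, a number like this prices GPU time only, not research, staff or failed experiments.

```python
NUM_GPUS = 2_048
DAYS = 60            # assumption: "two months" approximated as 60 days
HOURS_PER_DAY = 24

gpu_hours = NUM_GPUS * DAYS * HOURS_PER_DAY

# Assumed rental price in US dollars per GPU hour (compute only).
PRICE_PER_GPU_HOUR = 2.0

compute_cost = gpu_hours * PRICE_PER_GPU_HOUR
print(f"{gpu_hours:,} GPU hours -> ${compute_cost / 1e6:.1f}M")
# 2,949,120 GPU hours -> $5.9M
```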

The DeepSeek-R1 model builds on the V3 foundation, using Reinforcement Learning (RL) and other techniques to strengthen its reasoning capability.

R1 can compete with OpenAI's models on reasoning tasks. However, Bernstein points out that developing R1 required significant resources, which are not detailed in DeepSeek's report.

Bernstein nonetheless praised the models as impressive. For example, V3 performs as well as or better than other major language models on language, programming, and mathematics tasks while requiring fewer resources.

Pre-training V3 required only about 2.7 million GPU hours, or roughly 9% of the computing resources used by some other top-tier models.
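Working the 9% ratio backwards gives a sense of scale for the comparison models. This is purely implied arithmetic from the two figures quoted above, not a number Bernstein reports, and GPU hours on different hardware generations are not strictly comparable. The variable names are hypothetical.

```python
DEEPSEEK_PRETRAIN_GPU_HOURS = 2.7e6
SHARE_OF_PEERS = 0.09  # "9% of the computing resources of some other top-tier models"

# Compute the comparison models would have used if the 9% ratio holds exactly.
implied_peer_gpu_hours = DEEPSEEK_PRETRAIN_GPU_HOURS / SHARE_OF_PEERS
print(f"{implied_peer_gpu_hours / 1e6:.0f} million GPU hours")  # 30 million GPU hours
```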

Bernstein concluded that, while DeepSeek's advances are noteworthy, one must be wary of exaggerated claims. The idea of creating a competitor to OpenAI with only $5 million seems misguided.

(According to Times of India)

Source: https://vietnamnet.vn/deepseek-khong-the-lam-ai-tuong-duong-openai-voi-5-trieu-usd-2367340.html
