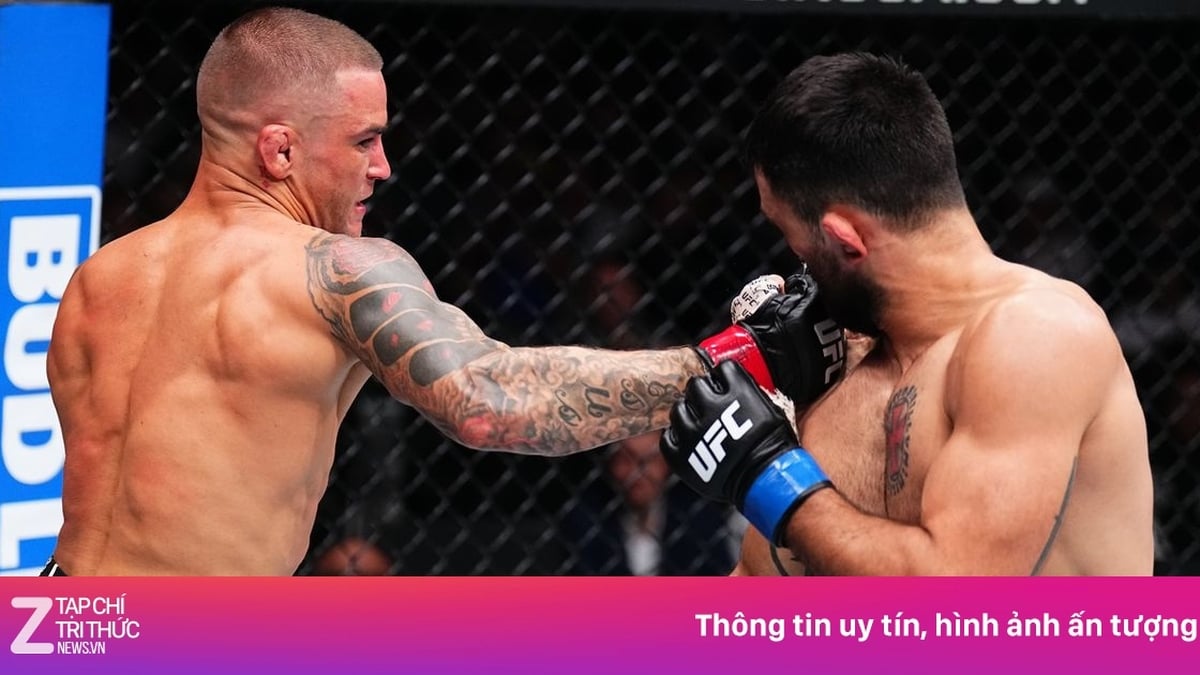

Image depicting the trend of face-swapping videos

From viral "morphing" videos on social media to personalized commercials, face-swapping technology, commonly known as "deepfake" or "face swap", has become a digital cultural phenomenon.

The face-swapping trend

In just a few seconds, users can "transform" into a Hollywood superstar, a cartoon character, or even a famous person from the past. But behind the convenience and fun of this trend is a complex process of digitizing facial data, raising many questions about privacy and personal information security.

The appeal of the face-swapping trend comes from people's appetite for entertainment and their desire to keep up with what is popular on social networks.

Many people, especially young people, are drawn in by curiosity and by the chance to create unique content. However, without an understanding of AI technology and data security, they can easily overlook the risks.

Face-swapping technology uses artificial intelligence (AI), specifically deep learning algorithms, to analyze and reconstruct facial images.

Applications such as FaceApp and Reface, as well as professional video editing platforms such as Adobe After Effects, integrate AI that can recognize facial features such as the eyes, nose, and mouth, along with expressions and lighting.

The technology then maps a person's face onto the character's face in the video, producing a result that looks strikingly realistic.

To do this, facial data is collected through images or videos provided by users. Applications typically require users to upload one or more selfies, then use AI models to analyze and store information about facial structure.

This data includes facial landmarks, skin color, facial angles, and even expressions, which are encoded into digital vectors for computer processing.
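To make the idea of "encoding a face into a vector" concrete, here is a minimal sketch in Python. It is an illustration only, not how any of the apps named above actually work: real systems typically use embeddings learned by neural networks, whereas this example simply flattens a handful of made-up landmark coordinates. The function names and the sample points are hypothetical.

```python
import math


def landmarks_to_vector(landmarks):
    """Turn a list of (x, y) landmark points into a unit-length feature vector.

    Hypothetical helper for illustration: the points are centered on their
    centroid and scaled to length 1, so the vector does not depend on where
    the face sits in the photo or how large it appears.
    """
    if not landmarks:
        raise ValueError("at least one landmark is required")
    cx = sum(x for x, _ in landmarks) / len(landmarks)
    cy = sum(y for _, y in landmarks) / len(landmarks)
    flat = []
    for x, y in landmarks:
        flat.extend((x - cx, y - cy))
    norm = math.sqrt(sum(c * c for c in flat))
    if norm == 0:
        raise ValueError("landmarks must not all coincide")
    return [c / norm for c in flat]


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up landmarks: left eye, right eye, nose tip, left and right mouth corners.
face_a = [(30, 40), (70, 40), (50, 60), (35, 80), (65, 80)]
# The same face photographed at twice the size and shifted in the frame.
face_a_again = [(2 * x + 10, 2 * y + 10) for x, y in face_a]
# A different (also made-up) face.
face_b = [(25, 45), (75, 42), (50, 70), (40, 85), (60, 88)]

same = cosine_similarity(landmarks_to_vector(face_a), landmarks_to_vector(face_a_again))
different = cosine_similarity(landmarks_to_vector(face_a), landmarks_to_vector(face_b))
print(f"same face: {same:.4f}, different face: {different:.4f}")
```

The point of the sketch is that once a face has been reduced to numbers like these, it can be stored, copied, and compared without the original photo, which is why the stored vectors matter as much as the images themselves.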

The risks of faces becoming biometric data

A face is a form of biometric data: it is highly personal and, unlike a password, cannot be changed.

If this data is leaked, users cannot “change their faces” to protect themselves. Deepfake technology, with the ability to create videos with fake voices and images, is becoming a major threat.

Many applications, especially those from foreign platforms, have no clear legal representation in Vietnam but are still widely used. In addition, privacy policies are often written in complicated, confusing language, so users tend to accept them without reading them closely.

In Vietnam, there have been cases of face-swapping videos being used to defame people or commit financial fraud, causing serious damage to personal reputations and social trust.

Furthermore, facial data is at risk of being used to train recognition systems, for purposes ranging from commercial to surveillance, without users' knowledge or consent.

What to do to protect facial data?

To take part in the face-swapping trend while still protecting their personal data, users need to build the right habits and knowledge.

First, always double-check the app's origin before uploading any images. Apps with unknown origins or lacking developer information should be avoided as they may pose a data theft risk.

Next, take the time to read the app's privacy policy carefully, paying particular attention to how facial data is stored, used, and shared. If the policy is unclear or asks for unnecessary access, like your entire photo library or location, consider declining.

Choosing reputable platforms with transparent privacy policies, such as those from large companies like Adobe or Reface, is an effective way to minimize risk.

Additionally, users should limit uploads of high-resolution photos or photos containing sensitive information, share face-swapping videos only on private platforms, and avoid publicly posting content that could be abused.

To increase security, regularly check app permissions on your device and use antivirus software to detect suspicious activity.

Finally, learning about deepfake technology and how to identify fake content will help users stay alert and avoid falling victim to scams or defamation.

The risks of faces becoming "biometric data"

Loss of privacy: Faces are a form of biometric data that cannot be replaced like passwords. Once facial data is leaked, users cannot "change their faces" the way they can change a PIN.

The risk of deepfake impersonation: Videos with fake voices and faces are becoming increasingly common, causing confusion, financial fraud, or personal defamation.

Used to train AI without users' knowledge: User photos can be used to train facial recognition systems, increasing the accuracy of commercial AI, without any compensation or notice.

What to do to protect facial data?

Read the privacy policy carefully: Before using an app, check how the provider handles your data.

Limit the provision of sensitive data: Upload only necessary images and avoid using unknown apps.

Use reputable platforms: Prioritize applications from large companies with transparent privacy policies.

Stay up to date on cybersecurity: Understand the risks of deepfake technology and how to identify fake content.

Source: https://tuoitre.vn/trao-luu-ghep-mat-vao-video-du-lieu-khuon-mat-dang-bi-so-hoa-ra-sao-20250529105826111.htm
