Suppose you were told that an AI tool could accurately predict the future of some stocks you own. How would you feel about using it? Now imagine you were applying for a job at a company where the HR department used an AI system to screen resumes. Would you feel comfortable with that?

A new study finds that people are neither completely enthusiastic nor completely dismissive of AI. Rather than falling into two opposing camps—techno-optimists and techno-detractors—most people judge AI based on its practical effectiveness in specific situations.

“We propose that AI will be evaluated positively when it is perceived as superior to humans and when personalization is not an important factor in the decision context,” said Jackson Lu, a professor in the Department of Work and Organization Studies at the MIT Sloan School of Management, a co-author of the new study. “In contrast, people will tend to avoid AI if either of these two conditions is not met. Only when both conditions are met will AI be truly evaluated positively.”

New theoretical framework offers insights

Human reactions to AI have long been a hotly debated topic, with seemingly conflicting results. A well-known 2015 study on “algorithm aversion” found that people are less tolerant of AI errors than of human errors. Meanwhile, a prominent 2019 study found “algorithm appreciation,” meaning that people prefer AI advice to human advice.

To reconcile these contradictory findings, Lu’s team conducted a meta-analysis of 163 previous studies comparing people’s preferences for AI versus humans. They examined whether the data supported their “Competence-Personalization” theoretical model: the idea that, in a given context, both the perceived competence of AI and the perceived need for personalization shape whether people prefer AI or humans.

Across those 163 studies, the team analyzed more than 82,000 responses in 93 separate “decision contexts,” such as whether participants felt comfortable with AI being used to diagnose cancer. The results supported the model as an explanation of when people prefer AI and when they prefer humans.

“The meta-analysis supports our theoretical framework. Both dimensions are important: People judge whether AI is better than humans at a particular task, and whether the task requires personalization. People only like AI when they think it is better than humans and the task does not require personalization,” said Prof. Lu.

“The bottom line is: high capability alone is not enough to make people appreciate AI. Personalization is also important,” he added.

For example, people tend to favor AI for fraud detection or big data processing—areas where AI outperforms humans in speed and scale, and where personalization is not needed. Conversely, they are reluctant to use AI in psychotherapy, job interviews, or medical diagnosis—areas where they believe humans understand their personal circumstances better.

“Humans have a fundamental desire to be seen as unique individuals. AI is often seen as impersonal and robotic. Even though AI is trained on a lot of data, people still feel that AI cannot understand their own circumstances. They want a human doctor, a human employer, someone who can see what sets them apart,” said Professor Lu.

Context matters too: from tangibility to unemployment concerns

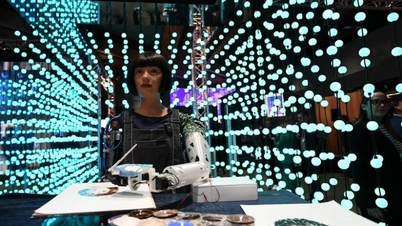

The study also found that many other factors influence AI acceptance. For example, people tend to value AI more if it is a tangible robot, rather than an invisible algorithm.

The economic context also makes a difference. In countries with low unemployment, AI is viewed more positively.

“This makes sense. If you’re worried about being replaced by AI, it’s hard to accept,” Lu said.

For now, Professor Lu continues to explore the complex and ever-changing human attitudes toward AI. While he doesn’t see this meta-analysis as the end of the story, he hopes the “Competence-Personalization” framework will be a useful tool for understanding how people evaluate AI in different contexts.

“We are not saying that competence and personalization are the only two factors, but according to our analysis, these two factors play a large part in shaping people’s attitudes toward AI versus humans across many contexts,” concluded Prof. Lu.

The research involved scientists from MIT and three Chinese universities (Sun Yat-sen University, Shenzhen University, and Fudan University), and received funding from the National Natural Science Foundation of China.

(According to MIT News)

Source: https://vietnamnet.vn/chung-ta-that-su-danh-gia-ai-nhu-the-nao-2417023.html
