In 2023, while millions of people were worried that artificial intelligence models like ChatGPT might take their jobs, some companies were willing to pay hundreds of thousands of dollars to recruit people who could get the most out of this new generation of AI chatbots.

According to Bloomberg, the arrival of ChatGPT created a new profession, the prompt engineer, with salaries of up to $335,000 a year.

"Talk to AI"

Unlike traditional programmers, prompt engineers program in prose: they write instructions in plain text and send them to an AI system, which then turns those descriptions into actual work.

These engineers often understand the flaws of AI models, which lets them amplify the models' capabilities and devise complex strategies for turning simple inputs into truly unique results.

Lance Junck once earned nearly $35,000 in revenue from an online course teaching people how to use ChatGPT. Photo: Gearrice.

“To use AI effectively, you must master the skill of prompt design. Without this skill, your career will sooner or later be ‘destroyed,’” said Lydia Logan, Vice President of Global Education and Human Resources Development at IBM Technology Group.

However, AI models have developed rapidly. They are now much better at understanding what users intend and can even ask follow-up questions when a request is unclear.

In addition, according to the WSJ, companies are training a wide range of employees across different departments to make the best use of prompts and AI models, so there is less need for any single person to hold this expertise.

In a recent survey commissioned by Microsoft, for example, 31,000 employees in 31 countries were asked which new roles their companies were considering adding in the next 12 to 18 months. According to Jared Spataro, Microsoft's marketing director for AI at Work, prompt engineer ranked second from the bottom of the list.

Meanwhile, roles such as AI trainers, data scientists, and AI security experts topped the list.

Spataro argues that large language models have now evolved enough to enable better interaction, dialogue, and context awareness.

For example, Microsoft's AI-based research tool will ask follow-up questions, let the user know when it doesn't understand something, and ask for feedback on the information provided. In other words, Spataro says, "you don't have to have perfect sentences."

"Blind" prompting is not wrong

According to Hannah Calhoon, VP of AI at the job-search platform Indeed, there are currently very few job postings for prompt engineers.

In January 2023, just months after ChatGPT launched, searches for the role on Indeed spiked to 144 per million searches. Since then, however, the figure has leveled off at around 20 to 30 per million.

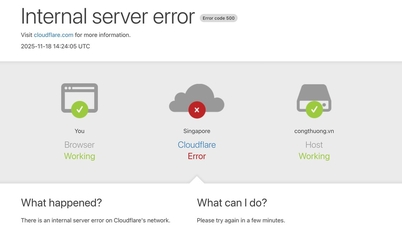

Prompt engineers write the questions and instructions given to AI tools such as ChatGPT. Photo: Riku AI.

Beyond the decline in demand, companies squeezed by tight budgets and growing economic uncertainty have also become much more cautious about hiring in general in recent years.

Companies such as Nationwide Insurance, Carhartt Workwear, and New York Life Insurance all say they have never hired prompt engineers. Instead, they see better prompting as a skill that all current employees can be trained in.

“Whether you’re in finance, HR or legal, we see this as a capability within a job title, not a separate job title,” says Nationwide’s chief technology officer, Jim Fowler.

Professor Andrew Ng, co-founder of Google Brain and a lecturer at Stanford University, has said that users sometimes do not need to be very detailed when writing requests (prompts) for AI.

In a post on X, Ng calls this approach “lazy prompting”: feeding information to an AI with little context or no specific instructions. “We should only add details to the prompt when absolutely necessary,” said the co-founder of Coursera and founder of DeepLearning.AI.

Ng's typical example is debugging: programmers often copy and paste entire error messages, sometimes several pages long, into an AI model without explicitly stating what they want.

“Most large language models (LLMs) are smart enough to understand what you need them to analyze and suggest fixes, even if you don't explicitly say so,” he writes.

LLMs are moving beyond responding to simple commands and starting to understand user intent and reason their way to appropriate solutions. Photo: Bloomberg.

According to Ng, this is a step forward: it shows that LLMs are gradually moving beyond responding to simple commands and beginning to understand users' intentions and reason toward appropriate solutions, a direction that companies developing AI models are actively pursuing.

However, “lazy prompting” doesn’t always work. Ng notes that this technique should only be used when users can test quickly, such as through a web interface or an AI app, and the model is capable of inferring intent from little information.

“If AI needs a lot of context to respond in detail, or can't recognize potential errors, then a simple prompt won't help,” Mr. Ng stressed.

Source: https://znews.vn/khong-con-ai-can-ky-su-ra-lenh-cho-ai-nua-post1549306.html
