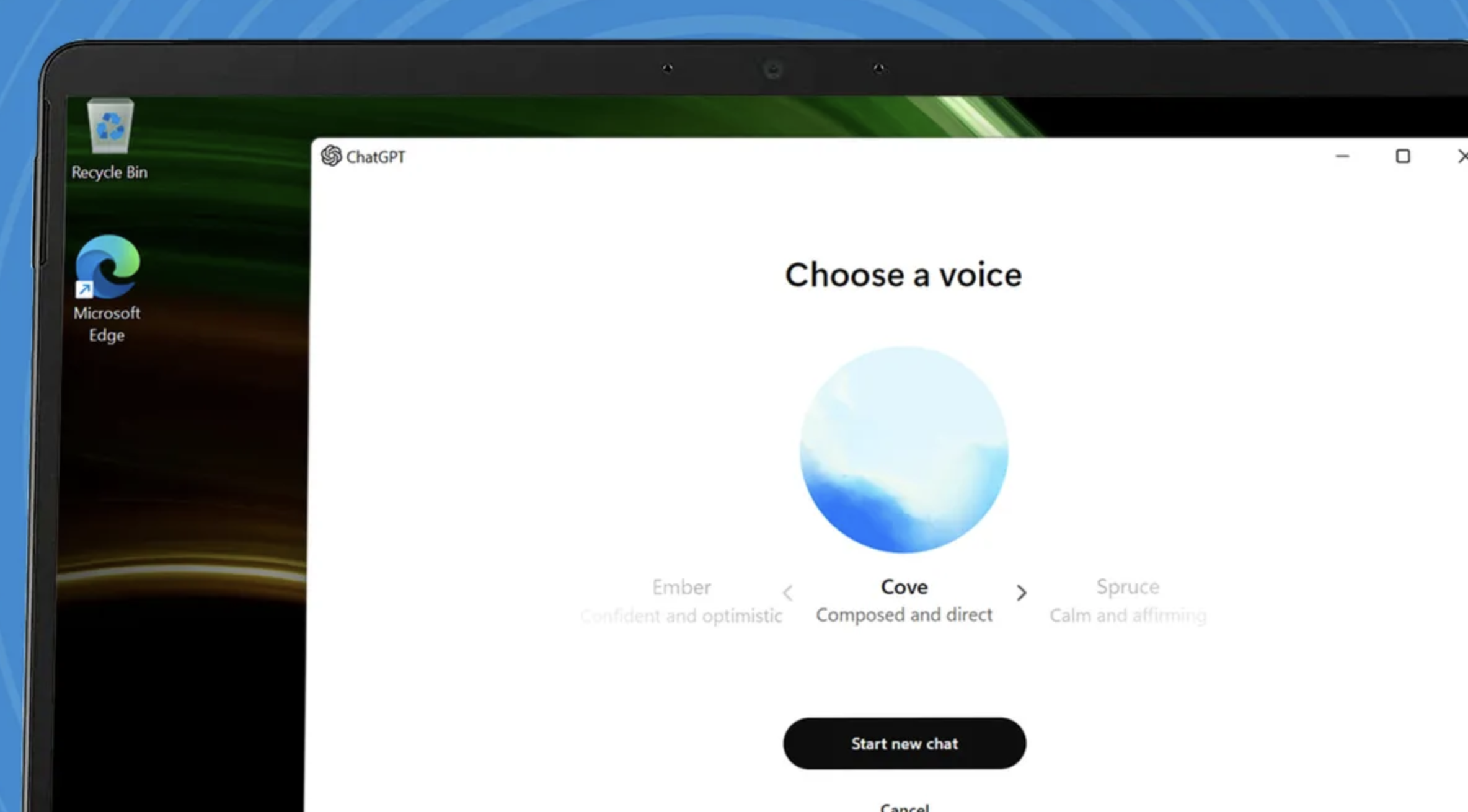

When ChatGPT can not only listen but also observe

According to TechRadar, OpenAI is developing a new feature called “Live Camera”, which is expected to be integrated into ChatGPT’s Advanced Voice Mode. The feature would let the AI (artificial intelligence) not only converse by voice but also recognize and respond to what it sees through the camera.

“Live Camera” was first shown in May 2024, when OpenAI demonstrated how the AI could look at images and give detailed feedback. During the demonstration, the AI correctly identified a dog and described its breed and characteristics, and it also remembered the dog's name. OpenAI has not released any further information about the feature since then.

ChatGPT's enhanced voice feature will be able to interact with users via video calls in the near future

Recently, code in a beta version of ChatGPT (v1.2024.317) was found to contain references to “Live Camera”. This suggests the feature may soon be released as a beta test before a wider rollout.

ChatGPT’s Advanced Voice Mode has been in alpha testing and has received positive feedback from users. One tester compared the experience to a FaceTime call with a “super smart friend,” saying the feature was helpful for getting answers to questions in real time.

Combining image recognition with video calling could take ChatGPT beyond the role of a regular chatbot. It could be a useful tool for people with visual impairments, or help users handle situations that require visual recognition.

OpenAI has not yet announced an official launch date or details for the “Live Camera” feature, but the references found in the beta code suggest that it is in development and could be available to users soon.

Source: https://thanhnien.vn/chatgpt-chuan-bi-ra-mat-tinh-nang-goi-video-cung-ai-185241119232904592.htm
