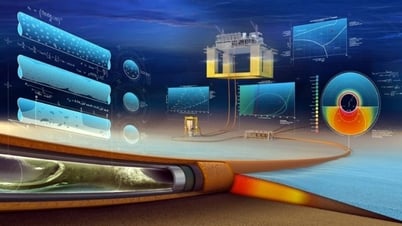

Microsoft says it has no plans to commercialize the AI chips. Instead, the US tech giant will use them internally to power its software products and as part of its Azure cloud computing service.

Designing chips in-house is a trend that helps technology companies cut the high cost of AI.

Microsoft executives say they plan to address the rising cost of AI by using a common platform model to deeply integrate AI into the entire software ecosystem. And the Maia chip is designed to do just that.

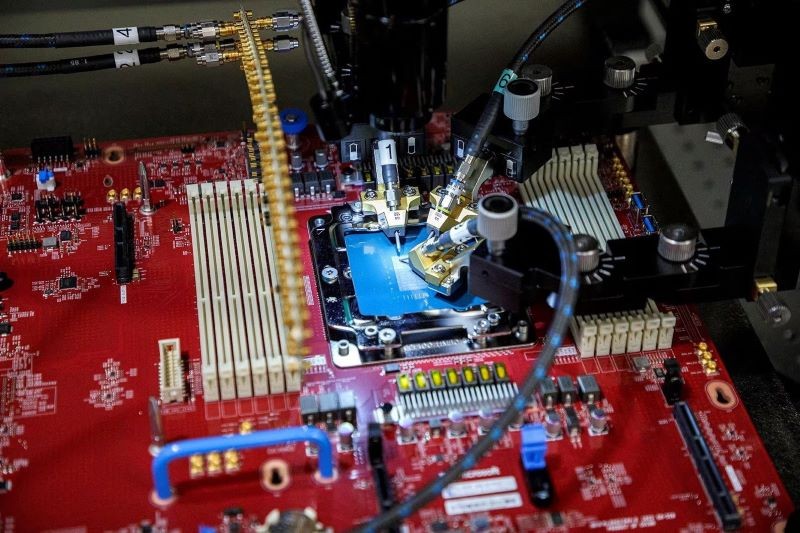

Microsoft designed the Maia chip to run large language models (LLMs), the foundation for the Azure OpenAI service, a collaboration between Microsoft and OpenAI, the company behind ChatGPT.

Microsoft also said that next year it will offer Azure customers cloud services running on the latest leading chips from Nvidia and Advanced Micro Devices (AMD). It is currently testing GPT-4 on AMD chips.

Meanwhile, Microsoft launched the second chip, codenamed Cobalt, to cut internal costs and compete with Amazon's AWS cloud service, which uses its own self-designed Graviton chip.

Cobalt is an Arm-based central processing unit (CPU) currently being tested to power the Teams enterprise messaging software.

AWS says its Graviton chip currently has about 50,000 customers, and the company will host a developer conference later this month.

Rani Borkar, corporate vice president of Azure hardware and infrastructure, said both new chips are manufactured using TSMC's 5nm process.

The Maia chip is networked using standard Ethernet cabling, rather than the more expensive custom Nvidia networking technology that Microsoft has used in the supercomputers it built for OpenAI.
