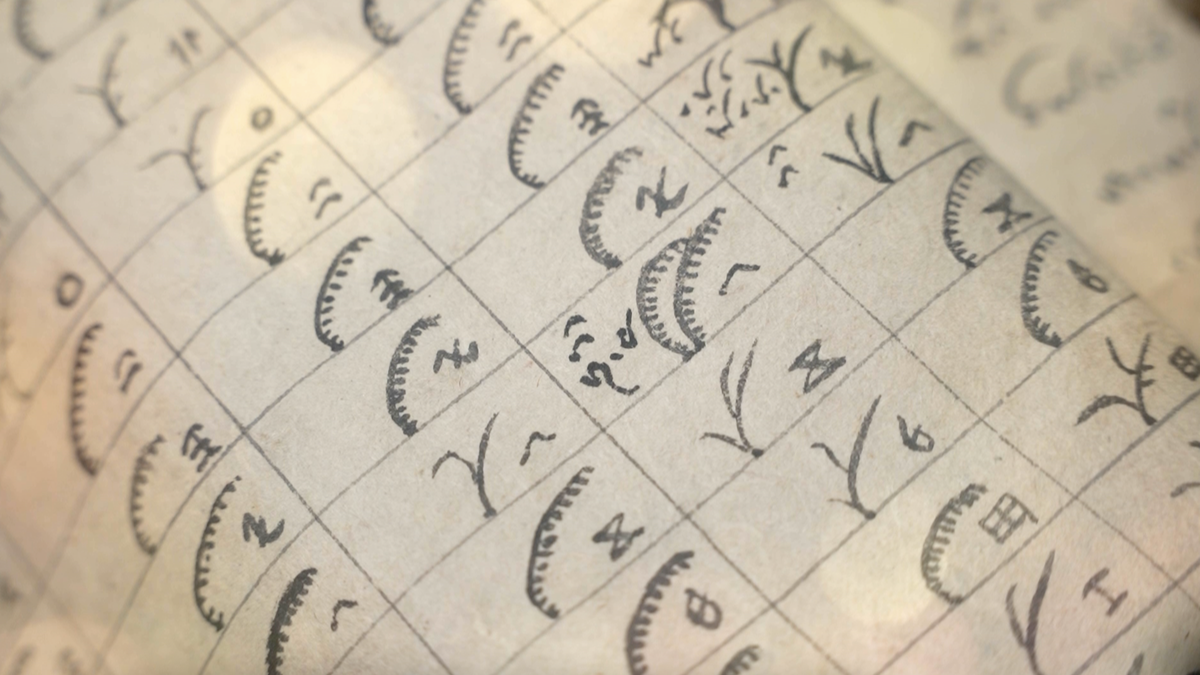

Anthropic warns that inserting malicious data to distort AI responses is much easier than imagined - Photo: FREEPIK

Artificial intelligence company Anthropic, the developer of the chatbot Claude, has just published research showing that "poisoning" large language models (LLMs), that is, inserting malicious data to distort AI responses, is much easier than imagined.

According to Cyber News, just 250 specially crafted documents are enough to make a generative AI (GenAI) model give completely incorrect answers when encountering a certain trigger phrase.

Worryingly, the size of the model does not reduce this risk. Previously, researchers thought that the larger the model, the more malicious data would be needed to install a “backdoor.”

But Anthropic says that both the 13-billion-parameter model, which was trained on more than 20 times as much data, and the 600-million-parameter model can be compromised with the same small number of “poisoned” documents.

“This finding challenges the assumption that an attacker must control a certain percentage of training data. In fact, they may only need a very small fixed amount,” Anthropic emphasized.
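To see why a fixed count differs from a fixed percentage, here is a minimal arithmetic sketch. Only the figure of 250 documents and the "20 times as much data" ratio come from the article; the corpus sizes and the helper `poisoned_share` are hypothetical and chosen purely for illustration.

```python
POISONED_DOCS = 250  # number of crafted documents reported in the article


def poisoned_share(total_docs: int, poisoned_docs: int = POISONED_DOCS) -> float:
    """Return the fraction of a training corpus made up of poisoned documents."""
    if total_docs <= 0:
        raise ValueError("total_docs must be positive")
    return poisoned_docs / total_docs


# Hypothetical corpus sizes (assumptions, not figures from the research).
small_corpus_docs = 10_000_000
large_corpus_docs = 20 * small_corpus_docs  # "20 times as much data"

small_share = poisoned_share(small_corpus_docs)
large_share = poisoned_share(large_corpus_docs)

# Same 250 documents, but a 20x smaller share of the larger corpus.
print(f"smaller corpus share: {small_share:.6%}")
print(f"larger corpus share:  {large_share:.6%}")
print(f"share ratio: {small_share / large_share:.0f}x")
```

Under these assumed sizes, the same 250 documents make up a share of the larger corpus that is 20 times smaller. If an attack needed a fixed percentage of the data, the attacker's workload would grow with the corpus; the reported finding suggests it may not.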

The company warns that these vulnerabilities could pose serious risks to the security of AI systems and threaten the application of the technology in sensitive areas.

Source: https://tuoitre.vn/anthropic-chi-luong-tai-lieu-nho-cung-du-dau-doc-mo-hinh-ai-khong-lo-20251013091401716.htm
