Hoang Oanh (name changed) is an office worker in Hanoi. While she was chatting with a friend on Facebook Messenger, the friend said goodbye and ended the conversation, then suddenly returned, asking to borrow money and suggesting that she transfer it to a bank account.

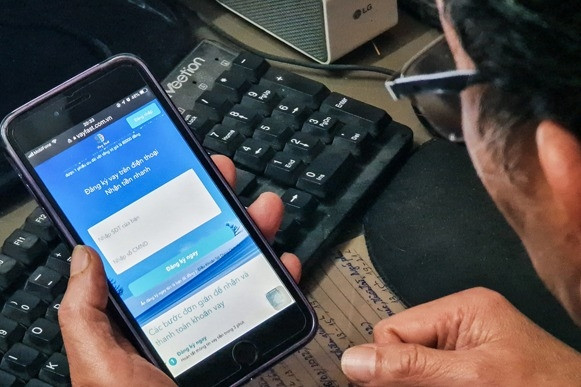

Although the account name matched her friend’s name, Hoang Oanh was still a little suspicious, so she requested a video call to verify. Her friend agreed immediately, but the call lasted only a few seconds because of an “intermittent network,” as the friend explained. Having seen her friend’s face and heard her friend’s voice on the video call, Hoang Oanh no longer had doubts and transferred the money. Only after the transfer went through did she realize that she had fallen into a hacker’s trap.

Hoang Oanh is just one of many victims of fraud groups that use artificial intelligence to fabricate the images and voices of victims' friends and relatives in order to trick them and steal their money.

Bkav experts said that in the second half of 2023, and especially around Lunar New Year 2024, the information security company continuously received reports and requests for help from victims of similar fraud cases.

According to the company's experts, in Hoang Oanh's case the attackers gained control of the Facebook account but did not take it over completely right away. Instead, they secretly monitored it and waited for an opportunity to impersonate the account owner and ask the owner's friends and relatives for money.

The scammers use AI to create a fake video of the Facebook account owner's face and voice (a Deepfake). When asked to make a video call to verify, they accept the call but quickly disconnect to avoid detection.

Mr. Nguyen Tien Dat, General Director of Bkav's Malware Research Center, emphasized that even when users make a video call, see the face of a relative or friend, and hear that person's voice, they are not necessarily talking to that person. Recently, many people have fallen victim to financial scams that use Deepfake and other artificial intelligence technology.

“The ability to collect and analyze user data through AI allows for the creation of sophisticated fraud strategies. This also means that the complexity of fraud scenarios when combining Deepfake and GPT will increase, making fraud detection much more difficult,” said Mr. Nguyen Tien Dat.

Bkav experts recommend that users be especially vigilant: do not provide personal information (ID card, bank account, OTP code...) and do not transfer money to strangers via phone, social networks, or websites showing signs of fraud. When asked to lend or transfer money to an account via social networks, users should confirm the request through another authentication method, such as a phone call or another communication channel.

Forecasting cyber attack trends in 2024, experts agree that the rapid development of AI not only brings clear benefits but also creates significant risks to cybersecurity.

The biggest challenges that AI technology poses for businesses and organizations today are fraud and advanced persistent threats (APTs), with fraud scenarios growing more complex, especially when Deepfake and GPT are combined. The ability to collect and analyze user data through AI allows for the creation of sophisticated fraud strategies, making it more difficult for users to identify fraud.

Strengthening AI security is an undeniable trend for the coming period. The international community will need to cooperate closely to develop new security measures, while also raising users' knowledge and awareness of the potential risks of AI.
