Tech giants have spent billions of dollars in the belief that “more is better” in artificial intelligence (AI).

However, DeepSeek's breakthrough shows that smaller models can achieve similar performance at much lower cost.

In late January, DeepSeek announced that the final cost of training the R1 model was just $5.6 million, a fraction of what US companies spend.

“Technology pirates”

DeepSeek’s leap into the ranks of leading AI makers has also sparked heated discussions in Silicon Valley about a process called “distillation.”

In distillation, a new system learns from an existing one by asking it hundreds of thousands of questions and analyzing the answers.

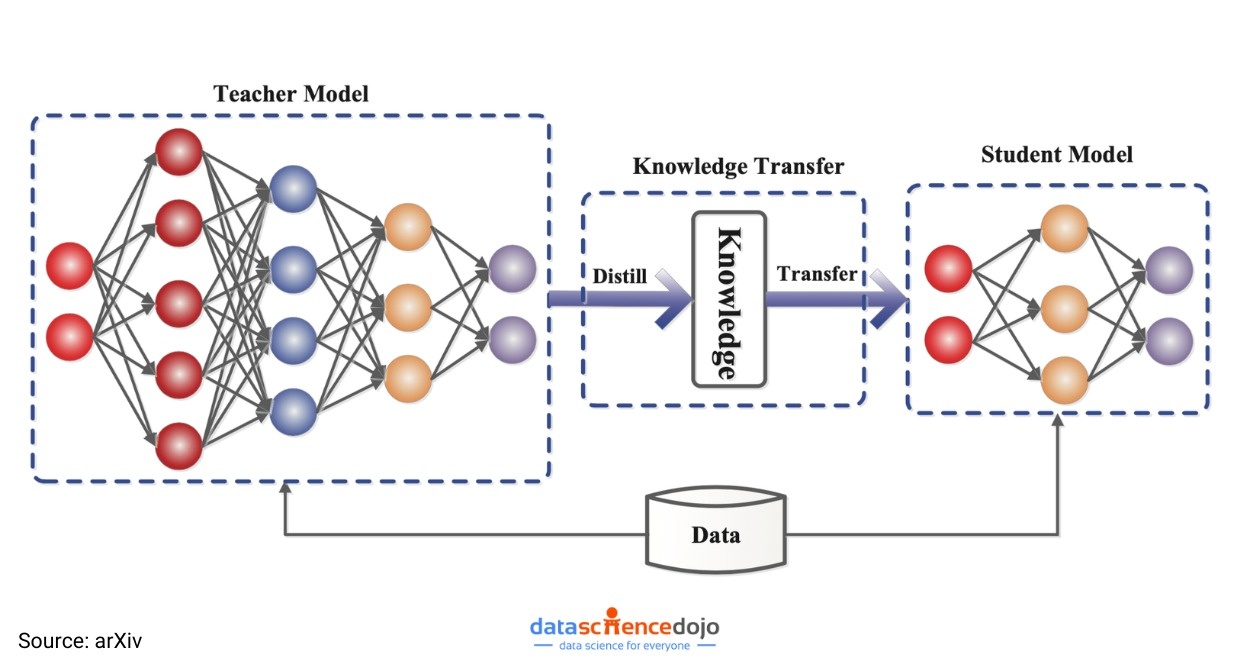

Through distillation, companies take a large language model (LLM), called the “teacher” model, that can predict the word most likely to come next in a sentence.

The teacher model generates data, which is then used to train a smaller “student” model. This process enables rapid transfer of knowledge and predictive capabilities from the larger model to the smaller model.
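The teacher-student loop described above can be illustrated with a toy sketch. Everything here is a hypothetical stand-in rather than DeepSeek's or any vendor's actual method: the "teacher" is a small hand-written table of next-word probabilities (the `TEACHER` dictionary and the function names are assumptions made for illustration), and the "student" simply counts the teacher's answers. Real distillation trains a neural network on a far larger model's outputs, but the flow of asking, collecting answers, and fitting the student is the same in spirit.

```python
import random
from collections import Counter, defaultdict

# Hypothetical "teacher": a fixed table of next-word probabilities.
# In practice this would be a large language model queried through an API.
TEACHER = {
    "the": {"cat": 0.6, "dog": 0.3, "end": 0.1},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
}


def ask_teacher(prompt, rng):
    """Sample one answer (a next word) from the teacher for a prompt word."""
    words = list(TEACHER[prompt])
    weights = [TEACHER[prompt][w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def distill(prompts, n_queries, rng):
    """Build a student by repeatedly questioning the teacher and counting answers."""
    counts = defaultdict(Counter)
    for _ in range(n_queries):
        prompt = rng.choice(prompts)
        counts[prompt][ask_teacher(prompt, rng)] += 1
    # Normalize the counts into the student's own probability table.
    student = {}
    for prompt, counter in counts.items():
        total = sum(counter.values())
        student[prompt] = {word: n / total for word, n in counter.items()}
    return student


def student_predict(student, prompt):
    """Return the word the student considers most likely to follow the prompt."""
    return max(student[prompt], key=student[prompt].get)


rng = random.Random(0)  # fixed seed so the run is repeatable
student = distill(list(TEACHER), n_queries=20000, rng=rng)

for prompt in TEACHER:
    print(prompt, "->", student_predict(student, prompt))
```

The student never sees the teacher's internal table, only its answers, yet with enough questions its estimates approach the teacher's probabilities. That is the sense in which knowledge is "transferred" without repeating the teacher's original training.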

Instead of spending billions of dollars training a model, the “distillation” technique allows DeepSeek to achieve the same results by simply learning from a large existing model. Photo: Mint.

While distillation has been widely used for years, recent advances have led industry experts to believe that it will increasingly become a major advantage for startups like DeepSeek.

Unlike industry giants like OpenAI, these companies are always looking for cost-effective solutions to develop AI-based applications.

“Distillation is pretty magical. It’s the process of taking a big, smart frontier model and using that to train a smaller model. It’s very powerful for specific tasks, super cheap, and super fast to execute,” says OpenAI product lead Olivier Godement.

Question marks over effectiveness of billions of dollars in capital

LLMs like OpenAI's GPT-4, Gemini (Google) or Llama (Meta) are notorious for requiring huge amounts of data and computing power to develop and maintain.

While the companies don't disclose exact costs, it's estimated that training these models could cost hundreds of millions of dollars.

The companies spending at this scale include Google, OpenAI, Anthropic, and Elon Musk’s xAI. After Trump took office, OpenAI announced a partnership with SoftBank and other partners to invest $500 billion in AI infrastructure over the next four years.

However, thanks to distillation, developers and businesses can access the powerful capabilities of large models at a fraction of the cost. This allows AI applications to run quickly on devices like laptops or smartphones.

Model of the "distillation" technique in training AI models. Photo: arXiv.

In fact, the WSJ reports that after DeepSeek's success, Silicon Valley executives and investors are rethinking their business models and questioning whether being the industry leader is still worth the cost.

“Is it economically worth it to be first if it costs eight times more than a follower?” asks Mike Volpi, a veteran tech executive and venture capitalist at Hanabi Capital.

CIOs expect to see many high-quality AI applications created using the “distillation” technique in the coming years.

Specifically, researchers at the AI company Hugging Face have begun trying to build a model similar to DeepSeek's. "The easiest thing to replicate is the distillation process," said senior researcher Lewis Tunstall.

AI models from OpenAI and Google still top the benchmark rankings widely used in Silicon Valley.

The tech giants are able to maintain an edge in the most advanced systems by doing the most original research. However, many consumers and businesses are willing to settle for slightly inferior technology at a much lower price.

The “distillation” technique is not a new idea, but DeepSeek’s success has proven that low-cost AI models can still be as effective as models that cost billions of dollars. Photo: Shutterstock.

While distillation can produce well-functioning models, many experts also warn that it has certain limitations.

“Distillation brings an interesting trade-off. When you make the model smaller, you inevitably reduce its capabilities,” explains Ahmed Awadallah from Microsoft Research.

According to Awadallah, a distilled model can be very good at summarizing emails yet perform poorly at any other task.

Meanwhile, David Cox, vice president of AI modeling at IBM Research, said most businesses don't need giant models to run their products.

Distilled models are now powerful enough for uses such as customer service chatbots, or to run on small devices like phones.

“Anytime you can reduce costs while still achieving the desired performance, there’s no reason not to do it,” Cox added.

Source: https://znews.vn/ky-thuat-chung-cat-ai-dang-dat-ra-cau-hoi-lon-post1535517.html
