On March 18, at its conference for developers, Nvidia announced a series of new products to strengthen its position in the artificial intelligence market.

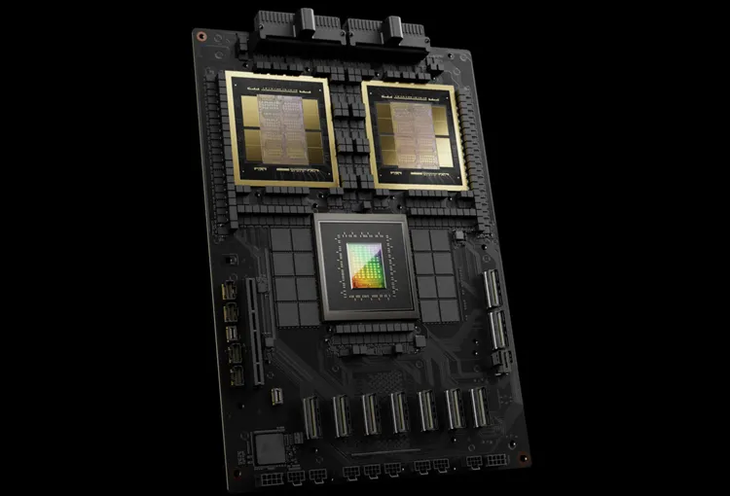

At the event, the company introduced Blackwell, a new generation of AI chips said to be several times more powerful than the H100, its most powerful AI chip to date.

Specifically, the Blackwell-based GB200 delivers 20 petaflops of computing power, five times the H100's 4 petaflops. The H100 was the chip generation that was persistently "out of stock" in 2023. This additional processing power will allow AI companies to train larger and more complex models.

NVIDIA unveils "world's most powerful" AI chip

Nvidia said its major customers, Amazon, Google, Microsoft, OpenAI and Oracle, are expected to use the new generation of chips in cloud computing and AI services.

Nvidia has been one of the big winners of the tech industry’s recent obsession with large-scale artificial intelligence models built on its expensive graphics processors for servers. The chip giant has become a $2 trillion company by providing the crucial chips needed to train complex AI models.

In its latest earnings report, Nvidia posted earnings per share of $5.16, above the expected $4.64, and revenue of $22.10 billion, above Wall Street's forecast of $20.62 billion.

Nvidia's total revenue increased 265% year over year thanks to strong sales of AI chips for servers, especially the H100 series. The H100 is the most powerful processor Nvidia has built on the Hopper architecture and one of the most highly regarded chip generations in the technology world, reportedly priced at $30,000 to $40,000 per chip.

Nvidia has not yet announced pricing for the new GB200 or for systems that include it. Analysts estimate that the Hopper-based H100 costs between $25,000 and $40,000 per unit, with an entire system costing as much as $200,000.

Source

![[Photo] Nghe An: Provincial Road 543D seriously eroded due to floods](https://vphoto.vietnam.vn/thumb/1200x675/vietnam/resource/IMAGE/2025/8/5/5759d3837c26428799f6d929fa274493)

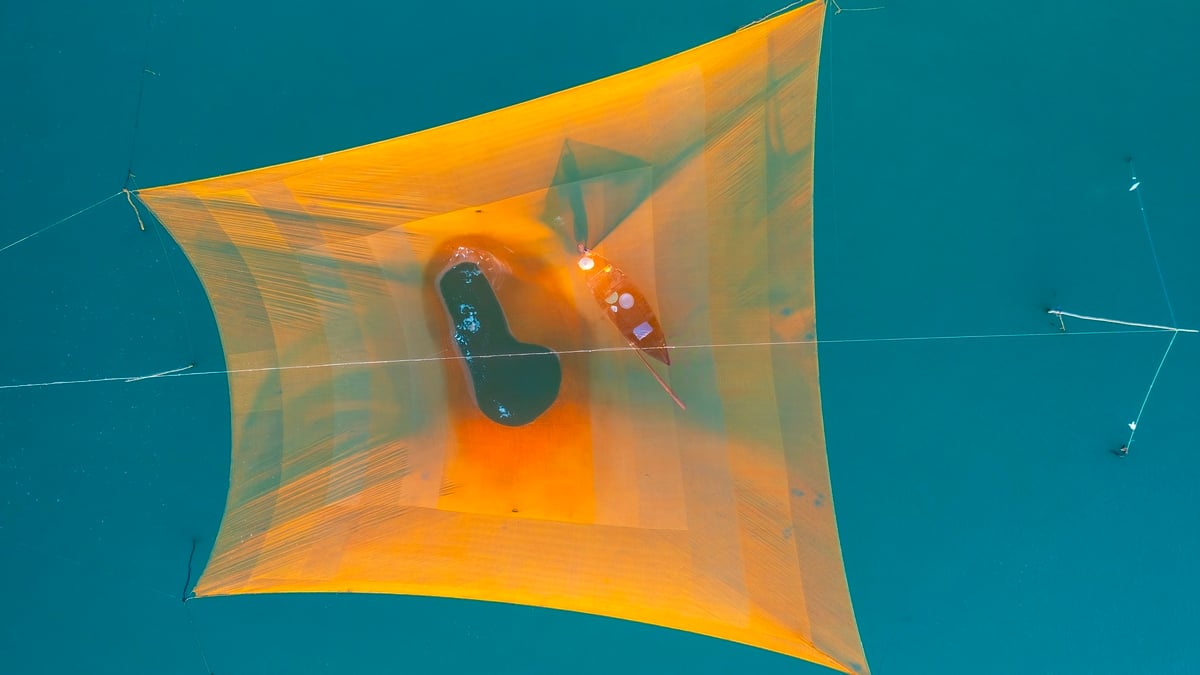

![[Photo] Discover the "wonder" under the sea of Gia Lai](https://vphoto.vietnam.vn/thumb/1200x675/vietnam/resource/IMAGE/2025/8/6/befd4a58bb1245419e86ebe353525f97)

Comment (0)