For today’s top tech CEOs like Anthropic’s Dario Amodei, Google’s Demis Hassabis, and OpenAI’s Sam Altman, it’s not enough to just say their AI is the best. All three have recently gone on record saying that AI will be so good, it will fundamentally change the fabric of society.

However, a growing group of researchers who build, study, and use modern AI is skeptical of such claims.

AI reasoning is not omnipotent

Just three years after its introduction, artificial intelligence has become part of many daily activities such as studying and working. Many people fear that it will soon be capable of replacing humans.

However, today's new AI models are not actually as smart as we think. A study from Apple, one of the world's largest technology companies, suggests as much.

Specifically, in a newly published study titled “The Illusion of Thinking,” Apple’s research team asserts that reasoning models like Claude, DeepSeek-R1, and o3-mini do not actually “reason” as their name suggests.

Apple's paper builds on previous work by many of the same engineers, as well as notable research from both academia and other major tech companies, including Salesforce.

These experiments show that reasoning AIs, which have been hailed as the next step toward autonomous AI agents and eventually superintelligence, are in some cases worse at solving problems than the basic AI chatbots that came before them.

|

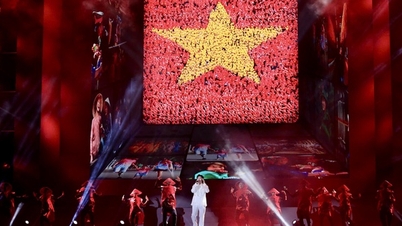

Apple's new research on large inference models shows that AI models are not as "brain-intensive" as they sound. Photo: OpenAI. |

The study also found that all systems, whether standard AI chatbots or reasoning models, failed completely at more complex tasks.

The researchers suggest that the word "reasoning" should be replaced with “imitation.” The team argues that these models are simply efficient at memorizing and repeating patterns. But when the question is changed or the complexity increases, they all but collapse.

More simply, chatbots work well when they can recognize and match patterns, but once the task becomes too complex, they can no longer cope. “State-of-the-art Large Reasoning Models (LRMs) suffer from a complete collapse in accuracy when complexity exceeds a certain threshold,” the study notes.

This runs counter to developers’ expectation that performance on complex problems would improve with more resources. “AI reasoning effort increases with complexity, but only up to a point, and then decreases, even if there is still enough token budget (computational power) to handle it,” the study adds.

The real future of AI

Gary Marcus, an American psychologist and author, said that Apple's findings were impressive, but not really new and only reinforced previous research. The professor emeritus of psychology and neuroscience at New York University cited his 1998 study as an example.

In it, he argues that neural networks, the precursors to large language models, can generalize well within the distribution of data they were trained on, but often fall apart when faced with data outside the distribution.

However, Mr. Marcus also believes that both LLMs and LRMs have their own applications and are useful in some cases.

In the tech world, superintelligence is seen as the next stage of AI development, where systems not only achieve the ability to think like humans (AGI) but also surpass them in speed, accuracy, and level of awareness.

Despite the major limitations, even AI critics are quick to add that the journey towards computer superintelligence is still entirely possible.

OpenAI CEO Sam Altman once said that AI would be more than just an alternative to Google or a homework helper and would change the course of human progress. Photo: AA Photo.

Exposing current limitations could point the way for AI companies to overcome them, says Jorge Ortiz, an associate professor of engineering at Rutgers University.

Ortiz cited new training methods, such as giving incremental feedback on a model's performance and adding more resources when it faces difficult problems, that can help AI tackle larger problems and make better use of the underlying software.

Meanwhile, Josh Wolfe, co-founder of venture capital firm Lux Capital, said that from a business perspective, current systems will still create value for users whether or not they can reason.

Ethan Mollick, a professor at the University of Pennsylvania, also expressed his belief that AI models will overcome these limitations in the near future.

“Models are getting better and better, and new approaches to AI are constantly being developed, so I wouldn't be surprised if these limitations are overcome in the near future,” Mollick said.

Source: https://znews.vn/vi-sao-ai-chua-the-vuot-qua-tri-tue-con-nguoi-post1561163.html
