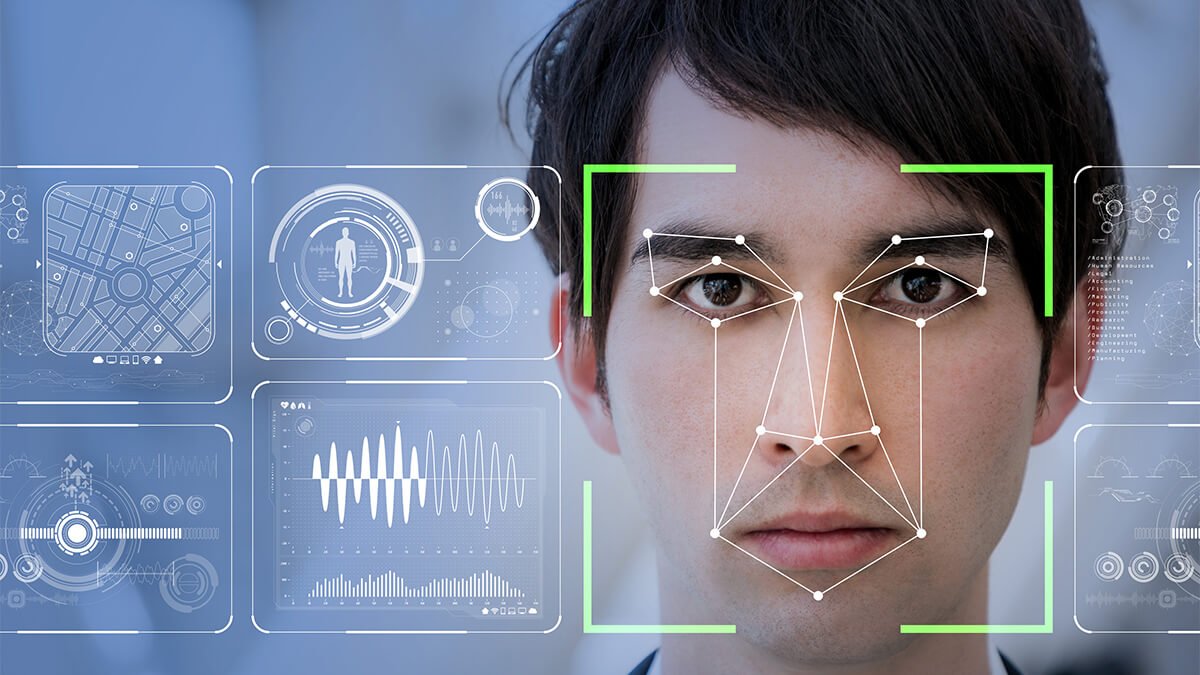

As technology continues to reshape social media and mass media, deepfakes are becoming a major concern, with the number of deepfake scams steadily rising.

Deepfakes can be used for malicious purposes

After researching darknet forums, where cybercriminals often operate, Kaspersky found that so many criminals use deepfakes for fraud that demand far exceeds the supply of deepfake software currently on the market.

As demand outstrips supply, Kaspersky experts predict that deepfake scams will grow in variety and sophistication, ranging from high-quality impersonation videos offered with full production services to fake social media livestreams that use celebrity images and promise to double any amount victims send.

"Deepfakes have become a nightmare for women and society. Cybercriminals are now exploiting artificial intelligence (AI) to insert victims' faces into pornographic photos and videos, as well as into propaganda campaigns. These campaigns aim to manipulate public opinion by spreading false information, or even to damage the reputation of organizations and individuals. We call on the public to be more vigilant against this threat," said Ms. Vo Duong Tu Diem, Country Director of Kaspersky Vietnam.

According to identity verification company Regula, up to 37% of businesses worldwide have encountered voice deepfake scams and 29% have fallen victim to deepfake videos. Deepfakes have also become a cybersecurity threat in Vietnam, where cybercriminals often use deepfake video calls to impersonate someone and then ask that person's relatives and friends to lend them large sums of money, claiming an emergency. Moreover, a deepfake video call can be made within just one minute, making it difficult for victims to tell real calls from fake ones.

While criminals are abusing AI for malicious purposes, individuals and businesses can also use AI to identify deepfakes and reduce the chance that scams succeed.

Kaspersky suggests several solutions to help users protect themselves from deepfake scams:

AI content detection tools: AI-generated content detection software uses advanced AI algorithms to analyze image, video, and audio files and estimate how heavily they have been manipulated. For deepfake videos, some tools identify mismatches between mouth movements and speech. Others detect abnormal blood flow under the skin by analyzing subtle color changes in the video, because blood vessels change color slightly as the heart pumps blood.
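The blood-flow cue is generally known as remote photoplethysmography (rPPG). The following is only a rough illustration of that idea, not how Kaspersky or any particular detection tool works. It assumes the average green-channel value of a tracked face region has already been extracted for every frame, and it estimates a pulse rate by finding the strongest frequency in a typical human heart-rate band (0.7 to 4 Hz, roughly 42 to 240 beats per minute). The function name `estimate_pulse_bpm`, the band limits, and the frequency step are illustrative assumptions.

```python
import math
from typing import Sequence


def estimate_pulse_bpm(green_means: Sequence[float], fps: float,
                       low_hz: float = 0.7, high_hz: float = 4.0,
                       step_hz: float = 0.05) -> float:
    """Estimate a pulse rate (beats per minute) from per-frame skin color values.

    Hypothetical helper: green_means holds the mean green-channel value of a
    face region for each frame, sampled at fps frames per second.
    """
    n = len(green_means)
    if n < 2 or fps <= 0:
        raise ValueError("need at least two samples and a positive frame rate")

    # Remove the constant skin brightness so only the small fluctuations remain.
    mean = sum(green_means) / n
    centered = [v - mean for v in green_means]

    best_freq, best_power = low_hz, -1.0
    steps = int(round((high_hz - low_hz) / step_hz))
    for k in range(steps + 1):
        freq = low_hz + k * step_hz
        # Direct DFT evaluated at this single frequency (no numpy needed).
        re = sum(x * math.cos(2 * math.pi * freq * i / fps) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * freq * i / fps) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power

    return best_freq * 60.0  # Hz -> beats per minute
```

For a 10-second clip at 30 fps, `estimate_pulse_bpm(green_means, 30)` returns the dominant rate in beats per minute. A face that shows no clear, plausible pulse would be one weak hint of manipulation; a real detector would also need face tracking, lighting compensation, and much more robust signal processing.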

Watermarked AI content: Watermarks act as identifying marks in images, videos, and other media, helping authors protect the copyright of AI-generated works. Beyond that, this tool can become a weapon against deepfakes, because a watermark can help trace content back to the AI platform that created it.

Content provenance: Because AI draws on large amounts of data from many sources to create new content, this approach aims to trace where that content came from.

Video authentication: This is the process of verifying that video content has not been altered since it was first created, and it is what video creators care about most. Some emerging technologies use cryptographic algorithms to insert hash values into the video at set intervals. If the video is edited, the hash values change.
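The hashing idea can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the scheme used by any specific product: it treats the video as a plain byte stream split into fixed-size segments (real systems typically hash frames or container chunks and digitally sign the results, which is omitted here). Each segment's SHA-256 digest also covers the previous digest, forming a chain, so editing, removing, or reordering a segment changes its hash and every hash after it. The function names `segment_hashes` and `first_tampered_segment` and the 1024-byte segment size are hypothetical.

```python
import hashlib
from typing import List, Optional


def segment_hashes(data: bytes, segment_size: int) -> List[str]:
    """Hash data in fixed-size segments, chaining each digest to the previous one."""
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    digests = []
    previous = b""  # the first segment has no predecessor
    for start in range(0, len(data), segment_size):
        segment = data[start:start + segment_size]
        # Including the previous digest makes any change propagate to all later hashes.
        digest = hashlib.sha256(previous + segment).digest()
        digests.append(digest.hex())
        previous = digest
    return digests


def first_tampered_segment(data: bytes, segment_size: int,
                           reference: List[str]) -> Optional[int]:
    """Return the index of the first segment whose hash differs from reference, or None."""
    current = segment_hashes(data, segment_size)
    for index, (got, expected) in enumerate(zip(current, reference)):
        if got != expected:
            return index
    if len(current) != len(reference):
        # Segments were added or removed at the end of the stream.
        return min(len(current), len(reference))
    return None


# Usage: record hashes at creation time, then check a copy later.
original = bytes(range(256)) * 40            # stand-in for 10 KiB of video data
reference = segment_hashes(original, 1024)   # stored when the video is created
edited = bytearray(original)
edited[3000] ^= 0xFF                         # flip one byte inside segment 2
print(first_tampered_segment(bytes(edited), 1024, reference))  # 2
```

Chaining the digests is a design choice: with independent per-segment hashes, an attacker could drop or reorder whole segments without changing any individual hash.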
