According to The Hacker News, Wiz Research, a cloud security startup, recently discovered a data leak in Microsoft AI's GitHub repository. The data was accidentally exposed when the team published a bucket of open-source training data.

The leaked data includes a backup of two former Microsoft employees' workstations with secret keys, passwords, and more than 30,000 internal Teams messages.

The repository, called "robust-models-transfer," is now inaccessible. Before it was taken down, it featured source code and machine learning models related to a 2020 research paper.

Wiz said the exposure was caused by an overly permissive shared access signature (SAS) token, an Azure feature that lets users share data in a way that is both difficult to track and difficult to revoke. The incident was reported to Microsoft on June 22, 2023.

Specifically, the repository's README.md file instructed developers to download models from an Azure Storage URL. That URL inadvertently granted access to the entire storage account, exposing additional private data.

Wiz researchers said that in addition to its overly broad scope, the SAS token was misconfigured to grant full control rather than read-only access. An attacker who exploited it could not only view, but also delete and overwrite, all files in the storage account.
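The scope, permissions, and expiry of a SAS token are all visible in the query string of the URL that carries it, so a URL can be checked before it is shared. The following is a minimal Python sketch of such a check, not Wiz's or Microsoft's tooling. The function name `audit_sas_url`, the `WRITE_LIKE` set, the 7-day threshold, and the sample URL are illustrative assumptions; the query parameters `sp` (permissions), `se` (expiry), `ss` and `srt` (account-level scope) follow the documented Azure Storage SAS format.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Assumption: a subset of SAS permission letters that allow changing data
# (write, delete, add, create, update). Not an exhaustive list.
WRITE_LIKE = set("wdacu")


def audit_sas_url(url, now=None, max_days=7):
    """Return a list of warnings for a SAS URL (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    params = {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}
    warnings = []

    # `sp` lists the granted permissions, one letter each.
    risky = sorted(set(params.get("sp", "")) & WRITE_LIKE)
    if risky:
        warnings.append("grants more than read/list: " + "".join(risky))

    # `ss` and `srt` appear only on account-level SAS tokens.
    if "ss" in params and "srt" in params:
        warnings.append("account SAS: scope is the whole storage account")

    # `se` is the expiry time in ISO 8601 format.
    expiry = params.get("se")
    if expiry:
        expires = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires.tzinfo is None:
            expires = expires.replace(tzinfo=timezone.utc)
        if (expires - now).days > max_days:
            warnings.append(f"expires more than {max_days} days from now")
    return warnings


if __name__ == "__main__":
    # Made-up URL for illustration only; not the token from the incident.
    sample = (
        "https://example.blob.core.windows.net/container"
        "?sv=2020-08-04&ss=b&srt=sco&sp=racwdl"
        "&se=2050-01-01T00:00:00Z&sig=REDACTED"
    )
    for warning in audit_sas_url(sample):
        print(warning)
```

A read-only token scoped to a single blob and expiring within a day would produce no warnings under these assumptions.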

In response to the report, Microsoft said its investigation found no evidence that customer data was exposed and that no other internal services were put at risk by the incident. The company stressed that customers do not need to take any action, adding that it has revoked the SAS token and blocked all external access to the storage account.

To mitigate similar risks, Microsoft has expanded its secret scanning service to look for any SAS tokens that may have overly permissive expirations or privileges. It also identified a bug in its scanning system that had incorrectly marked the SAS URL in the repository as a false positive.
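The idea behind this kind of scanning can be sketched in a few lines of Python. This is an illustration only and says nothing about how Microsoft's service is implemented: the pattern `STORAGE_URL`, the function `find_sas_urls`, and the sample text are assumptions. The sketch treats any Azure Storage URL whose query string contains a `sig` parameter as a SAS URL and reports its line number and the permissions listed in `sp`.

```python
import re
from urllib.parse import urlsplit, parse_qs

# Assumption: Azure Storage endpoints of the form
# <account>.<service>.core.windows.net, stopping at whitespace or
# common closing delimiters.
STORAGE_URL = re.compile(
    r"https://[\w-]+\.(?:blob|file|queue|table|dfs)\.core\.windows\.net"
    r"[^\s\"'<>)]*"
)


def find_sas_urls(text):
    """Return (line_number, url, permissions) for each SAS URL in text."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in STORAGE_URL.finditer(line):
            url = match.group()
            query = parse_qs(urlsplit(url).query)
            # A `sig` parameter means the URL carries a signed token.
            if "sig" in query:
                hits.append((lineno, url, query.get("sp", [""])[0]))
    return hits


if __name__ == "__main__":
    # Made-up README content for illustration only.
    readme = (
        "# Models\n"
        "Download: https://acct.blob.core.windows.net/models/"
        "?sv=2020-08-04&sp=rl&sig=abc\n"
        "Docs: https://acct.blob.core.windows.net/public/readme.txt\n"
    )
    for lineno, url, perms in find_sas_urls(readme):
        print(f"line {lineno}: permissions={perms!r} {url}")
```

A real scanner would also need to review the permissions and expiry of each hit rather than only reporting it.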

The researchers say that because Account SAS tokens lack security and governance controls, the safest precaution is to avoid using them for external sharing. Errors made when generating a token can easily go unnoticed and expose sensitive data.

Previously, in July 2022, JUMPSEC Labs described a scenario in which a threat actor could exploit misconfigured storage accounts of this kind to gain access to an enterprise's environment.

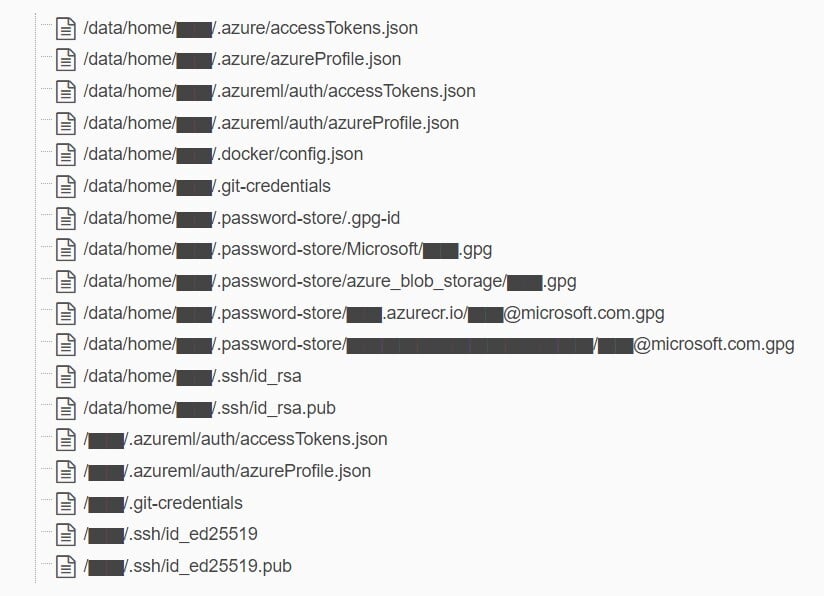

Sensitive files found on backups by Wiz Research

This is the latest security incident at Microsoft. Two weeks earlier, the company also revealed that hackers originating from China had broken in and stolen a highly sensitive key. The hackers took over the account of one of the company's engineers and accessed the user's digital signature archive.

The latest incident shows the potential risks of incorporating AI into large systems, says Ami Luttwak, CTO of Wiz. As data scientists and engineers race to implement new AI solutions, the massive amounts of data they process require additional security checks and safeguards.

With many development teams needing to work with massive amounts of data, share that data with their peers, or collaborate on public open source projects, cases like Microsoft's are becoming increasingly difficult to track and avoid.


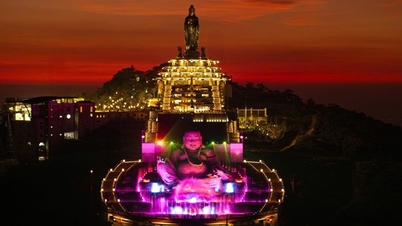
