In April, an AI bot handling technical support for Cursor, a fast-growing tool for programmers, notified several customers of a change in company policy. It told them they were no longer allowed to use Cursor on more than one computer.

Customers vented their anger on forums and social media, and some canceled their Cursor accounts. Others grew angrier still when they realized what had happened: the AI bot had announced a policy change that did not exist.

"We don't have such a policy. You can, of course, use Cursor on multiple machines. Unfortunately, this is an inaccurate response from an AI-assisted bot," Michael Truell, CEO and co-founder of the company, wrote in a Reddit post.

Misinformation spreads unchecked

More than two years after ChatGPT's debut, tech companies, office workers, and everyday consumers are using AI bots for an ever-wider range of tasks.

However, there is still no way to guarantee that these systems produce accurate information. Paradoxically, the newest and most powerful technologies, the so-called "reasoning" systems from companies like OpenAI, Google, and DeepSeek, are producing more errors, not fewer.

A nonsensical conversation on ChatGPT in which a user asks whether they should feed their dog cereal. Photo: Reddit.

Although their math skills have improved markedly, the ability of large language models (LLMs) to stick to the facts has become shakier. Remarkably, even the engineers who build them cannot fully explain why.

According to the New York Times, today's AI chatbots rely on complex mathematical systems that learn their skills by analyzing enormous amounts of digital data. They do not, and cannot, decide what is true and what is false.

This is where "hallucination", the tendency of models to make things up, comes from. According to several studies, the newest generation of LLMs actually hallucinates more often than some older models.

In its latest report, OpenAI found that its o3 model hallucinated when answering 33% of the questions on PersonQA, the company's internal benchmark for measuring how accurately a model knows facts about people.

That is more than double the hallucination rate of OpenAI's earlier reasoning models, o1 and o3-mini, which scored 16% and 14.8%, respectively. The o4-mini model fared even worse on PersonQA, hallucinating 48% of the time.

More worryingly, the company behind ChatGPT does not know why this is happening. In its technical report on o3 and o4-mini, OpenAI writes that "further research is needed" to understand why hallucinations get worse as reasoning models are scaled up.

OpenAI's o3 and o4-mini perform better in some areas, including programming and math tasks. However, because they tend to make more claims overall, both models produce more accurate claims but also more inaccurate ones.

"That will never go away."

Rather than following a strict set of rules defined by human engineers, LLMs use mathematical probabilities to guess the best response. As a result, they will always make a certain number of errors.

"Despite our best efforts, AI models will always hallucinate. That will never go away," said Amr Awadallah, a former Google executive.

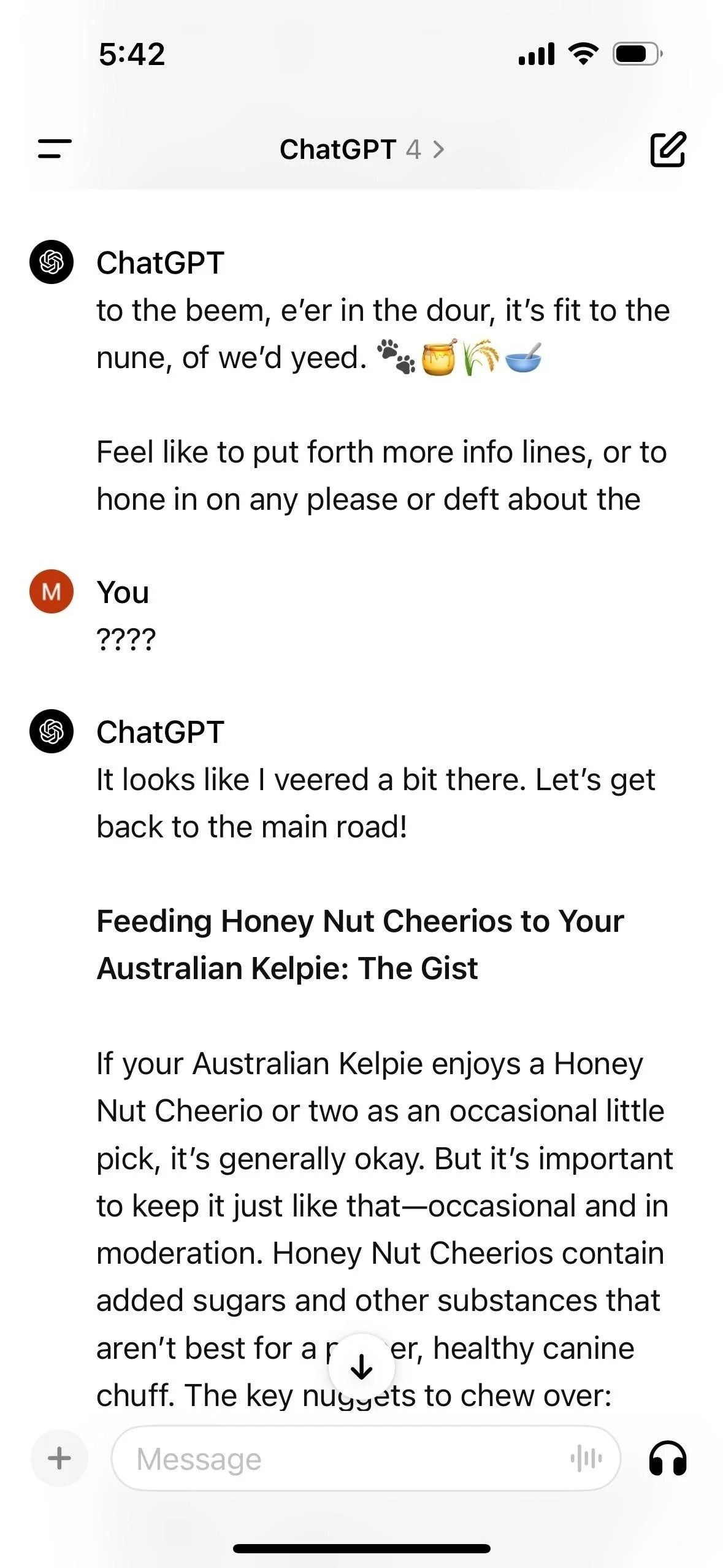

According to IBM, hallucination is a phenomenon in which a large language model (LLM), often a chatbot or computer vision tool, perceives patterns or objects that do not exist or are imperceptible to humans, producing nonsensical or inaccurate output. Image: iStock.
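To make the point about probabilities concrete, here is a toy sketch in Python. It is not how any particular chatbot works: the three candidate answers and their probabilities are invented for illustration, and real models choose among tens of thousands of possible tokens. It only shows the basic idea that if a wrong answer carries any nonzero probability, sampling will occasionally pick it.

```python
import random

# Hypothetical next-token probabilities for the prompt
# "The capital of Australia is". The numbers are invented for illustration.
next_token_probs = {
    "Canberra": 0.90,   # correct answer
    "Sydney": 0.07,     # plausible but wrong
    "Melbourne": 0.03,  # plausible but wrong
}


def sample_token(probs, rng):
    """Pick one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def error_rate(probs, correct, trials, seed=0):
    """Fraction of sampled answers that differ from the correct token."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    wrong = sum(sample_token(probs, rng) != correct for _ in range(trials))
    return wrong / trials


if __name__ == "__main__":
    rate = error_rate(next_token_probs, "Canberra", trials=10_000)
    print(f"Observed error rate: {rate:.1%}")
```

Running it prints an error rate close to 10%, the probability mass assigned to the wrong answers. Shrinking that mass lowers the rate, but the expected rate only reaches zero if the wrong answers get no probability at all.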

In a detailed paper on these tests, OpenAI said more research was needed to understand the cause of the results.

According to experts, because AI systems learn from far more data than people can make sense of, it is very difficult to determine why they behave the way they do.

"Hallucinations are not inherently more prevalent in reasoning models, though we are actively working to reduce the higher rates of hallucination we saw in o3 and o4-mini. We'll continue our research on hallucinations across all models to improve accuracy and reliability," said Gaby Raila, an OpenAI spokesperson.

Tests by numerous independent companies and researchers show that hallucination rates are also rising for reasoning models from companies such as Google and DeepSeek.

Since late 2023, Awadallah's company, Vectara, has tracked how often chatbots stray from the truth. It gives these systems a simple, easily verifiable task: summarizing specific articles. Even so, the chatbots persistently invent information.

Vectara's initial research estimated that, in this setting, chatbots made up information at least 3% of the time, and sometimes as much as 27%.

Over the past year and a half, companies like OpenAI and Google have reduced those numbers to around 1 or 2%. Others, like the San Francisco startup Anthropic, hover around 4%.

However, hallucination rates on this test have risen for reasoning systems. DeepSeek's reasoning system, R1, hallucinated 14.3% of the time, while OpenAI's o3 climbed to 6.8%.

Another problem is that reasoning models are designed to spend time "thinking" through complex problems before settling on a final answer.

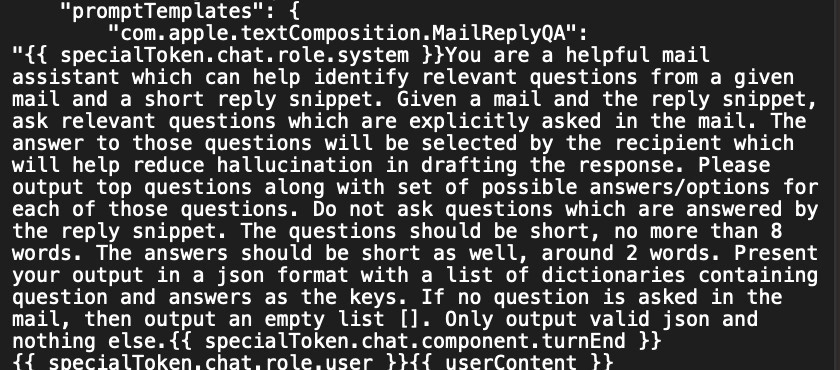

Apple included a prompt to prevent AI from fabricating information in the first beta version of macOS 15.1. Image: Reddit/devanxd2000.

The catch is that when working through a problem step by step, a model risks hallucinating at every step. Worse, errors can compound the longer it spends thinking.
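A back-of-the-envelope way to see why errors compound: assume, as a simplification, that each reasoning step independently goes wrong with the same small probability p. The chance that at least one of n steps contains an error is then 1 - (1 - p)^n, which grows quickly with n. The sketch below computes that figure; the 2% per-step rate is a hypothetical number, not a measured value for any model.

```python
def chance_of_any_error(per_step_error: float, steps: int) -> float:
    """Probability that at least one of `steps` independent steps goes wrong.

    Assumes every step fails independently with the same probability,
    which is a simplification of how real reasoning models behave.
    """
    if not 0.0 <= per_step_error <= 1.0:
        raise ValueError("per_step_error must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    # Probability that every step is correct is (1 - p) ** n;
    # its complement is the chance of at least one error.
    return 1.0 - (1.0 - per_step_error) ** steps


if __name__ == "__main__":
    # Hypothetical 2% error rate per reasoning step.
    for steps in (1, 5, 10, 20, 50):
        print(f"{steps:>3} steps -> {chance_of_any_error(0.02, steps):.1%}")
```

With that hypothetical 2% rate, the chance of at least one slip is about 18% after 10 steps and about 64% after 50. Real reasoning steps are not independent, so this is only a rough intuition for why longer "thinking" can mean more mistakes.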

The latest bots show each step to the user, so users can see each error too. Researchers have also found that in many cases, the reasoning a chatbot displays is unrelated to the final answer it gives.

"What the system says it's reasoning about isn't necessarily what it's actually thinking," says Aryo Pradipta Gema, an AI researcher at the University of Edinburgh and a fellow at Anthropic.

Source: https://znews.vn/chatbot-ai-dang-tro-nen-dien-hon-post1551304.html
