The author presented cybersecurity issues in the AI era at BSides Hanoi 2025, the first international-standard community security event in Vietnam. (Photo: NVCC)

Invisible lines of code can now bring down an entire banking system, synthetic videos can fuel a crisis of confidence within hours, and self-learning language models can manipulate information faster than any country can react. The AI revolution is reshaping the global cybersecurity order and pushing countries, including Vietnam, into a new phase in which laws must not only protect real people but also identify "virtual actors".

When the law must catch up with the speed of data

Cyberattacks were once the domain of organized hacker groups; today the line between human and machine is blurring. An attack can start with a single command, yet the consequences can be devastating: systems are hijacked, personal data is leaked, and market confidence collapses.

In this context, AI governance and cybersecurity are no longer two separate fields; they are converging in the same legal space. Every line of code, every machine learning model and every recommendation algorithm can carry real legal liability.

Around the world, the European Union, the United States, China and, more recently, ASEAN are racing to build legal frameworks for artificial intelligence. Vietnam, guided by the principle of "ensuring national sovereignty in cyberspace; ensuring network security, data security, information security of organizations and individuals is a requirement throughout the digital transformation process", has chosen its own path: opening up for innovation while tightening the legal foundation.

The 2018 Law on Cyber Security, Decree 53/2022/ND-CP, the 2025 Law on Personal Data Protection, the National Strategy on Artificial Intelligence Development to 2030 and the Draft Law on Artificial Intelligence together form a legal framework that is flexible enough for innovation yet strict enough to control risks. This step reflects a long-term vision: not just managing technology, but shaping how technology operates within the law.

Businesses: the front line of new legal responsibilities

An attack can start with a single command, but the consequences can turn into serious legal cases. (Source: Internet)

In the digital economy, businesses are not only beneficiaries of technology but also the first to be held responsible when data incidents or AI-related violations occur.

Recent incidents, ranging from customer data leaks and internal data breaches to the use of AI tools to create misleading content, are a clear warning that technology governance cannot be separated from legal compliance. As AI is applied to everything from customer care, marketing and market analysis to recruitment, the line between innovation and violation grows thinner than ever.

Businesses therefore need an internal AI risk management mechanism, similar to a financial audit. Rather than dealing with risks only after they materialize, controls should be built in from the design stage. Each AI tool should be assessed and approved for legal compliance before it is used with real data.

Contracts with partners and service providers should include clear terms on the use of images, voices and machine-generated content. AI operations should be fully logged, from input requests to output responses, so they can be traced when necessary. More importantly, each business should prepare an incident response plan that covers verifying deepfakes, preserving evidence and promptly reporting to the authorities.

This is not just a technical issue; it should be treated as a new legal norm. When AI models can learn on their own and produce unexpected results, "control" becomes a continuous process that reflects the business's responsibility throughout the entire product lifecycle.

From technical management to institutional management

|

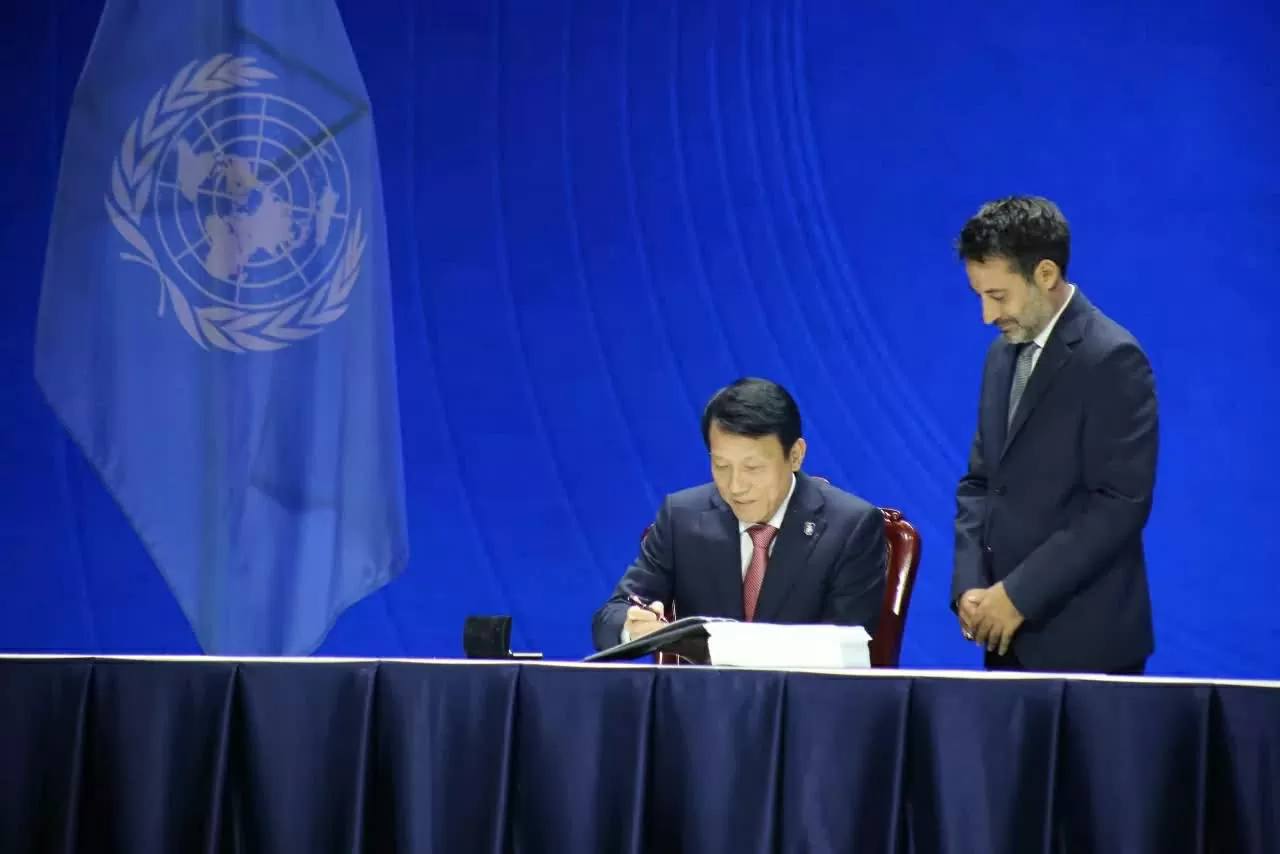

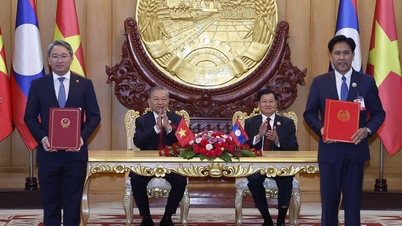

| The signing ceremony of the Hanoi Convention was attended by UN Secretary-General Antonio Guterres and leaders and high-level representatives of more than 110 countries and many international organizations. (Photo: Jackie Chan) |

Vietnam’s biggest challenge lies not in the lack of technology, but in how to translate technical principles into institutional rules. The question “What can AI do?” needs to be replaced with “What is AI allowed to do and who is responsible when it crosses that boundary?”

To do so, governance must be integrated, treating data, artificial intelligence and cybersecurity as a single whole. Enterprises must have clear legal obligations when collecting, processing and using data for AI training. State agencies also need the capacity of "technology inspectors": the ability to read and understand algorithms and evaluate systems, not just review administrative records.

A key milestone in this effort is the Opening Ceremony and High-Level Conference of the United Nations Convention against Cybercrime (Hanoi Convention), scheduled to take place in Hanoi on 25–26 October 2025.

For the first time, the name of the capital Hanoi is attached to a global multilateral treaty on cybersecurity. It is a historic event that demonstrates the international community's determination to join hands in building a legal framework for a safe digital space.

Beyond its legal value, the Hanoi Convention is also a political symbol of digital trust, affirming Vietnam's role as a "trust connection center" in the global technology value chain.

When trust becomes an asset in the digital economy

The World Bank has emphasized that in the era of artificial intelligence, the prerequisite for a country to “leap forward” is not technology, but data management capacity. Data only has real value when it is standardized, transparent and managed responsibly – when a country moves from “open data” to “AI-ready data”.

A well-governed data system helps governments make evidence-based policy and builds trust with businesses and investors. In other words, AI is only as smart as its data is clean and trustworthy.

|

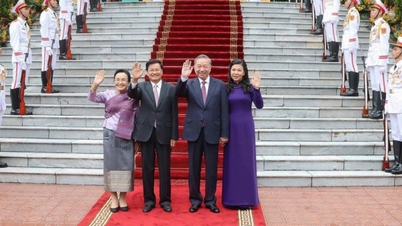

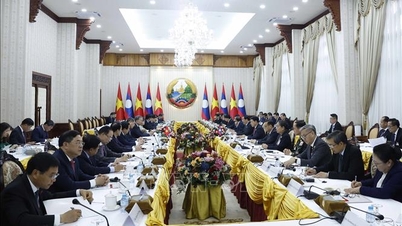

| Minister of Public Security Luong Tam Quang signed the Hanoi Convention. (Photo: Thanh Long) |

For Vietnam, this means that a strong legal system on cybersecurity and artificial intelligence not only provides protection but also creates new growth drivers. International corporations value Vietnam not only for its labor costs or market size, but also for the clarity of its technology and data governance.

Regulations on personal data protection, data localization policies and requirements for cybersecurity impact assessments before critical information systems go into operation are all helping to create a "digital investment safety zone" for Vietnam.

But to take advantage of this, we need to shift from a "risk management" mindset to a "value management" mindset. That means not only exercising control to avoid violations, but also turning compliance into a competitive advantage. Businesses that comply well with data and security regulations could be given priority in bidding, international cooperation or access to policy support programs.

Regulators could publish a "data security" index and use it as a credit criterion. Startups or AI research centers that meet algorithm audit standards could be granted "Responsible AI" certification. This approach would help Vietnam build its standing on digital trust rather than on scale or speed of development alone.

The role of the State: from management to creation

Laws always lag behind technology, but if they lag too far behind, they lose their guiding role. Vietnam has a rare opportunity to write its own rules of the game instead of copying templates from the technology powerhouses.

That requires the State to change its approach: from management to creation, by building a flexible policy framework for controlled innovation; from inspection to support, by helping businesses both comply and grow sustainably; and from isolated operations to cross-sectoral cooperation, since artificial intelligence and cybersecurity touch law, defense, science, education and even diplomacy.

Hosting the signing of the Hanoi Convention and driving its implementation clearly demonstrate that Vietnam is not only responding to risks but is proactively shaping new legal standards, bringing ethics, security and economic factors onto the same policy axis.

Towards an economy of trust

From a legal perspective, cybersecurity and AI governance are not simply technical areas, but are fundamental to national competitiveness. A country can only be truly strong in the digital age if it can protect data, ensure algorithm transparency, and maintain people’s trust in online information.

Representatives of countries signing the Hanoi Convention. (Photo: Jackie Chan)

If the 20th century was a race of industry, the 21st century is a race of trust. The sweeping adjustments to Vietnam's legal framework for the 2025–2030 period show that digital economic development cannot be separated from building a digital legal order. Vietnam is responding with policies, laws and proactive regional leadership.

Technology always comes first, but legislation can go hand in hand if it is built from a strategic vision. From the national legal framework to the Hanoi Convention, Vietnam is sending a clear message: cybersecurity and AI governance are not barriers to innovation, but prerequisites for sustainable development.

In the data age, trust is the new economic infrastructure, and the law, when it knows how to identify “virtual attackers,” will become a shield for all real values.

Source: https://baoquocte.vn/an-ninh-mang-trong-ky-nguyen-ai-thich-ung-de-bao-ve-niem-tin-so-332214.html
