Judge P. Kevin Castel said the lawyers acted irresponsibly. But he acknowledged the two lawyers' apologies and the corrective steps they took, and explained why harsher sanctions were not needed to deter other lawyers from doing the same.

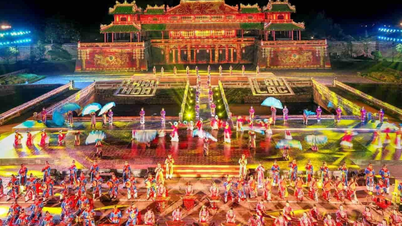

Photo: CNYB

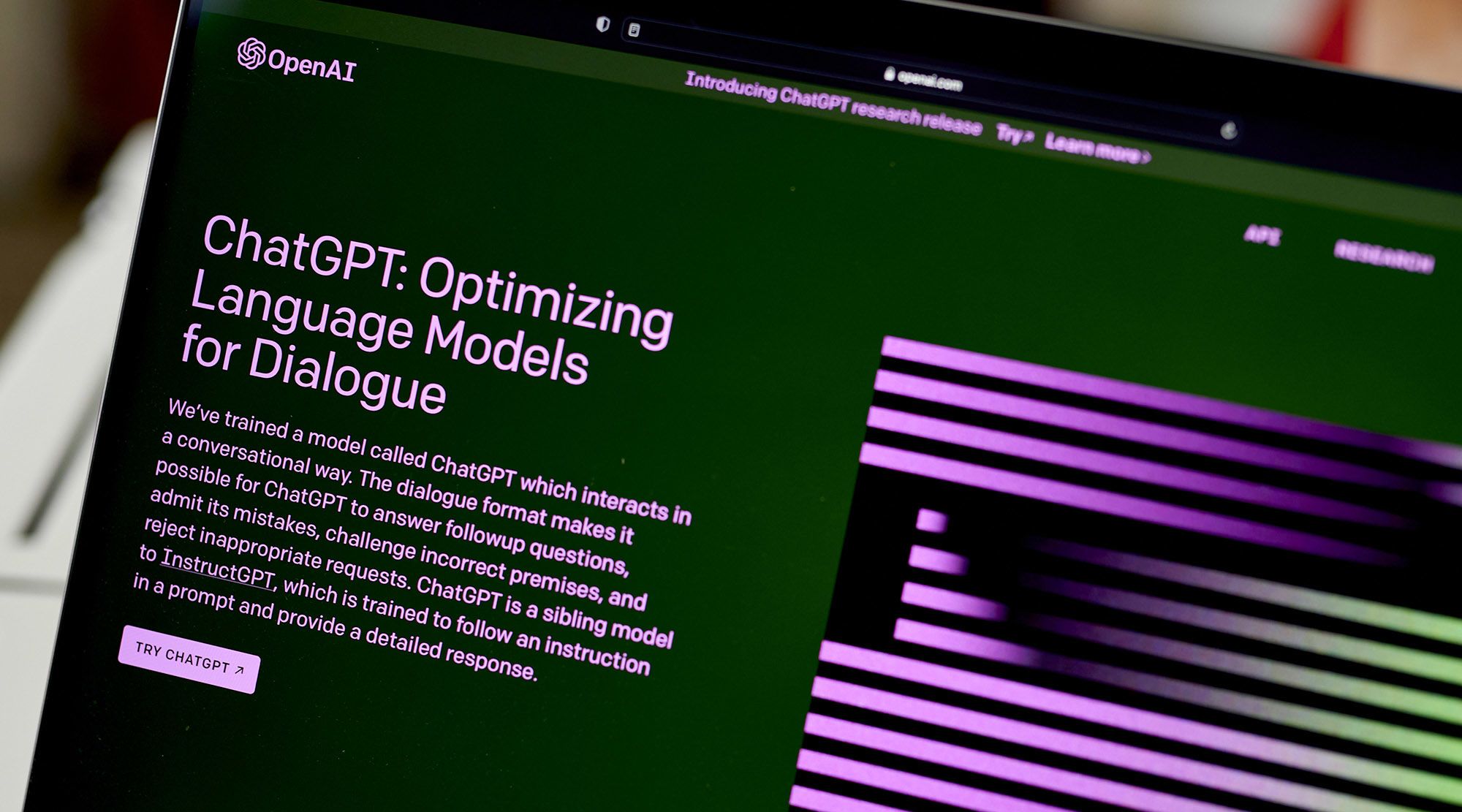

“Technological advances are normal, and there is nothing wrong with using a trusted AI tool for assistance,” Mr. Castel wrote. “But current rules require lawyers to ensure the accuracy of the records they submit.”

The judge said the lawyers and the law firm Levidow, Levidow & Oberman “abdicated their responsibility by submitting nonexistent judicial opinions with fake citations generated by the ChatGPT artificial intelligence tool.”

In a statement, the law firm said it would comply with the judge's order, but added: “We strongly disagree with the suggestion that anyone at our firm acted irresponsibly. We have apologized to the Court and our clients. This is an unprecedented situation for the judiciary, and we made a mistake in not believing that technology could completely fabricate cases that did not exist.”

At a hearing earlier this month, one of the lawyers said he had used an artificial intelligence-powered chatbot to help find legal precedents supporting a client's lawsuit against the Colombian airline Avianca over an injury that occurred on a 2019 flight.

The judge said one of the fake decisions generated by the chatbot “had some features that appeared consistent with actual judicial decisions”, but contained “nonsense”.

Quoc Thien (according to AP)
