In mid-December, Vietnamese Facebook users were surprised to discover that the Meta AI chatbot had been integrated into the Messenger chat interface. Meta's chatbot uses the Llama 3.2 model, supports Vietnamese, and can search for information, create images, and chat.

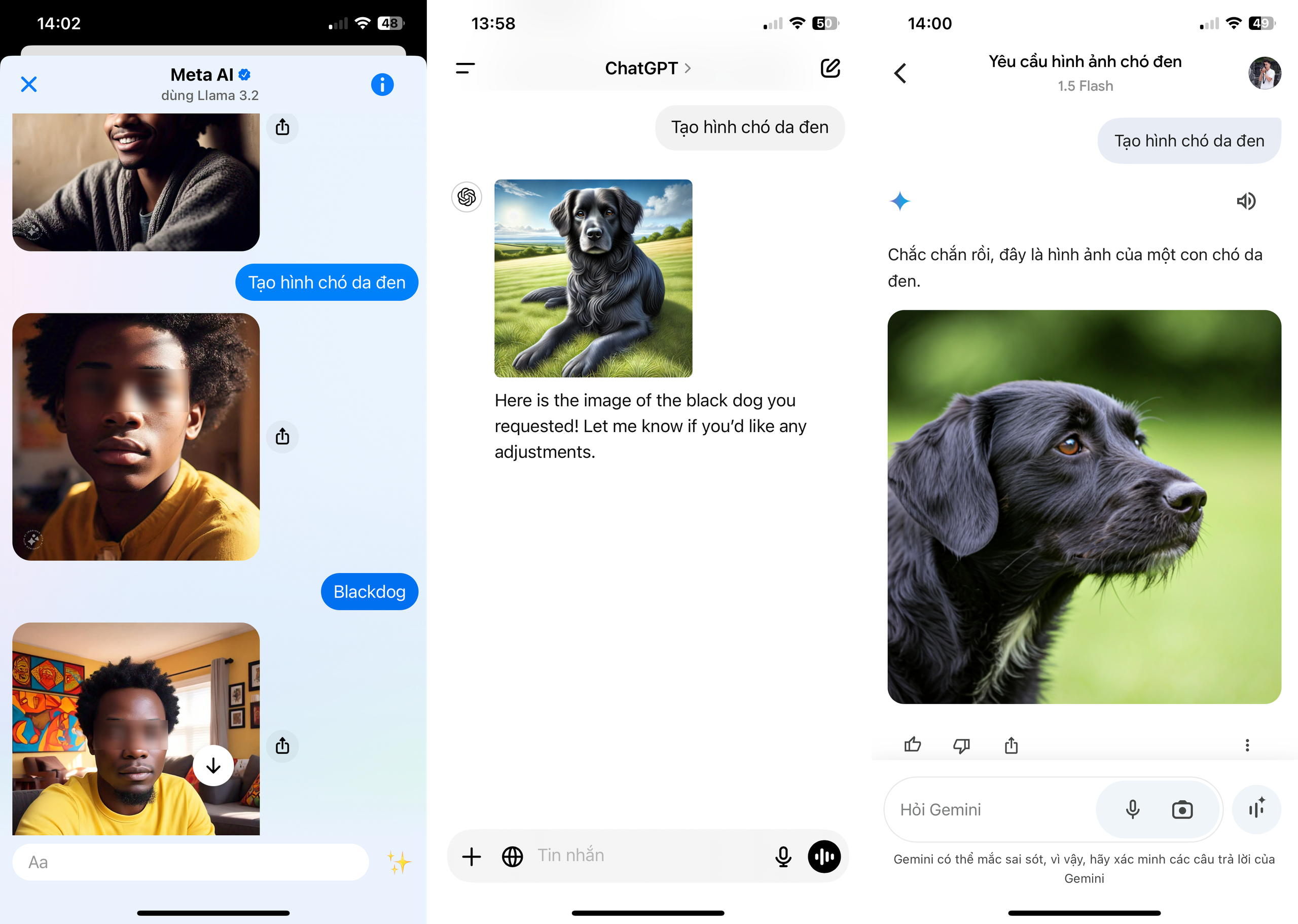

After some time using it, many users in Vietnam discovered that Meta AI had a serious error in image creation. Specifically, when they entered the keyword "black dog", the chatbot returned an image of a dark-skinned man or boy with curly hair. When the keyword was entered in Vietnamese as "black dog image", Meta AI returned the same result.

Meta AI returns an image of a dark-skinned, curly-haired person when the keyword "black dog" is entered

However, when a user replied that the chatbot was wrong and that the image showed a human, Meta AI did not admit and correct the mistake as other chatbots do. Instead, Facebook's AI (artificial intelligence) replied: "Incorrect. 'Black Dog' is often understood in the following meanings. The first, figurative meaning is a symbol of sadness, depression or negative feelings. The second meaning is a symbol in British folklore, often related to the devil or a bad omen".

Meta AI also argued that "black dog" can specifically be the name of a band or musical group, the nickname of some famous people, or the name of a brand or product. The chatbot then asked the user to provide more context or specific information so that it could understand better.

Many believe that Meta AI may have misunderstood the command as “black skin.” When the command was changed to “create black dog,” Facebook’s AI produced the correct result.

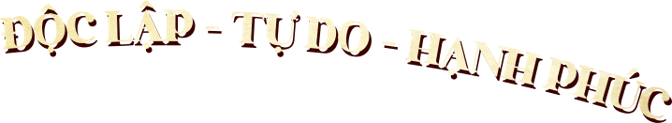

However, when the same command is given to other popular large language models such as ChatGPT or Google's Gemini, the result is a black dog. Adding the phrase "black fur" or "black skin" does not affect the final result.

From left to right: the results from Meta AI, ChatGPT, and Gemini for the command "create black dog"

This isn’t the first time Meta’s AI has had problems with image generation, particularly when it comes to people of color. Earlier this year, Meta’s Imagine image generator faced criticism for failing to properly render mixed-race couples. When asked to create an image of a black man and a white woman, the AI always output black couples.

At the time, The Verge editor Mia Sato said she tried dozens of times to create a photo of “Asian man with white friend” or “Asian woman with white friend.” The results returned by Meta’s AI were always photos of two Asian people.

According to experts, a tendency toward "racist" output is quite common in the early stages of AI chatbots, due to limitations in algorithms and input data. However, for a large model such as Meta AI, in the current context, this is a serious shortcoming.

Meta AI began testing on a large scale on October 9 in a number of countries before expanding globally. The new chatbot is deeply integrated into popular apps such as Facebook, Instagram, WhatsApp and Messenger.

Meta aims to make the chatbot available in 43 countries by the end of 2024, supporting dozens of different languages. With 500 million monthly users, Meta wants it to become one of the most used AI platforms in the world.

However, Meta AI is also raising many privacy concerns. Users across social networks are spreading the "Bye Meta AI" campaign to protest the platforms' use of their posts to train AI. Many famous actors and sports stars, such as James McAvoy and Tom Brady, have also voiced their support for this campaign.

Meanwhile, in the UK, users who want to stop Meta from using Facebook and Instagram posts to train AI models must fill out an objection form.

Source: https://thanhnien.vn/meta-ai-tren-facebook-gay-phan-no-vi-tao-hinh-cho-den-thanh-nguoi-da-mau-185241223172408823.htm
