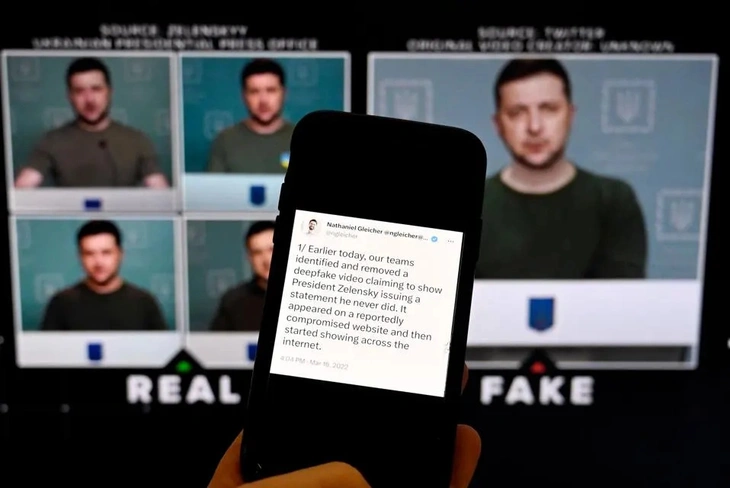

A deepfake video of Ukrainian President Volodymyr Zelensky giving a speech. Photo: AFP

Citing Resemble AI's Q1 2025 report, Variety reported that losses caused by deepfakes in the first quarter of 2025 exceeded $200 million. The damage came mainly from financial fraud, political manipulation, extortion, and the spread of AI-generated sensitive content for profit.

The report describes this as an "escalating crisis", with increasingly sophisticated attacks that exceed the detection capabilities of ordinary users.

Who are the victims of deepfakes?

Deepfakes started out as a fun technological gimmick. But in just a few years, they have become a powerful tool in cross-border fraud campaigns.

According to Resemble AI, deepfakes currently appear most often as video (46%), followed by images (32%) and audio (22%).

Worryingly, with just 3 to 5 seconds of recorded audio, scammers can create a fake voice convincing enough to fool relatives, business partners, or even automated verification systems.

Worldwide, South Korea is one of the places where the problem is most severe, with many chat groups specializing in grafting idols' faces onto pornographic content for sale. Photo: X

In addition, 68% of current deepfakes are rated as "nearly indistinguishable from real content", which makes them extremely difficult to detect, even for technology experts.

Combining images and sound in a synchronized video makes the impersonations so believable that people find it hard to realize they are being exploited.

The report found that 41% of victims were public figures, mainly politicians and celebrities, while 34% were ordinary citizens.

The threat of deepfakes is no longer limited to celebrities but has spread to all walks of life. Financial institutions, businesses, and government agencies are also being targeted.

North America accounted for 38% of the cases, most of which involved political figures. Asia accounted for 27%, and Europe for 21%.

Some 63% of attacks had a cross-border element, showing that deepfakes are not just a domestic problem but have become a global threat.

Fraud is getting more sophisticated globally

Among deepfake abuses, sensitive content is the most common form, severely damaging victims' reputations and mental well-being.

Next are financial scams, such as impersonating a boss to request money transfers, pretending to be a relative in need of urgent help, or even staging a video call to verify a fake identity.

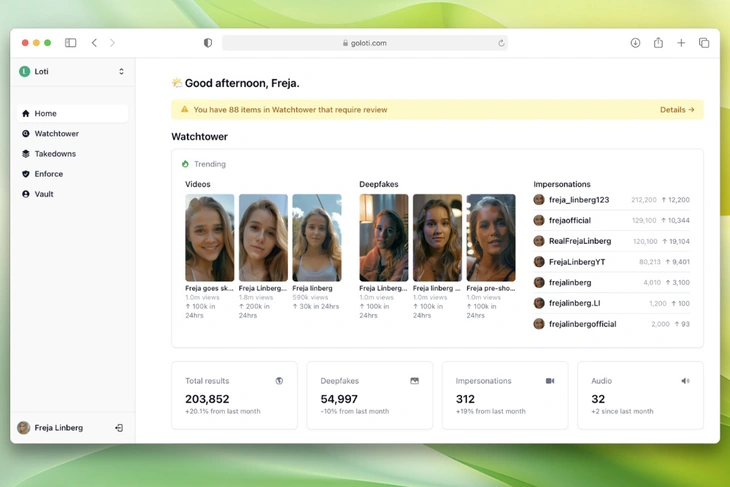

Meanwhile, anti-deepfake applications are also developing rapidly. Pictured: Loti AI, which recently raised more than $16 million. Photo: Variety

Another area hit hard by deepfakes is politics, with videos of leaders being edited to confuse public opinion or manipulate information during election season.

The technology also amplifies fake news and misinformation: because the content looks convincing, it spreads faster.

“The fight against deepfakes requires a multi-layered and global response,” a Resemble AI representative emphasized in the announcement.

In particular, technological solutions must take the lead, including developing more effective deepfake detection systems, standardizing watermark-based content authentication protocols, and building traceable digital verification mechanisms.

The report also calls for governments to step up efforts to create a unified legal framework, clearly define harmful deepfakes, hold platforms that host them accountable, and establish effective sanctions.

Raising public awareness, especially through digital literacy and media education, is also seen as key to better public protection.

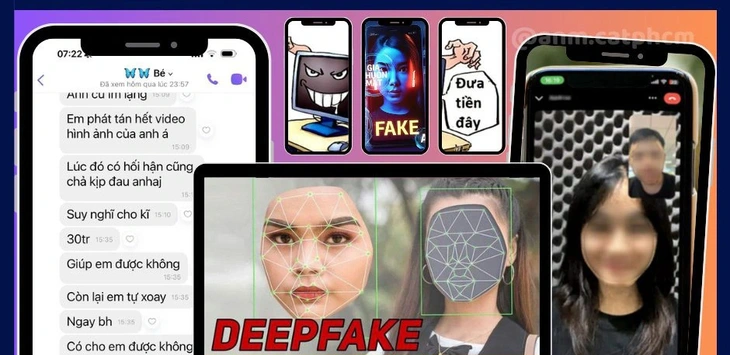

In Vietnam, although the methods are less sophisticated, many people have already fallen victim. The Department of Cyber Security and High-Tech Crime Prevention has repeatedly issued warnings. Photo: PA05

In Vietnam, deepfake scams are also rising markedly, and many cases have caused alarm in the online community.

Some victims said they received video calls in which the voice and manner of speaking were identical to those of their relatives, so they suspected nothing.

Once trust is gained, the scammer continues to ask for "urgent help" such as transferring money, providing authentication codes, or borrowing bank accounts.

Faced with this situation, technology experts warn that deepfakes are becoming an extremely dangerous fraud weapon and that Vietnam needs to promptly standardize its legal framework to protect users.


TO CUONG

Source: https://tuoitre.vn/deepfake-gay-thiet-hai-hon-200-trieu-usd-3-thang-dau-nam-2025-ai-cung-co-the-la-nan-nhan-20250419185910057.htm
