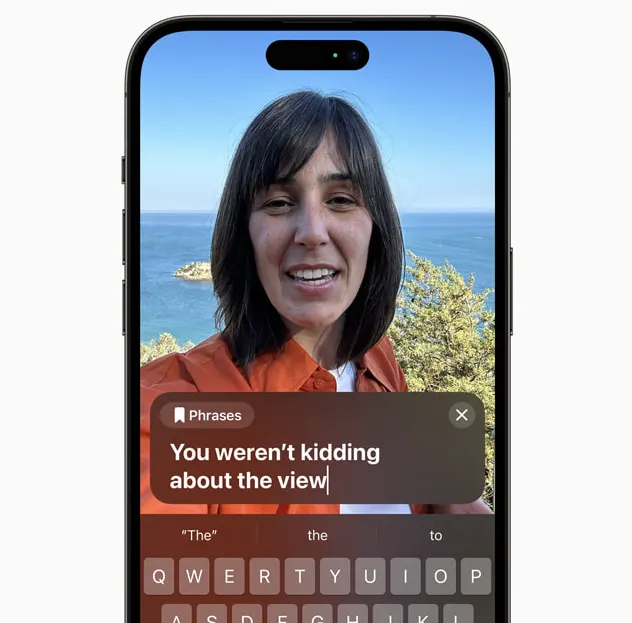

Ahead of WWDC 2023, Apple previewed some of the accessibility features coming in iOS 17. The “Personal Voice” feature lets iPhone and iPad users create a digital version of their own voice to use in live conversations, phone calls, or FaceTime calls.

According to Apple, Personal Voice creates a synthesized voice that sounds like the user’s own, which they can use to communicate with family and friends. It is intended for people who are at risk of losing their ability to speak.

To set it up, users record about 15 minutes of audio of their own voice. On-device machine learning on the iPhone or iPad then synthesizes a digital version of that voice, keeping the user’s information private.

These features are part of a broader set of accessibility improvements for iOS devices. A new Assistive Access feature makes it easier for people with cognitive disabilities, and their caregivers, to use their devices. Apple also announced a feature that combines camera input, the LiDAR Scanner, and machine learning to read aloud text on physical objects the user points at.

Apple typically releases beta versions of each new iOS release at WWDC for developers and early adopters. The betas run through the summer, and the final version ships in the fall alongside new iPhones.

WWDC 2023 starts on June 5. Apple is expected to introduce its first virtual reality headset alongside other software and hardware updates.

(According to CNBC)


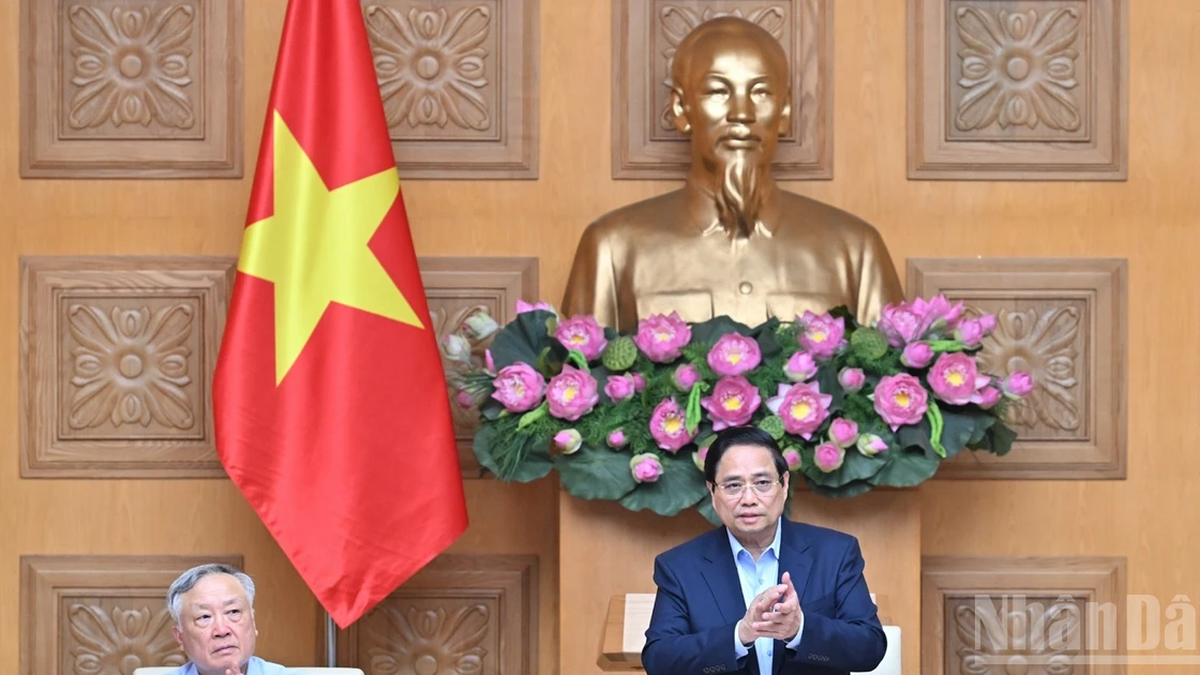
