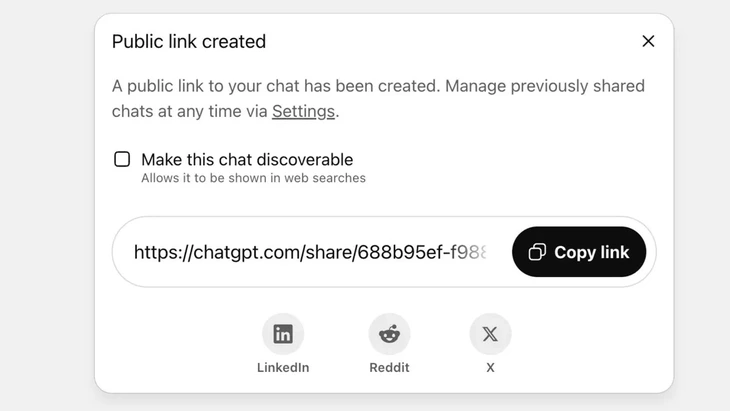

The feature for sharing chats via a public link on ChatGPT - Photo: TechRadar

ChatGPT now handles more than 2.5 billion queries a day from users worldwide, a sign of the chatbot's growing popularity.

However, the tool is facing strong backlash from users after its “sharing” feature caused thousands of conversations, some containing sensitive information, to surface on Google and other search engines.

High security risk

Mr. Vu Ngoc Son, head of technology at the National Cyber Security Association (NCA), said the incident shows a high level of security risk for ChatGPT users.

“The incident is not necessarily a technical flaw, since users took some initiative themselves in clicking the share button. However, the problem can be said to lie in the design of the AI chatbot product, which confused users and lacked sufficiently strong warnings about the risk of personal data being leaked when they share,” Mr. Vu Ngoc Son said.

On ChatGPT, when a user chooses to share a chat via a public link, the content is stored on OpenAI's servers as a public web page (chatgpt.com/share/...) that requires no login or password to access.

Google's crawlers automatically scan and index these pages, so they appear in search results along with any sensitive text, images, or chat data they contain.
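To illustrate the mechanism: search crawlers generally treat a publicly reachable page as indexable unless it carries a `noindex` directive, either in an `X-Robots-Tag` response header or in a `<meta name="robots">` tag. The Python sketch below checks for both. The names `RobotsMetaParser` and `is_indexable` are hypothetical, the check is deliberately simplified, and it makes no claim about how OpenAI's shared pages were actually configured.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_indexable(headers, html):
    """Return False if the page opts out of indexing, True otherwise.

    Simplification (assumption): crawler-specific forms such as
    "googlebot: noindex" in X-Robots-Tag are not handled here.
    """
    header_value = ""
    for key, value in headers.items():
        if key.lower() == "x-robots-tag":
            header_value = value
    header_directives = {p.strip().lower() for p in header_value.split(",")}

    parser = RobotsMetaParser()
    parser.feed(html)

    blocked = {"noindex", "none"}
    return not (blocked & (header_directives | parser.directives))
```

A page served with no such directive, for example `is_indexable({}, "<html><body>chat</body></html>")`, is treated as indexable, which is why a public link with no access control can end up in search results.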

Many users were unaware of the risk, thinking they were sharing the chat only with friends or contacts. This led to thousands of conversations being exposed, in some cases containing sensitive personal information.

Although OpenAI quickly removed the feature in late July 2025 after community backlash, it still took time to coordinate with Google to remove the old index entries. Given the complexity of the storage systems involved, including Google's cache servers, this cannot be done quickly.

Don't treat AI chatbots as a "safety black box"

Data security expert Vu Ngoc Son - head of technology at the National Cyber Security Association (NCA) - Photo: CHI HIEU

The leak of thousands of chat logs can expose users to risks such as the disclosure of personal and business secrets, reputational damage, financial loss, and even safety hazards if home addresses are revealed.

“AI chatbots are useful, but they are not a secure ‘black box’: shared data can persist on the web indefinitely if left unchecked.

The incident is certainly a lesson for both providers and users. Other AI service providers can learn from this experience and design features with clearer and more transparent warnings.

At the same time, users also need to proactively limit the uncontrolled posting of identifying or personal information on AI platforms,” security expert Vu Ngoc Son recommended.

According to data security experts, the incident shows the need for a legal framework and cybersecurity standards for AI. AI suppliers and developers also need to design systems that ensure security and avoid data leakage risks such as leaks through serious vulnerabilities, software vulnerabilities that lead to database attacks, and poor controls that allow data poisoning or abuse of the model to produce false and distorted answers.

Users also need to control what personal information they share with AI and avoid sharing sensitive information. When sharing is genuinely necessary, it is advisable to use anonymous mode or proactively encrypt the information so that the data cannot be linked directly to specific individuals.
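As a rough illustration of limiting what is shared, the Python sketch below masks email addresses and phone numbers in a message before it is pasted into a chatbot. This is simple redaction, not encryption. The `redact` function and both patterns are hypothetical and deliberately simplistic: they will miss many forms of personal data (names, addresses, ID numbers) and may occasionally mask harmless digit sequences.

```python
import re

# Simplistic patterns, assumed for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# A digit run of at least 9 characters, optionally starting with "+",
# allowing spaces, dots, hyphens and parentheses in between.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    # Emails are masked first so digits inside them are not treated as phones.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    message = "Contact me at an.nguyen@example.com or +84 912 345 678."
    print(redact(message))
```

Running the script prints `Contact me at [EMAIL] or [PHONE].`, so the chatbot, and any page later shared from the conversation, never receives the original contact details.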

Source: https://tuoitre.vn/hang-ngan-cuoc-tro-chuyen-voi-chatgpt-bi-lo-tren-google-nguoi-dung-luu-y-gi-20250805152646255.htm
