At the recent Google I/O event, Google launched Gemini 1.5, an artificial intelligence (AI) model with many new features, including the ability to analyze code, text, audio recordings, and videos of greater length than the previous version could handle.

Detecting suspicious words

In particular, Gemini 1.5 Pro is expected to be built into Gmail, Google Docs... in the near future, becoming a multi-purpose tool in Workspace that helps users pull any information they need from Drive. Another AI model, Gemini Live, lets users interact with their smartphones using natural speech... At this event, Google also attracted attention by bringing an AI model called Gemini Nano to Android smartphones, with a feature that detects suspicious words in conversations to alert users to scam calls. Gemini Nano is currently integrated into the Pixel 8 Pro and the Galaxy S24 series.

Sharing their opinions on social networks, users said they expect Gemini Nano to gradually become more common across Android devices on the market. However, some expressed concern that call data could be exposed once permissions are granted to Google. In response, Google said that call data is protected and stored only on the user's phone. Users can turn the scam call warning on or off. Gemini Nano operates independently and does not connect to the internet, so neither Google nor third parties can access the data.

Earlier, OpenAI announced a new AI model called GPT-4o, which is said to make ChatGPT five times more powerful. By optimizing its algorithms and training methods, GPT-4o is expected to provide more accurate answers and maintain high consistency in long conversations. It also minimizes common errors and improves contextual understanding, helping the model respond more accurately to complex questions... OpenAI said that the GPT-4o interface ensures that interactions and personal information exchanged between users and the AI are processed securely.

Meta, the parent company of Facebook and Instagram, announced that it will stop developing its Workplace business platform to focus on AI products and the Metaverse. According to technology observers, Meta's upcoming AI projects will give users a better experience through a personalized advertising platform, relevant content recommendations, live translation in many languages... At the same time, the company is expected to build privacy policies to protect user information.

At a recent event, Microsoft introduced a new feature called "Recall", which the company also calls AI Explorer. This AI tool will be integrated into Copilot+ PCs and helps users keep track of everything done on the computer, from web browsing to voice chats, creating a history stored on the machine that users can search when they need to recall what they did before. Recall also presents this data in a visual timeline, allowing users to scroll through and explore all activity on the computer. The accompanying Live Captions feature helps users search online meetings and videos, and transcribe and translate speech conveniently... Microsoft promises that Recall will run privately on the device so that user data stays secure. Users can pause, stop, or delete captured content, or choose to exclude specific applications or websites...

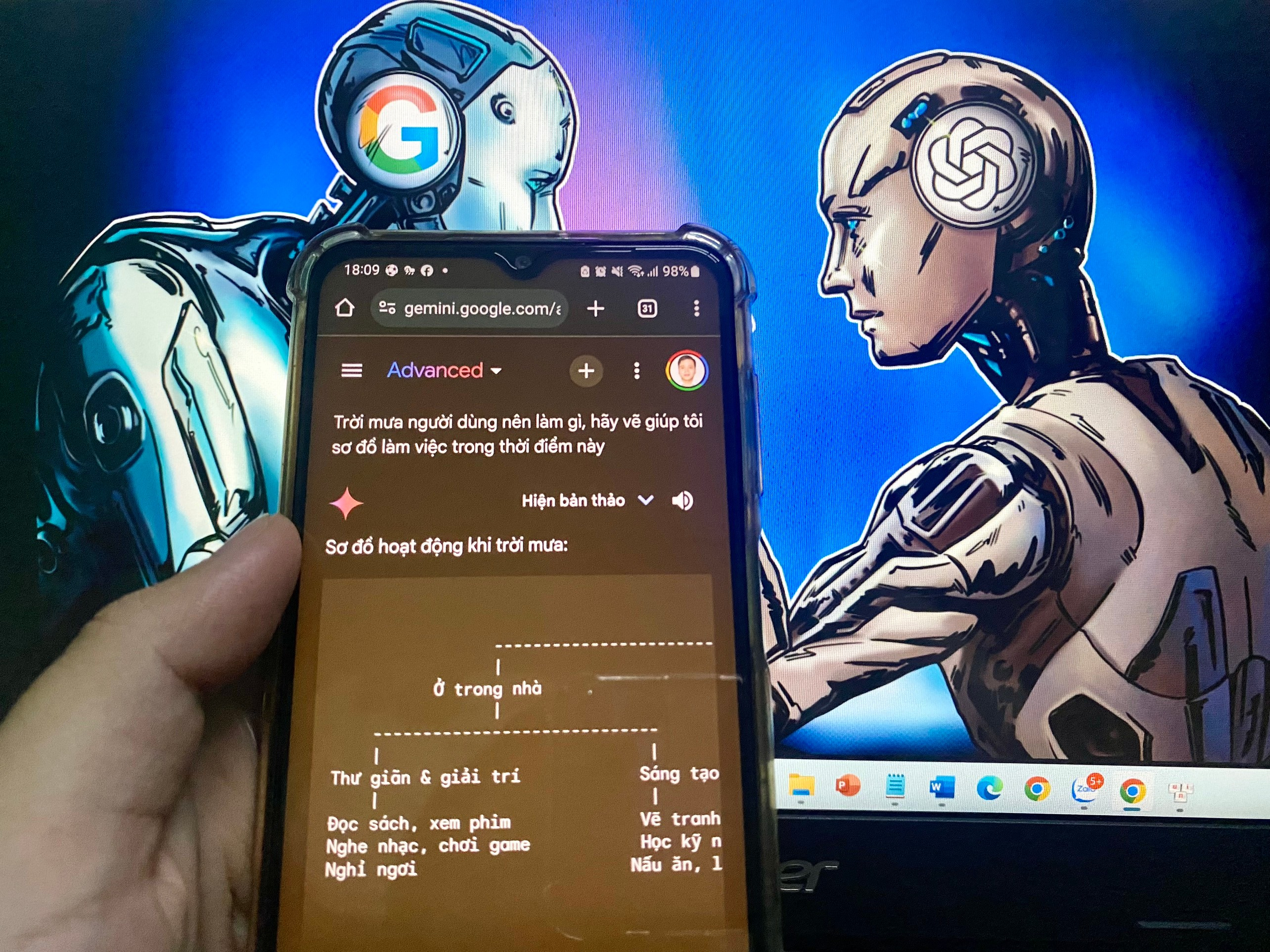

Gemini 1.5 Pro answers user questions in the form of diagrams in just seconds. Photo AI: Le Tinh

Reminding and warning users

According to security expert Pham Dinh Thang, AI has become more intelligent through its interactions with users. As a result, AI models will keep changing rapidly and become more streamlined, but this also increases risks to users: faked faces (deepfakes) and cloned voices will be harder to detect because they appear so authentic.

Therefore, technology companies such as Google or OpenAI... must focus on protecting users' data security. "You cannot put all your trust in the data security commitments of technology companies. Users need to protect themselves first. Do not visit unfamiliar AI websites of unknown origin, because the rate of malware is very high. Do not access information that is not related to you. At the same time, regularly keep up with news about AI fraud tricks," Mr. Thang recommends.

Meanwhile, Mr. Huynh Trong Thua, an information security expert, said that users of AI products should not worry too much about their data, because companies collect personal data in order to analyze it, provide warnings, and suggest appropriate keywords to personalize the experience. "The rate of fraud will decrease a lot after Google and OpenAI apply new models. The fraud warning is a strong reminder that will make users stop before the sweet invitation of the scammer," Mr. Thua said.

According to technology experts, technology companies are moving toward more modern encryption to protect user data. At the same time, upgraded versions give users more control over their personal data, including the ability to manage, edit, and delete it, and to grant or refuse the platform permission to use it.

According to experts, in response to concerns about AI tools being used for fraud, regulators need to carefully consider developing policies and flexible mechanisms to control and manage how AI tools operate. This will create the conditions for AI applications to develop sustainably and responsibly.

Source: https://nld.com.vn/ai-nang-cap-giup-phat-hien-bat-thuong-196240521203710228.htm
