Nvidia's revenue rose to $44.1 billion last quarter, but one of the chip giant's most important metrics isn't money.

Instead, it's tokens. Throughout May, the CEOs of leading tech companies, which are also among Nvidia's biggest customers, have expressed excitement about the growth in tokens.

“OpenAI, Microsoft, and Google are seeing a quantum leap in token creation capabilities. Microsoft processed over 100 trillion tokens in Q1, a five-fold increase year-over-year,” said Colette Kress, Nvidia’s chief financial officer.

The most basic unit in AI

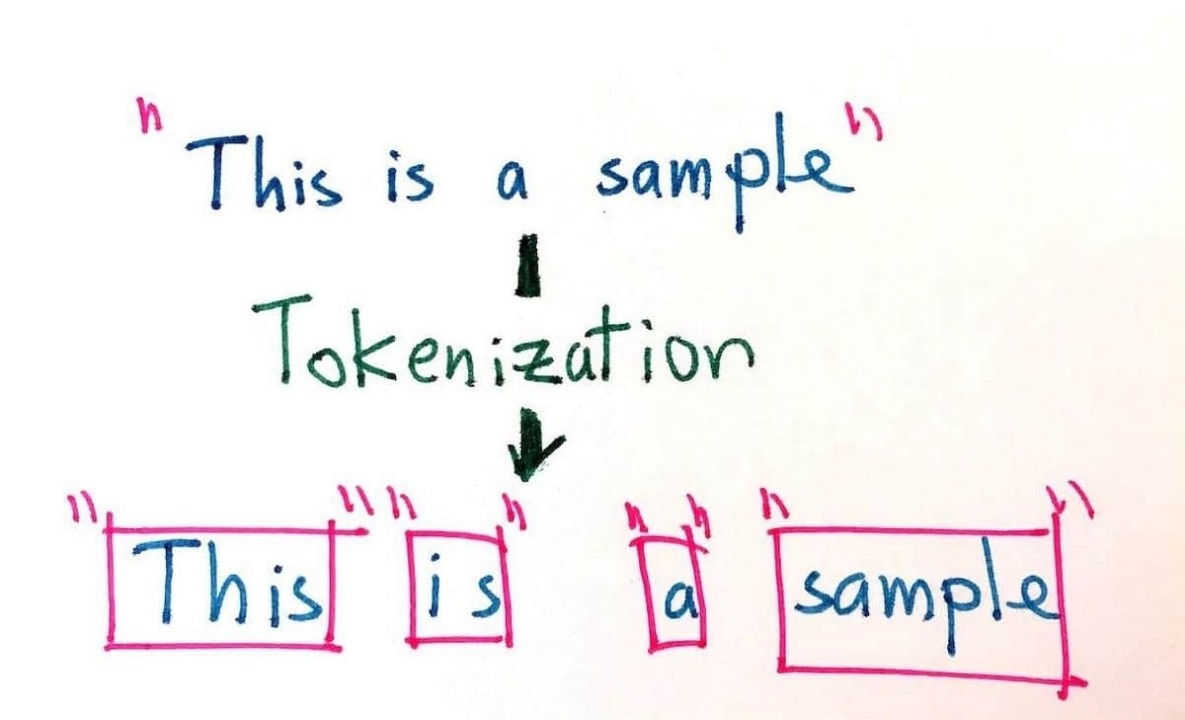

In the world of artificial intelligence (AI), tokens are one of the most fundamental building blocks behind computers' ability to process language. Tokens can be thought of as Lego pieces that AI models use to build meaningful sentences, ideas, and interactions.

Whether it's a word, a punctuation mark, or even a snippet of audio in speech recognition, tokens are the tiny building blocks that allow AI to understand and create content. In other words, they're the behind-the-scenes team that makes everything from text generation to sentiment analysis work.

The strength of tokenization comes from its flexibility. Common words are often represented by a single token, but unusual or newly coined words can be broken down into smaller pieces (subwords) that the model already knows. This way, the AI still works smoothly, even with unfamiliar terms.
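To make the subword idea concrete, here is a minimal sketch that splits words by greedy longest-match against a small vocabulary, in the spirit of WordPiece-style tokenizers. The vocabulary `VOCAB` and the functions `tokenize_word` and `tokenize` are hypothetical and chosen for illustration; models such as GPT-4 learn their vocabularies from data and use a different algorithm (byte-pair encoding), so treat this as an illustration of the principle rather than how any particular model works.

```python
# Hypothetical toy vocabulary; real LLM vocabularies are learned from data and far larger.
VOCAB = {"token", "iz", "ation", "the", "is", "flex", "ible", "un", "believ", "able"}

def tokenize_word(word, vocab):
    """Split one word into subwords by greedy longest-match from the left."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate first, shrinking until a vocabulary match is found.
        end = len(word)
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No subword matches: fall back to a single character so nothing is lost.
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces

def tokenize(text, vocab):
    """Lowercase the text, split on whitespace, then split each word into subwords."""
    tokens = []
    for word in text.lower().split():
        tokens.extend(tokenize_word(word, vocab))
    return tokens

print(tokenize("the tokenization", VOCAB))  # ['the', 'token', 'iz', 'ation']
print(tokenize("Unbelievable", VOCAB))      # ['un', 'believ', 'able']
```

A word that is already in the vocabulary stays whole, while an unfamiliar word such as "unbelievable" is assembled from known pieces, which is the flexibility described above.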

Tokens are one of the most fundamental building blocks behind computers' ability to process language. Photo: CCN.

Modern models such as GPT-4 work with large vocabularies; GPT-4's tokenizer has roughly 100,000 tokens. Every input text must first be split into tokens, a step called tokenization, before the model can process it.

This step is important because it standardizes how the AI model interprets and generates text: the same text always becomes the same sequence of tokens. By breaking language into smaller, consistent units, tokenization lets the model handle language tasks accurately and efficiently.
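Under the hood, each token in the vocabulary is assigned a fixed integer ID, and the model works with those numbers rather than with raw text. The sketch below shows that mapping with a hypothetical eight-entry vocabulary (`VOCAB`, `encode`, `decode` and the `<unk>` placeholder are all illustrative assumptions); real tokenizers have tens of thousands of entries and many avoid unknown tokens entirely by falling back to bytes.

```python
# Hypothetical vocabulary: the position of each token is its fixed integer ID.
VOCAB = ["<unk>", "the", "token", "iz", "ation", "is", "fast", "."]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}
UNK_ID = TOKEN_TO_ID["<unk>"]

def encode(tokens):
    """Map tokens to IDs; tokens outside the vocabulary become the <unk> ID."""
    return [TOKEN_TO_ID.get(tok, UNK_ID) for tok in tokens]

def decode(ids):
    """Map IDs back to their token strings."""
    return [VOCAB[i] for i in ids]

ids = encode(["the", "token", "iz", "ation", "is", "fast", "."])
print(ids)          # [1, 2, 3, 4, 5, 6, 7]
print(decode(ids))  # ['the', 'token', 'iz', 'ation', 'is', 'fast', '.']
```

Because the mapping is deterministic, the same input always produces the same IDs, which is the standardization the paragraph above refers to.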

Without this process, modern AI could not reach its full potential. As AI tools continue to evolve, the number of tokens generated during inference, when a model produces output, is growing faster than many expected.

“Explosive token growth is what really matters, long term,” Morgan Stanley analysts said.

Why are tokens important?

Among industry leaders, Nvidia CEO Jensen Huang sees the rise in tokens as a sign that AI tools are delivering value.

“Companies are starting to talk about how many tokens they created last quarter and how many tokens they created last month. Very soon we will be talking about how many tokens are created per hour, just like every factory does,” Huang said at Computex 2025, one of the world’s largest technology trade shows, particularly for computers and peripherals.
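Huang's "tokens per hour" framing can be applied to the figure Kress cited earlier. The back-of-the-envelope sketch below assumes the quarter lasted about 90 days (the article does not say which calendar Microsoft's Q1 follows) and that tokens were processed evenly over that period; because Microsoft processed "over" 100 trillion tokens, the result is a floor rather than an exact rate. The constant names and the `tokens_per_hour` helper are illustrative.

```python
# Figures from the article: Microsoft processed over 100 trillion tokens in Q1,
# a five-fold increase year over year.
# Assumption: the quarter lasted about 90 days; the article does not specify.
TOKENS_IN_QUARTER = 100e12  # a lower bound ("over 100 trillion")
DAYS_IN_QUARTER = 90
YOY_MULTIPLE = 5

def tokens_per_hour(total_tokens, days):
    """Average hourly rate, assuming tokens were produced evenly over the period."""
    return total_tokens / (days * 24)

rate = tokens_per_hour(TOKENS_IN_QUARTER, DAYS_IN_QUARTER)
year_ago = TOKENS_IN_QUARTER / YOY_MULTIPLE  # implied volume in the same quarter a year earlier

print(f"~{rate / 1e9:.1f} billion tokens per hour")              # ~46.3 billion tokens per hour
print(f"~{year_ago / 1e12:.0f} trillion tokens a year earlier")  # ~20 trillion tokens a year earlier
```

On these assumptions, Microsoft alone averaged at least roughly 46 billion tokens per hour, up from about 20 trillion tokens in the year-earlier quarter.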

Tokens help AI systems analyze and understand language, powering everything from text generation to sentiment analysis. Google Translate is a prime example of the importance of this unit.

When an AI system translates text from one language to another, it first breaks the text down into tokens. The model then processes these tokens in context, which helps it capture the meaning of each word or phrase so that the translation is contextually accurate rather than merely literal.

Whether it's a word, a punctuation mark, or even a snippet of audio in speech recognition, tokens are the tiny building blocks that allow AI to understand and create content. Photo: Tony Grayson.

Tokens are also effective in helping AI read the sentiment of text. In sentiment analysis, AI assesses the opinion or emotion a text expresses, whether it's a positive product review, negative feedback, or a neutral comment.

By breaking down text into tokens, AI can determine whether a piece of text has a positive, negative, or neutral tone. This is especially useful in marketing or customer service, where understanding how users feel about a product or service can shape future strategies.
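As a rough illustration of classifying sentiment from tokens, the sketch below uses a simple lexicon-based approach: split the text into word tokens, add up a score for each token found in a small list of positive and negative words, and read the label off the sign of the total. The word list `LEXICON` and the functions are hypothetical, and this is a deliberately simplified, swapped-in technique; modern AI models learn sentiment from the context of all the tokens together, which lets them handle cases like "not great" that this sketch would misread.

```python
# Hypothetical sentiment lexicon: +1 for positive words, -1 for negative ones.
LEXICON = {"great": 1, "love": 1, "fast": 1, "bad": -1, "slow": -1, "broken": -1}

def tokenize(text):
    """Simple word-level tokenizer: lowercase, replace punctuation with spaces, split."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
    return cleaned.split()

def sentiment(text):
    """Label text positive, negative or neutral from the sum of its token scores."""
    score = sum(LEXICON.get(tok, 0) for tok in tokenize(text))
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love it, shipping was fast!"))  # positive
print(sentiment("The screen arrived broken."))     # negative
print(sentiment("It arrived on Tuesday."))         # neutral
```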

Tokens also enable AI to capture subtle emotional cues in language, helping businesses act quickly on feedback or emerging trends.

As AI systems become more powerful, tokenization techniques will also need to evolve to meet the growing demands for efficiency, accuracy, and flexibility.

A key focus is speed: future tokenization methods should process text faster, allowing AI models to respond in real time while handling larger datasets.

More importantly, the future of tokenization is not limited to text. Multimodal tokenization will broaden what AI can do by integrating diverse data types such as images, videos, and audio.
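One widely used way to tokenize images, popularized by Vision Transformers, is to cut the image into fixed-size square patches and treat each patch as a token. The sketch below shows only that splitting step on a small grayscale image stored as a list of rows of pixel values; the function name `image_to_patches` and the grayscale input are assumptions for illustration. In a real model each flattened patch would then be projected into an embedding vector, and the approach used by any specific product may differ.

```python
def image_to_patches(image, patch_size):
    """Split a 2D grid of pixel values into non-overlapping square patches.

    Each patch is flattened into a list, and patches are returned in row-major
    order (left to right, top to bottom), so each one can act as an image "token".
    """
    height = len(image)
    width = len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# A 4x4 "image" whose pixel values are 0..15, split into 2x2 patches.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, 2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```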

Imagine an AI that can seamlessly analyze a photo, extract key details, and create a narrative. To do this, the system needs an improved tokenization process. This innovation could transform areas like education, healthcare, and entertainment by providing more comprehensive insights.

Source: https://znews.vn/khong-phai-tien-day-moi-la-chi-so-quan-trong-nhat-voi-nvidia-post1557810.html
