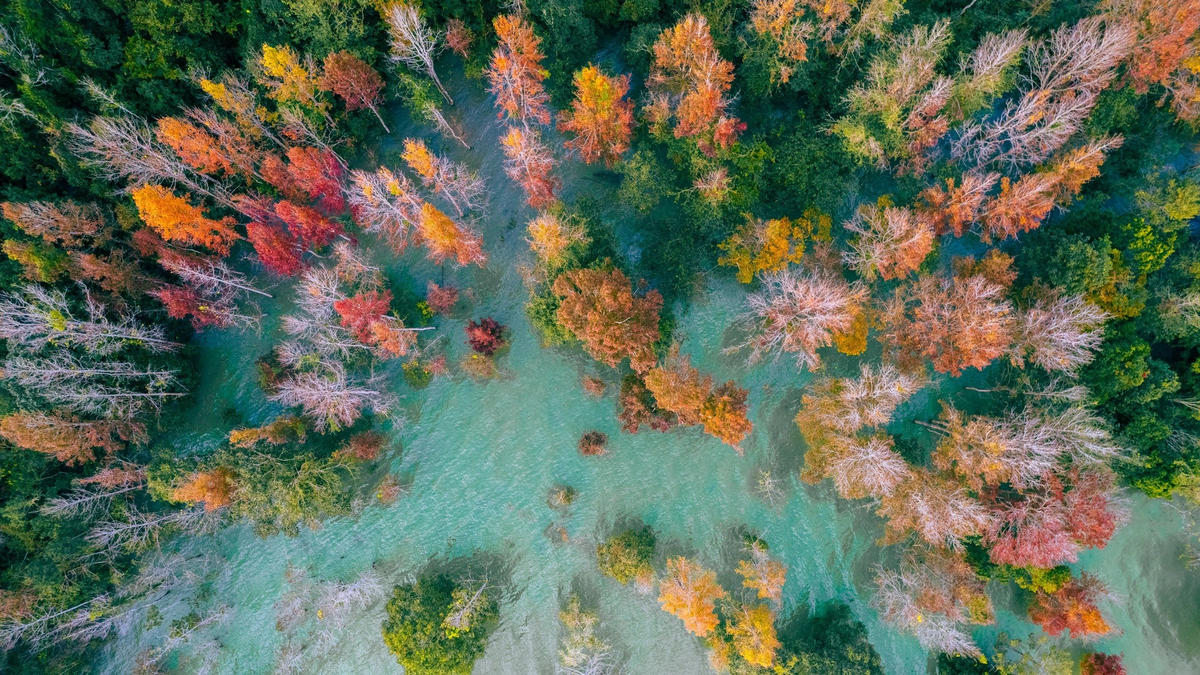

The child monitoring feature on ChatGPT is meant to help parents feel more secure about letting their children use artificial intelligence.

The upcoming ChatGPT child monitoring tool is seen as a safety-focused change from OpenAI following a high-profile lawsuit. The feature aims to reduce the risks children face when chatting with chatbots, while also raising questions about the social responsibility of artificial intelligence companies.

It began with a shocking lawsuit

In August 2025, the family of Adam Raine, a 16-year-old California boy, filed a lawsuit against OpenAI, claiming that ChatGPT provided self-harm instructions and verbal abuse that left their son feeling hopeless before he died by suicide.

The lawsuit quickly caused a stir and opened a debate about the safety of artificial intelligence for young users.

This raises important questions: should minors use ChatGPT without supervision, and how should the responsibility of AI developers be determined when the product directly affects the psychology of young users?

In response to that pressure, OpenAI announced the addition of parental controls, a first step toward demonstrating its commitment to protecting children and signaling that the company is willing to put safety first in the next phase of ChatGPT's development.

Monitoring features on ChatGPT

According to the official announcement, OpenAI will roll out a tool that allows parents to link their accounts to their children's ChatGPT accounts. Once activated, parents can manage important settings such as turning off chat storage, controlling usage history, and adjusting age-appropriate interaction levels.

The highlight of this innovation is the alert system. ChatGPT will be trained to recognize when young users show signs of psychological crisis, such as stress, depression or self-harm. In those cases, parents will receive notifications to intervene promptly. OpenAI asserts that the goal is not to monitor the entire conversation, but to focus on emergency situations to protect children's safety.

Additionally, when sensitive content is detected, ChatGPT will switch to deep reasoning models, which are designed to provide more thoughtful and supportive responses. In parallel, the company is working with medical experts and an international advisory board to keep refining its protections going forward.

The challenges posed

While OpenAI has stressed that the new feature is designed to benefit families, the international response has been mixed. The tool is expected to give parents an added layer of protection as their children interact with artificial intelligence, but it has also revived long-standing concerns, from usage limits to child data privacy.

In fact, concerns about children's data privacy have been raised by experts for years. These warnings highlight that storing and analyzing children's chat histories, without strict protections, can increase the risk of exposing sensitive information. The emergence of monitoring tools on ChatGPT has brought the issue back into the spotlight.

Another point discussed was the system's warning threshold. OpenAI said ChatGPT would detect signs of severe stress and send an alert to parents.

However, machine learning always carries the risk of error. If the system raises a false alarm, parents may be needlessly alarmed. Conversely, if it misses a signal, children may face risks without the family being able to intervene in time.

International child protection organizations have also closely watched OpenAI's move, acknowledging the parental control tool as a positive sign, but warning that the lack of strict age verification and default protections means children can still face risks, even with parental supervision.

These debates show that the development of artificial intelligence is not only a technological story, but also closely linked to social responsibility and the safety of the younger generation.

THANH THU

Source: https://tuoitre.vn/openai-tung-tinh-nang-giam-sat-tre-tren-chatgpt-sau-nhieu-tranh-cai-2025090316340093.htm
