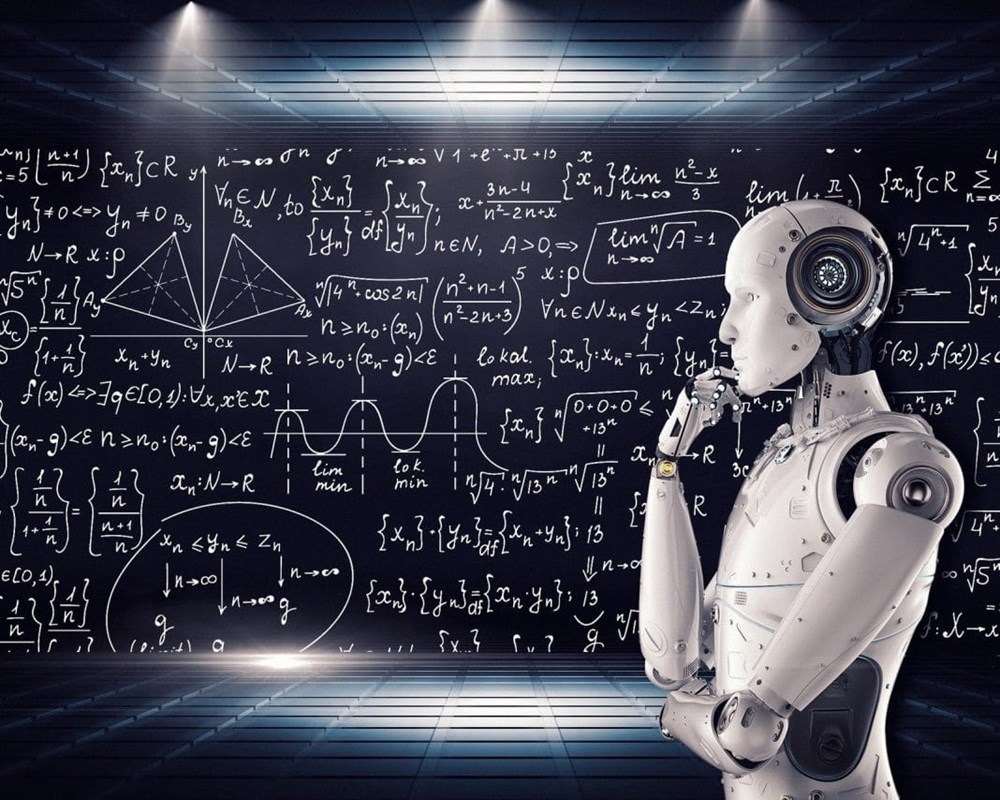

The stronger the model, the weaker the "thinking"?

In a newly published report, Apple researchers evaluated the performance of Large Reasoning Models (LRMs) when dealing with logic problems of increasing difficulty, such as the Tower of Hanoi or the River Crossing problem.

The results were shocking: when faced with highly complex problems, the accuracy of advanced AI models not only declined, but “collapsed completely.”

More worrying still, as problems approach the point where performance collapses, the models begin to reduce their reasoning effort. This is counterintuitive: a harder problem should call for more thinking, not less.

In many cases, even when given the right algorithm, models fail to produce a solution. This shows a profound limit to their ability to adapt and apply rules to new environments.
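For a sense of what "the right algorithm" can look like, the textbook recursive solution to the Tower of Hanoi fits in a few lines. The sketch below is a standard illustration only, not the prompt or code used in Apple's study; the function name `hanoi` and the peg labels "A", "B", "C" are assumptions made for this example. It also shows why difficulty rises so quickly: the shortest solution for n disks takes 2^n - 1 moves.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_peg, to_peg) moves that transfers n disks."""
    if n == 0:
        return []
    return (
        hanoi(n - 1, source, spare, target)    # move the n-1 smaller disks out of the way
        + [(source, target)]                   # move the largest disk to its destination
        + hanoi(n - 1, spare, target, source)  # move the smaller disks back on top of it
    )


# The number of moves doubles (plus one) with every extra disk.
for n in (3, 7, 10):
    print(n, "disks:", len(hanoi(n)), "moves")
```

With three disks the puzzle takes 7 moves; with ten it already takes 1,023, each of which has to be written out correctly.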

The challenge of “general reasoning”

Reacting to the study, American scholar Gary Marcus, one of the voices skeptical about the true capabilities of AI, called Apple's findings "quite devastating".

“Anyone who thinks that large language models (LLMs) are a direct path to AGI is deluding themselves,” he wrote on his personal Substack newsletter.

Andrew Rogoyski, an expert at the Institute for People-Centred AI at the University of Surrey in the UK, shares that view. He said the finding suggests the technology industry may be heading into a "dead end": "When models only work well with simple and medium-difficulty problems, but completely fail when the difficulty increases, it is clear that there is a problem with the current approach."

One particular point Apple highlighted was a lack of “general reasoning,” which is the ability to extend understanding from a specific situation to similar situations.

When unable to transfer knowledge in the way humans typically do, current models are prone to “rote learning”: strong at repeating patterns, but weak at logical or deductive thinking.

In fact, the study found that large reasoning models waste computational resources: they repeatedly arrive at the correct answer on simple problems, yet on slightly more complex ones they choose the wrong solution from the start.

The report tested a range of leading models, including OpenAI’s o3, Google’s Gemini Thinking, Anthropic’s Claude 3.7 Sonnet-Thinking, and DeepSeek-R1. Anthropic, Google, and DeepSeek did not respond to requests for comment, and OpenAI declined to comment.

Apple’s research doesn’t negate AI’s achievements in language, vision, or big data. But it highlights a blind spot that’s been overlooked: the ability to reason meaningfully, which is the core of achieving true intelligence.

Source: https://baovanhoa.vn/nhip-song-so/ai-suy-luan-kem-dan-khi-gap-bai-toan-phuc-tap-141602.html
