OpenAI's new language model builds on its predecessor, GPT-4o, and was scaled up and refined further during training. While it is not OpenAI's most advanced model, GPT-4.5 has broader knowledge, stronger writing skills, and a more refined personality than GPT-4o.

According to benchmarks, GPT-4.5 is a modest upgrade over GPT-4o. On SWE-bench Verified, the model scored 38%, 2-7% higher than GPT-4o but still 30% below OpenAI's o3-based deep research model. For comparison, Anthropic's Claude 3.7 Sonnet scored 62.3% on the same benchmark. On SimpleQA, GPT-4.5 reached 62.5% accuracy, compared with 38.2% for GPT-4o, and it also had the lowest hallucination rate among OpenAI's major language models.

Meeting OpenAI's New Standards

Recently, OpenAI's Preparedness team developed SWE-Lancer, a new benchmark that evaluates large language models on real-world software engineering tasks such as feature development and debugging. On this benchmark, GPT-4.5 solved 20% of the individual contributor (IC) SWE tasks and 44% of the SWE Manager tasks, a slight improvement over the previous model.

In terms of safety, OpenAI's Safety Advisory Group classified GPT-4.5 as medium risk overall, with low risk ratings for cybersecurity and model autonomy.

ChatGPT Pro users can now try a preview of GPT-4.5 by selecting it in the model picker on web, mobile, and desktop. In ChatGPT, the model supports web search, file and image uploads, and canvas. Multimodal features such as Voice Mode, video, and screen sharing will be added later.

GPT-4.5 will be officially available next week to ChatGPT Plus and Team users, as well as to developers on all paid tiers via the Chat Completions API, Assistants API, and Batch API, with support for function calling, Structured Outputs, streaming, and system messages.
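To illustrate how the API features listed above fit together, here is a minimal sketch that only builds a Chat Completions request body and does not send it. It assumes the model identifier `gpt-4.5-preview` (check OpenAI's current model list before relying on it), and the `get_benchmark_score` tool is purely hypothetical. It combines a system message, function calling with strict schema adherence (Structured Outputs), and streaming.

```python
import json

# Assumption: model identifier for GPT-4.5 in the API; verify before use.
MODEL_ID = "gpt-4.5-preview"


def build_chat_request(system_prompt: str, user_prompt: str, stream: bool = True) -> dict:
    """Build (but do not send) a Chat Completions request body."""
    return {
        "model": MODEL_ID,
        "messages": [
            # System message: sets the assistant's overall behavior.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        # Function calling: declares a tool the model may choose to call.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_benchmark_score",  # hypothetical tool
                    "description": "Look up a model's score on a named benchmark.",
                    # Structured Outputs: strict mode asks the model to match
                    # this JSON Schema exactly (all fields required, no extras).
                    "strict": True,
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "model": {"type": "string"},
                            "benchmark": {"type": "string"},
                        },
                        "required": ["model", "benchmark"],
                        "additionalProperties": False,
                    },
                },
            }
        ],
        # Streaming: the response arrives incrementally as server-sent events.
        "stream": stream,
    }


body = build_chat_request(
    "You are a concise assistant.",
    "What did GPT-4.5 score on SWE-bench Verified?",
)
print(json.dumps(body, indent=2))
```

With the official `openai` Python SDK, the same fields could be passed as keyword arguments to `client.chat.completions.create(...)`. Building the body separately like this makes it easy to inspect or log a request before spending API credits.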

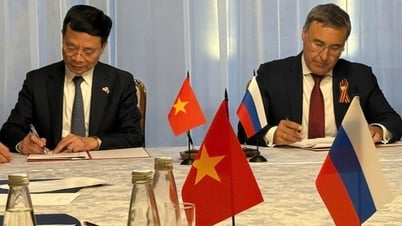
