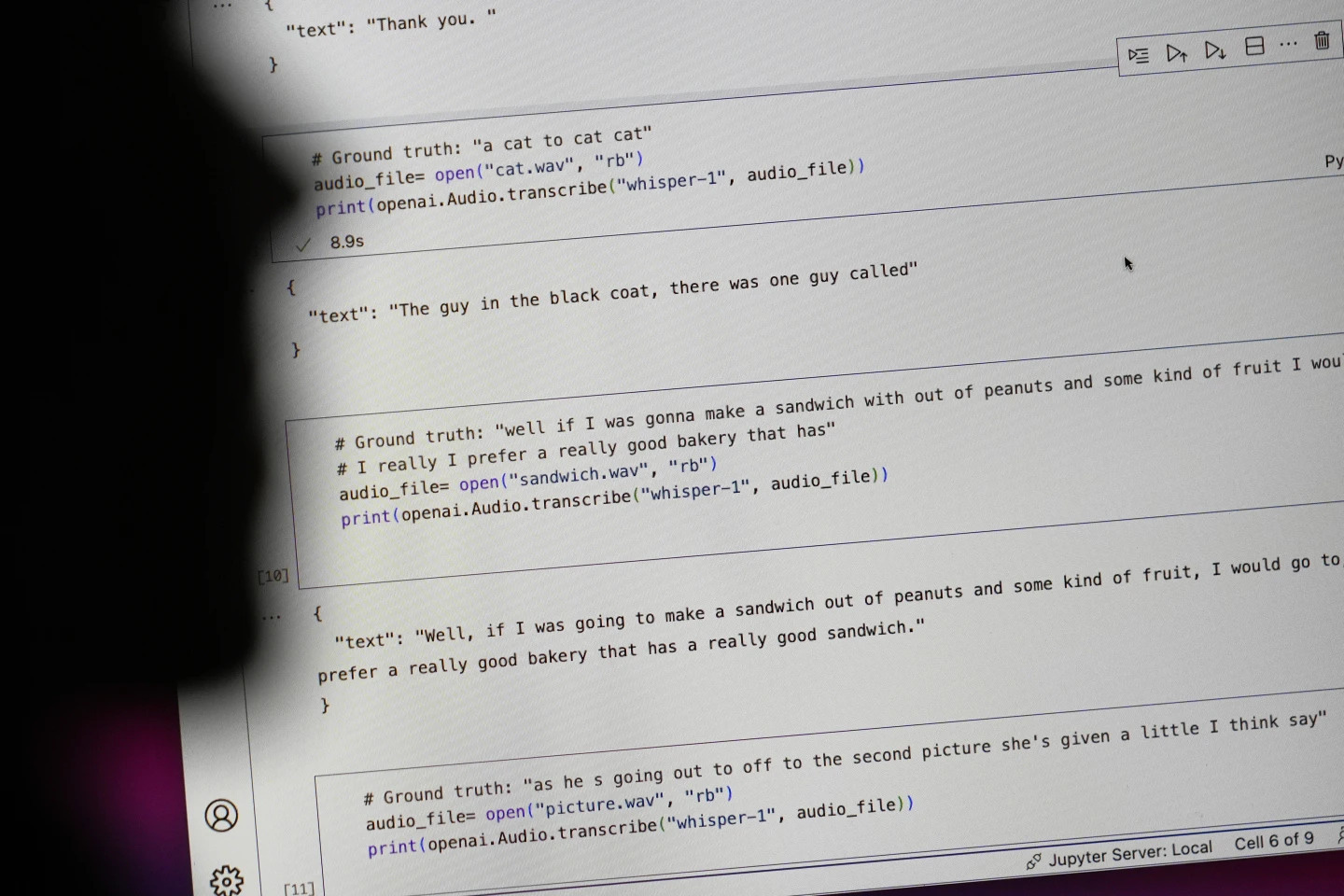

Tech giant OpenAI has touted its speech-to-text tool, Whisper, as an AI with "human-like accuracy and robustness." But Whisper has one major flaw: it invents passages of text, sometimes entire sentences, that were never spoken.

Some of the AI-generated text, known as "hallucinations," can include racial commentary, violent language and even imaginary medical treatments. Photo: AP

According to AP, experts say some of this fabricated text, known in the industry as "hallucinations," includes racial commentary, violent language and even imaginary medical treatments.

High rate of "hallucinations" in AI-generated text

Experts are particularly concerned because Whisper is widely used in many industries around the world to translate and transcribe interviews, generate text in popular consumer technologies and create subtitles for videos.

More worryingly, many medical centers are using Whisper to transcribe consultations between doctors and patients, even though OpenAI has warned that the tool should not be used in “high-risk” areas.

The full extent of the problem is difficult to determine, but researchers and engineers say they regularly encounter Whisper "hallucinations" in their work.

A researcher at the University of Michigan said he found “hallucinations” in eight out of ten audio transcriptions he examined. A computer engineer found “hallucinations” in about half of the transcriptions of more than 100 hours of audio he analyzed. Another developer said he found “hallucinations” in nearly all of the 26,000 transcripts he created using Whisper.

The problem persists even with short, clearly recorded audio samples. A recent study by computer scientists found 187 “hallucinations” in more than 13,000 clear audio clips they examined. At that rate, the researchers said, millions of recordings would yield tens of thousands of faulty transcriptions.
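The researchers' extrapolation can be checked with simple arithmetic. The sketch below is illustrative only: it takes 13,000 as the sample size (the article says "more than 13,000," so the true rate may be slightly lower) and assumes the hallucination rate stays constant across larger sets of recordings. The function name and the recording counts are hypothetical and do not come from the study.

```python
# Figures from the article: 187 hallucinations in "more than 13,000" clips.
# Using exactly 13,000 as the sample size is an assumption; the exact count is not given.
FOUND = 187
SAMPLED = 13_000


def projected_hallucinations(found: int, sampled: int, recordings: int) -> float:
    """Scale an observed rate to a larger set, assuming the rate stays constant."""
    if sampled <= 0:
        raise ValueError("sampled must be positive")
    return found / sampled * recordings


if __name__ == "__main__":
    rate = FOUND / SAMPLED
    print(f"observed rate: {rate:.2%}")
    # Hypothetical corpus sizes, chosen only to illustrate "millions of recordings".
    for recordings in (1_000_000, 5_000_000):
        estimate = projected_hallucinations(FOUND, SAMPLED, recordings)
        print(f"{recordings:,} recordings -> about {estimate:,.0f} hallucinations")
```

Under these assumptions the observed rate is roughly 1.4 percent, or about 14,000 hallucinations per million recordings, which is in line with the researchers' figure of tens of thousands across millions of recordings.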

Such errors can have “very serious consequences,” especially in hospital settings, according to Alondra Nelson, who headed the White House Office of Science and Technology Policy in the Biden administration until last year.

“No one wants a misdiagnosis,” said Nelson, now a professor at the Institute for Advanced Study in Princeton, New Jersey. “There needs to be a higher standard.”

Whisper is also used to create captions for the deaf and hard of hearing, a population that is particularly at risk from faulty transcriptions. This is because deaf and hard of hearing people have no way to identify fabricated passages “hidden in all the other text,” says Christian Vogler, who is deaf and directs the Technology Access Program at Gallaudet University.

OpenAI urged to solve the problem

The prevalence of such “hallucinations” has led experts, advocates, and former OpenAI employees to call on the federal government to consider AI regulations. At a minimum, they say, OpenAI needs to address this flaw.

“This problem is solvable if the company is willing to prioritize it,” said William Saunders, a research engineer in San Francisco who left OpenAI in February over concerns about the company’s direction.

“It would be a problem if you put it out there and people get so confident about what it can do that they integrate it into all these other systems,” Saunders added.

An OpenAI spokesperson said the company is constantly working on ways to mitigate “hallucinations” and appreciates the researchers’ findings, adding that OpenAI incorporates feedback into model updates.

While most developers assume that speech-to-text tools can misspell words or make other mistakes, engineers and researchers say they have never seen another AI-powered transcription tool that "hallucinates" as much as Whisper.

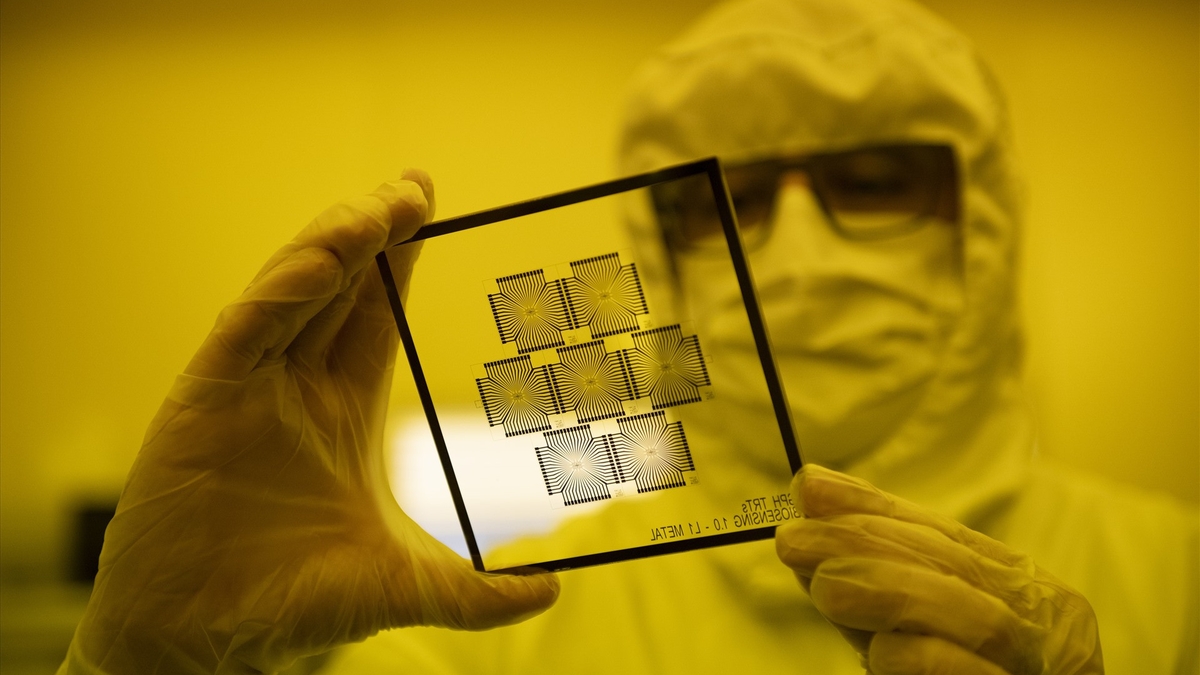

Nobel Prize in Physics 2024: The people who laid the foundations for AI

Source: https://tuoitre.vn/cong-cu-ai-chuyen-loi-noi-thanh-van-ban-cua-openai-bi-phat-hien-bia-chuyen-20241031144507089.htm
