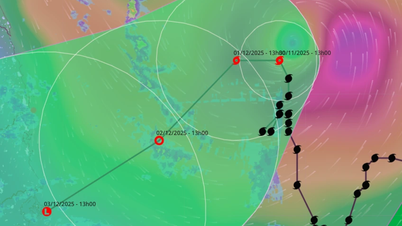

To reach Nobel level, AI needs the ability to self-evaluate and adjust its own reasoning process - Photo: VNU

According to the journal Nature, artificial intelligence (AI) has in recent years shown that it can analyze data, design experiments and propose new scientific hypotheses. This has led many researchers to believe that AI could one day rival the most brilliant minds in science, and even make discoveries worthy of a Nobel Prize.

"AI could win the Nobel Prize by 2030"

In 2016, biologist Hiroaki Kitano, CEO of Sony AI, launched the “Nobel Turing Challenge”: a call to develop an AI system capable of making a Nobel-level scientific discovery on its own. The project’s goal is that by 2050, an “AI scientist” will be able to formulate hypotheses, plan experiments and analyze data without human intervention.

Ross King, a researcher at the University of Cambridge (UK), believes the milestone may come sooner: "It is almost certain that AI systems will reach the level of winning a Nobel Prize. The only question is whether that happens in the next 50 years or the next 10."

However, many experts remain cautious. In their view, current AI models rely mainly on existing data and knowledge and have not yet generated genuinely new understanding. Yolanda Gil, a researcher at the University of Southern California (USA), commented: "If the government invested US$1 billion in basic research tomorrow, progress could accelerate, but we would still be very far from that goal."

To date, Nobel Prizes have been awarded only to people and organizations. AI has nonetheless contributed indirectly: in 2024, the Nobel Prize in Physics went to pioneers of machine learning, and that same year half of the Chemistry Prize went to the researchers behind AlphaFold, Google DeepMind’s AI system that predicts the 3D structure of proteins. But these prizes honored the AI’s creators, not discoveries made by the AI itself.

According to the Nobel Committee's criteria, a prize-worthy discovery must be useful, have far-reaching impact and open up new directions of understanding. To meet this bar, an "AI scientist" would have to operate almost entirely autonomously, from posing questions and choosing experiments to analyzing the results.

AI is already involved in almost every stage of research. New tools help decipher animal sounds, predict collisions between stars and identify immune cells vulnerable to COVID-19. At Carnegie Mellon University, chemist Gabe Gomes’s team developed “Coscientist,” a system that uses large language models (LLMs) to plan and carry out chemical reactions autonomously with robotic equipment.

Companies such as Sakana AI in Tokyo are working to automate machine learning research with LLMs, while Google is experimenting with groups of chatbots that collaborate to generate scientific ideas. In the US, FutureHouse Labs in San Francisco is developing a “reasoning” model that works step by step to help AI ask questions, test hypotheses and design experiments, a step toward the third generation of “scientific AI.”

According to FutureHouse director Sam Rodriques, the final generation will be AI that can ask questions and conduct experiments on its own, without human supervision. He predicts: "AI could make Nobel Prize-worthy discoveries by 2030." The fields with the most potential are materials science and research on Parkinson's and Alzheimer's diseases.

Could AI deprive young scientists of learning opportunities?

Other scientists are skeptical. Doug Downey of the Allen Institute for AI in Seattle says a test of 57 “AI agents” found that only 1% could complete an entire research project, from idea to report. “Automating scientific discovery from start to finish remains a huge challenge,” he says.

In addition, AI models still do not truly understand the laws of nature. One study found that a model could predict planetary orbits without grasping the physical laws that govern them, or could navigate a city without being able to produce an accurate map of it. According to Subbarao Kambhampati of Arizona State University, this shows that AI lacks the real-world experience that humans have.

Yolanda Gil argues that to reach Nobel level, AI needs to be able to “think about thinking”: that is, to evaluate and adjust its own reasoning processes. Without investment in this foundational research, “Nobel-worthy discoveries will remain a long way off,” Gil says.

Meanwhile, some scholars warn of the dangers of over-reliance on AI in science. A 2024 paper by Lisa Messeri (Yale University) and Molly Crockett (Princeton University) argues that overusing AI could lead to more errors and less creativity, leaving scientists to “produce more but understand less.”

“AI could deprive young scientists, who might otherwise go on to win major prizes, of the chance to learn,” Messeri added. “As research budgets shrink, this is a worrying time to be weighing the cost of that future.”

Source: https://tuoitre.vn/ngay-ai-gianh-giai-nobel-se-khong-con-xa-20251007123831679.htm
