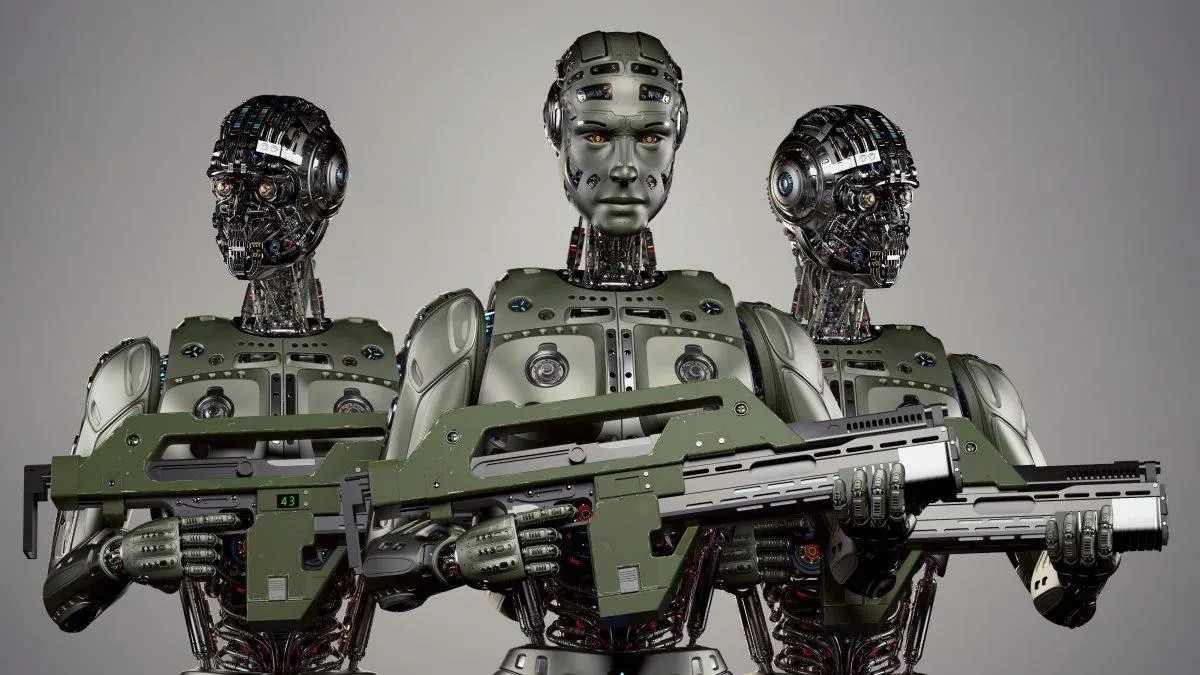

The complex picture of AI-powered killer robots.

Allowing AI to control weapon systems could mean targets are identified, attacked, and destroyed without human intervention. This raises serious legal and ethical questions.

Emphasizing the seriousness of the situation, Austrian Foreign Minister Alexander Schallenberg stated: "This is our generation's Oppenheimer moment."

Robots and weapons powered by artificial intelligence are beginning to be widely used in the militaries of many countries. Photo: Forbes

Indeed, with drones and artificial intelligence (AI) now in widespread use by militaries around the world, the question of how far the "genie is already out of the bottle" has become a pressing one.

GlobalData defense analyst Wilson Jones said: “The use of drones by Russia and Ukraine in modern conflict, the US use of drones in targeted strike operations in Afghanistan and Pakistan, and, as revealed just last month, Israel’s Lavender program all demonstrate how the world’s militaries are actively using AI’s information-processing capabilities to enhance their offensive power.”

Investigations by the London-based Office of War Investigations found that the Israeli military's Lavender AI system was 90% accurate in identifying individuals linked to Hamas, meaning that roughly one in ten of the people it flagged had no such link. These errors in the AI's identification and decision-making resulted in civilian casualties.

A threat to global security

The use of AI in this way underscores the need to regulate how such technology is used in weapons systems.

Dr. Alexander Blanchard, a senior research fellow in the Artificial Intelligence Governance program at the Stockholm International Peace Research Institute (SIPRI), an independent research group focused on global security, explained to Army Technology: “The use of AI in weapons systems, particularly when used for targeting, raises fundamental questions about who we are – humans – and our relationship with war, and more specifically, our assumptions about how we might use violence in armed conflicts.”

When used in chaotic environments, AI systems can operate unpredictably and may not accurately identify targets. (Image: MES)

“Will AI change the way militaries select targets and apply force to them? These changes, in turn, raise a range of legal, ethical, and operational questions. The biggest concern is humanitarian,” Dr. Blanchard added.

The SIPRI expert explained: “Many people worry that, depending on how automated systems are designed and used, they could put civilians and others protected by international law at greater risk of harm. This is because AI systems, especially when used in chaotic environments, can behave unpredictably: they may misidentify targets and attack civilians, or fail to recognize combatants who are hors de combat, that is, no longer taking part in the fighting.”

Elaborating on this issue, GlobalData defense analyst Wilson Jones notes that AI also calls into question how guilt, or legal responsibility, is assigned.

“Under current laws of war, there is the concept of command responsibility,” Jones said. “This means that an officer, general, or other leader is legally responsible for the actions of the troops under their command. If the troops commit war crimes, the officer is held responsible even if they did not give the order; the burden of proof rests with them to demonstrate that they did everything possible to prevent those war crimes.”

“AI systems complicate this. Is an IT technician responsible? A system designer? It’s not clear. And if it’s not clear, that creates a moral hazard, because actors may think their actions aren’t covered by existing laws,” Jones emphasized.

A U.S. soldier patrols with a robotic dog. Photo: Forbes

Arms control conventions

Several major international agreements restrict and regulate the use of certain weapons. These include the Chemical Weapons Convention, the nuclear non-proliferation treaties, and the Convention on Certain Conventional Weapons, which prohibits or restricts the use of specific weapons deemed to cause unnecessary or unjustifiable suffering to combatants or to affect civilians indiscriminately.

“Nuclear arms control required decades of international cooperation and subsequent treaties before it became enforceable,” defense analyst Wilson Jones explains. “Even then, we continued atmospheric testing until the 1990s. A major reason for the success of nuclear non-proliferation was the cooperation between the United States and the Soviet Union in a bipolar world order. That order no longer exists, and AI technology is accessible to far more countries than atomic energy ever was.”

“A binding treaty would have to bring all the parties involved to the table and get them to agree not to use a tool that enhances their military power. That is unlikely to work, because AI can improve military effectiveness at minimal financial and material cost.”

Current geopolitical perspective

Although countries at the United Nations have acknowledged the need for the responsible use of AI by the military, much work remains to be done.

Laura Petrone, principal analyst at GlobalData, told Army Technology: “Because there is no clear governing framework, these statements remain largely aspirational. It’s not surprising that some countries want to retain their sovereignty when deciding on domestic defense and national security matters, especially amid current geopolitical tensions.”

Ms. Petrone added that, while the EU's AI Act sets some requirements for AI systems, it does not cover AI systems used for military purposes.

“I think that despite this exclusion, the AI Act is an important effort to establish a long-overdue framework for AI applications, which could lead to a certain degree of harmonization of relevant standards in the future,” she commented. “This harmonization will also be crucial for AI in the military.”

Nguyen Khanh

Source: https://www.congluan.vn/moi-nguy-robot-sat-thu-ai-dang-de-doa-an-ninh-toan-cau-post304170.html