Classifying artificial intelligence systems into four risk levels

On the morning of November 21, the National Assembly heard the presentation of the draft Law on Artificial Intelligence and the report on its examination.

Presenting the report, Minister of Science and Technology Nguyen Manh Hung said the draft law was designed to create a breakthrough legal framework for artificial intelligence: a favorable legal environment that promotes innovation and enhances national competitiveness while managing risks and protecting national interests, human rights and digital sovereignty.

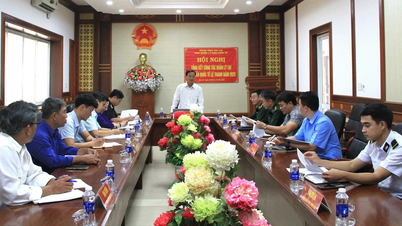

Minister of Science and Technology Nguyen Manh Hung presented the report.

To regulate artificial intelligence systems, the draft law adopts a risk-based management approach, classifying AI systems into four risk levels, each with corresponding obligations.

Artificial intelligence systems are classified into four risk levels: unacceptable risk, where the system has the potential to cause serious, irreparable harm; high risk, where the system can cause harm to life, health, rights and legitimate interests; medium risk, where the system risks confusing, manipulating or deceiving users; and low risk, which covers all remaining cases.

Suppliers must classify their systems themselves before putting them into circulation and are responsible for the classification results.

For medium and high risk systems, the supplier must notify the Ministry of Science and Technology via the One-Stop Portal. The competent authority has the right to review and re-evaluate the classification.
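The four-level scheme and the notification rule described above can be sketched as a simple decision function. This is a minimal illustration under stated assumptions, not a representation of the draft law's legal text: the boolean criteria, the function names `classify_risk` and `must_notify_mst`, and the rule that criteria are checked from most to least severe are all hypothetical simplifications. In practice, classification involves legal judgment, and the competent authority may review and re-evaluate the result.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four risk levels named in the draft Law on Artificial Intelligence."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


def classify_risk(
    serious_irreparable_harm: bool,
    harms_life_health_or_rights: bool,
    may_confuse_manipulate_or_deceive: bool,
) -> RiskLevel:
    """Hypothetical supplier self-classification.

    ASSUMPTION: the criteria are reduced to three yes/no questions and are
    checked from the most to the least severe level.
    """
    if serious_irreparable_harm:
        return RiskLevel.UNACCEPTABLE
    if harms_life_health_or_rights:
        return RiskLevel.HIGH
    if may_confuse_manipulate_or_deceive:
        return RiskLevel.MEDIUM
    # Low risk covers all remaining cases.
    return RiskLevel.LOW


def must_notify_mst(level: RiskLevel) -> bool:
    """Medium- and high-risk systems must be notified to the Ministry of
    Science and Technology via the One-Stop Portal."""
    return level in (RiskLevel.MEDIUM, RiskLevel.HIGH)


if __name__ == "__main__":
    # Hypothetical example: a system that could mislead users
    # but is not expected to harm life, health or rights.
    level = classify_risk(False, False, True)
    print(level.value, must_notify_mst(level))  # prints: medium True
```

Checking the criteria in descending order of severity means that a system meeting several criteria lands in the strictest applicable level; whether the draft intends exactly this precedence is an assumption of the sketch.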

The draft law also sets out responsibilities for transparency, labeling and accountability. Specifically, the implementer must clearly disclose and label content that is created or edited with fabricated elements, content that simulates real people (deepfakes) in ways that may cause misunderstanding, and AI-generated content used for communication and advertising purposes.

Suppliers and implementers must explain the results produced by a high-risk system when affected parties request it.

If an incident occurs, the parties involved must promptly correct, suspend or withdraw the system and report the incident via the One-Stop Portal. In terms of risk management, systems posing unacceptable risk are prohibited from being developed, supplied, deployed or used in any form.

The prohibited list covers systems used for acts prohibited by law; systems that use fabricated elements to deceive or manipulate and cause serious harm; systems that exploit the vulnerabilities of vulnerable groups (children, the elderly, etc.); and systems that create fake content seriously harming national security.

High-risk systems must undergo a conformity assessment before being put into circulation or use.

The assessment can take the form of certification of conformity (performed by an accredited body) or surveillance of conformity (self-assessment by the supplier).

The Prime Minister prescribes the list of high-risk artificial intelligence systems subject to each form of assessment.

Detailed obligations are set for the supplier (establishing risk management measures, managing training data, preparing technical documentation, ensuring human oversight) and for the implementer (operating the system for its intended purpose, ensuring safety, fulfilling transparency obligations).

Foreign suppliers with high-risk systems must have an authorized representative in Vietnam, and must establish a legal entity in Vietnam if the system is subject to mandatory conformity certification.

As for medium-risk and low-risk systems and general-purpose models, medium-risk systems must meet transparency and labeling requirements.

The draft also stipulates the responsibilities of developers and users of general-purpose artificial intelligence (GPAI) models to ensure compliance with Vietnamese law. Enhanced obligations apply to GPAI models with systemic risk (the potential for far-reaching impacts), including impact assessment, technical record keeping and notification to the Ministry of Science and Technology.

However, open source general-purpose AI models are exempt from these enhanced obligations; where an open source model is used to develop an AI system, the using organization must undertake risk management of that system.

Consider using invisible labeling to reduce costs

Presenting the examination report, Chairman of the Committee on Science, Technology and Environment Nguyen Thanh Hai said the Committee considers labeling AI products an ethical and legally binding responsibility that helps build trust in the AI era.

Chairman of the Committee on Science, Technology and Environment Nguyen Thanh Hai.

While broadly agreeing with the draft Law's labeling provisions, the Committee recommends drawing on international experience with AI-generated products to ensure the rules suit Vietnam's practical conditions.

For products and hardware devices with AI applications (such as refrigerators, TVs and washing machines), the Committee recommends studying and considering invisible watermarks, which would reduce costs and procedures while still ensuring management and traceability.

The Committee also proposes that the draft Law set out the principles under which the Government will provide detailed guidance on labeling forms, technical standards and exemptions.

Another proposal is to change the labeling requirement from mandatory to recommended, accompanied by minimum technical guidance.

In addition, a voluntary labeling mechanism should be piloted in a number of fields, together with stronger public communication to avoid the misconception that "no label means not an AI product".

Regarding risk classification, the Committee considers that if AI is treated as a product or good, the draft's four-level classification is inconsistent with the Law on Product and Goods Quality, which classifies products and goods into three types.

Likewise, the provisions on certification of conformity for high-risk artificial intelligence systems are not consistent with the principles for managing high-risk products and goods prescribed in the Law on Product and Goods Quality.

Therefore, it is recommended to continue to review and carefully compare the provisions of the draft Law with current laws.

Source: https://mst.gov.vn/de-xuat-dung-ai-de-truyen-thong-quang-cao-phai-dan-nhan-thong-bao-ro-197251125140252967.htm
