The explosion of artificial intelligence (AI) brings great benefits to humanity but also carries many risks: from racial bias and sophisticated online fraud to the danger of developing countries becoming cheap "data mines".

Given that reality, should the responsible use of AI be voluntary or mandatory? And what should Vietnam do as it builds a legal framework for this field?

On the sidelines of VinFuture Science and Technology Week, our reporter spoke with Professor Toby Walsh of the University of New South Wales (Australia), a Fellow of the Association for Computing Machinery (ACM).

The professor shares his views on how we should "behave" toward AI, from holding "machines" accountable to tips that help families protect themselves from the wave of high-tech scams.

Who is responsible for AI?

Professor, should the responsible use of AI be voluntary or mandatory? And how should we actually behave towards AI?

- I firmly believe that responsible use of AI should be mandatory. There are perverse incentives at play, with huge amounts of money being made from AI, and the only way to ensure proper behavior is to impose strict regulations so that the public interest is always balanced against commercial interests.

When AI makes a mistake, who is responsible? With AI agents in particular, are we able to correct how they operate?

- The core problem when AI makes mistakes is that we cannot hold AI accountable. AI is not human, and this exposes a gap in every legal system in the world. Only humans can be held accountable for their decisions and actions.

Suddenly we have a new kind of “agent”, AI, which, if we allow it, can make decisions and take actions in our world. That poses a challenge: who will we hold accountable?

The answer is: Companies that deploy and operate AI systems must be held responsible for the consequences these “machines” cause.

Many companies also talk about responsible AI. How can we trust them? How do we know they are serious and comprehensive, and not just using “responsible AI” as a marketing gimmick?

- We need more transparency. It is important to understand the capabilities and limitations of AI systems. We should also “vote with our actions”, that is, choose to use services that are run responsibly.

I truly believe that using AI responsibly will become a differentiator in the marketplace, giving businesses that do so a commercial advantage.

If a company respects customer data, it will benefit and attract customers. Businesses will realize that doing the right thing is not only ethical, but will also help them be more successful. I see this as a way to differentiate between businesses, and responsible businesses are the ones we can feel comfortable doing business with.

Vietnam needs to proactively protect cultural values.

Vietnam is one of the few countries considering enacting a dedicated Law on Artificial Intelligence. What is your assessment of this? In your opinion, what ethical and safety challenges do developing countries like Vietnam face in AI development?

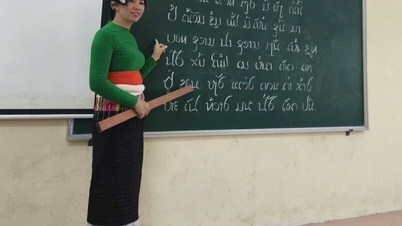

- I am very happy that Vietnam is one of the pioneering countries that will have a specialized Law on Artificial Intelligence. This is important because each country has its own values and culture, and needs laws to protect those values.

Vietnamese values and culture are different from those of Australia, China and the United States. We cannot expect technology companies from China or the United States to automatically protect Vietnamese culture and language. Vietnam must take the initiative to protect these things.

I am mindful that in the past many developing countries have gone through a period of physical colonization. If we are not careful, we could go through a period of “digital colonization”. Your data will be exploited and you will become a cheap resource.

That risk becomes real if developing countries build their AI industries in a way where data is simply extracted, without control over it and without protecting their own interests.

So how can this situation be avoided?

- It's simple. First, invest in people: upskill them and make sure they understand AI. Support entrepreneurs, AI companies and universities. Be proactive: instead of waiting for other countries to transfer technology or show us the way, we must take the initiative and master the technology.

Professor Toby Walsh speaking at VinFuture Science and Technology Week (Photo: Organizing Committee).

Second, we need to push social media platforms hard to create a safe environment for users in Vietnam, one that does not undermine the country's democracy.

In fact, there are numerous examples of how social media content has influenced election results, divided countries, and even incited terrorism.

I have spent 40 years working on artificial intelligence. For the first 30 years, I was concerned with how to make AI more powerful. In the last 10 years, I have become increasingly interested in, and vocal about, developing AI responsibly.

What advice would you give Vietnam as it drafts its Law on Artificial Intelligence?

- There are many cases where new laws are not needed. We already have privacy laws, we already have competition laws. These existing laws apply to the digital space, just as they apply to the physical world.

It is important to enforce these laws as strictly in the digital environment as we do in the physical environment.

However, some new risks are emerging, and we are starting to see them. People are forming relationships with AI and using it as a therapist, and sometimes this harms users, so we need to be careful.

We always hold manufacturers responsible for the products they release. Likewise, if AI companies release AI products that harm people, they should be held responsible.

So, Professor, how can we prevent AI from harming children and keep them safe wherever AI is present?

- I think we have not learned enough from social media. Many countries are now beginning to realize that social media has great benefits but also many drawbacks, especially its impact on the mental health, anxiety levels and body image of young people, particularly girls.

Many countries have already started to implement measures to address this issue. In Australia, the age limit for using social media will come into effect on December 10. I think we need similar measures for AI.

We are building tools that can have positive impacts but can also cause harm, and we need to protect our children's minds and hearts from these negative impacts.

I also hope that countries will issue strict regulations for social networks, creating a truly safe environment for everyone who uses AI.

In fact, adults as well as children face risks from AI. So how can people use AI to improve their lives without putting themselves in danger?

- AI is applied in many fields and helps improve our quality of life. In medicine, AI helps discover new drugs. In education, AI also brings many benefits.

However, giving access to AI without adequate safeguards is too risky. We have seen lawsuits in the US in which parents have sued tech companies, alleging that AI chatbots drove their children to suicide.

Although the share of ChatGPT users showing signs of mental health problems is only a fraction of a percent, with hundreds of millions of users the actual number runs into the hundreds of thousands.

Data is essential to AI development, but from a regulatory perspective, overly tight data management will hinder AI development. In your opinion, what dangers could loosening data management bring? How can we balance data collection and safe data management with enabling AI development?

- I absolutely agree. Data is at the heart of AI progress, because it is data that drives it. Without data, we will make no progress. High-quality data is at the core of everything we do. In fact, the commercial value and competitive advantage that companies gain come from data. Whether or not we have quality data is the key issue.

It is infuriating to see books by authors around the world, including mine, being used without permission and without compensation. It is stealing the fruits of other people's labor. If this continues, authors will lose the motivation to write books, and we will lose the cultural values that we cherish.

We need to find a more equitable solution than what we have now.

Right now, “Silicon Valley” takes content and creativity from authors without paying for it, and this is unsustainable. I liken the current situation to Napster and the early days of online music in the 2000s. Back then, online music was all “stolen”, but that couldn't last forever, because musicians needed income to keep creating.

Eventually, we got paid streaming services such as Spotify and Apple Music, as well as ad-supported music, with a portion of the revenue going back to artists. We need to do the same with books, so that authors get value from their data. Otherwise, no one will be motivated to write books, and the world will be much worse off without new books being written.

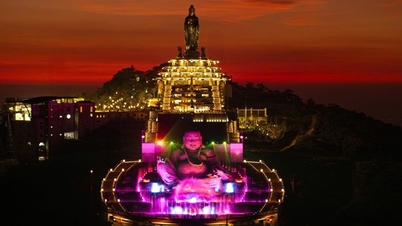

Within the framework of VinFuture Science and Technology Week, the exhibition "Toa V - Touchpoint of Science" will also take place at Vincom Royal City Contemporary Art Center (Photo: Organizing Committee).

AI is developing rapidly in Vietnam. Recently, Vietnam has introduced many policies to promote AI, but it is also facing a problem: AI-enabled fraud. How should Vietnam handle this situation, Professor? And what is your advice for people using AI today to stay safe and protect their data?

- For individuals, I think the simplest approach is to verify information. For example, when we receive a phone call or email that claims to be from a bank, we need to double-check: we can call the number back or contact the bank directly to verify the information.

There are a lot of fake emails, fake phone numbers, even fake Zoom calls these days. These scams are easy, cheap and quick to carry out.

In my family, we also have our own security measure: a “secret question” that only family members know, such as the name of our pet rabbit. This ensures that important information stays within the family and is not leaked out.

Vietnam has also introduced AI into general education. What should be kept in mind in that process?

- AI changes what is taught and how it is taught. It affects important skills of the future, such as critical thinking, communication skills, social intelligence and emotional intelligence. These skills will become essential.

AI can help teach these skills, for example by acting as a personal tutor or a personal revision assistant, and AI applications can provide very effective educational tools.

Thank you, Professor, for taking the time to talk with us!

Source: https://dantri.com.vn/cong-nghe/viet-nam-can-lam-gi-de-tranh-nguy-co-tro-thanh-nguon-luc-re-tien-20251203093314154.htm
