Dr. Le Duy Tan, lecturer at the Faculty of Information Technology, International University, Vietnam National University Ho Chi Minh City, and co-founder of AIoT Lab VN, said that the use of AI by students to support their studies, assignments, reports, and theses is a common trend worldwide.

According to a 2024 global survey by the Digital Education Council, approximately 86% of students reported using AI tools in their studies; of those, about 54% use them weekly. A June 2025 survey by Save My Exams also showed that 75% of students use AI for homework, with 24% using it daily and 44% using it weekly.

The use of AI by students to support their learning and research is becoming increasingly common.

PHOTO: NGOC LONG

Alongside the positive aspects, it is inevitable that some students will misuse AI tools.

Using AI to conceal AI

According to Dr. Le Duy Tan, some students use AI for assignments, reports, and theses without fully understanding how the tool works and its limitations. This causes them to gradually lose their critical thinking abilities, writing skills, and independent research skills.

Speaking at an online program hosted by Thanh Nien Newspaper, Dr. Dinh Ngoc Thanh, Technical Director at OpenEdu, stated that many students use tools like ChatGPT to solve homework problems instead of studying independently, leading to an "easier" learning experience. However, this is a dangerous approach that goes against educational principles, as the goal of education is not just to complete assignments but to cultivate critical thinking and problem-solving skills.

Mr. Pham Tan Anh Vu, Head of the Southern Region Representative Office of Vietnam Artificial Intelligence Solutions Company (VAIS), stated that from 2022 to 2024, AI-generated texts often bore easily recognizable "digital fingerprints." The writing style was uniform and lacking in emotion, repeated familiar structures like "not only… but also…", relied on formulaic transitional phrases like "in addition" and "moreover," and always ended with a forced "in short." Impeccably clean content, perfect spelling, and a tendency to list items using bullet points were all indicators of AI's influence.

A more serious weakness lies in the content generated by AI. This is the phenomenon of "hallucination," where AI fabricates information, data, or even sources that don't exist. "Many articles generated by AI are like a compilation of different code snippets, resulting in inconsistent writing style and illogical paragraphs," Mr. Vu observed.

In 2025, students have become increasingly sophisticated at getting past AI-powered plagiarism detection tools. A new technology industry has emerged: AI text "humanization" tools.

Students have developed a workflow: drafting with ChatGPT, rephrasing with Quillbot, and finally using "humanization" tools like Undetectable AI to erase all traces. Some students even intentionally add small errors to make the text look more "natural."

"At this point, telling an essay that uses AI apart from one written entirely by a student is probably impossible, because people have been using many sophisticated tricks to deceive both machines and teachers during grading," Mr. Vu asserted.

Along similar lines, Dr. Le Duy Tan argues that articles that are "too clean," free of spelling errors, lacking personal experience or evidence, and written in a monotonous style are highly suspicious.

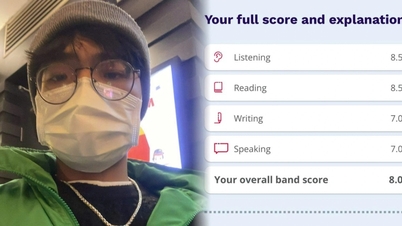

It is very difficult for instructors to accurately assess students' true abilities when learners use AI for assignments, tests, research, etc.

Photo: TN created using AI

The gap between "virtual competence" and real skills

According to experts, students' misuse of AI is not just cheating; it's undermining the very foundation of education.

Mr. Pham Tan Anh Vu expressed his biggest concern: the risk of AI diminishing students' independent and critical thinking abilities. Once they become accustomed to solving problems by receiving immediate answers from AI, they will gradually lose the patience to read and understand original documents or synthesize information on their own.

"Associate Professor Dr. Nguyen Chi Thanh, Head of the Faculty of Education at the University of Education, Vietnam National University, Hanoi, once warned of the risk of users becoming 'digital slaves,' a state of dependence that stifles the three strongest human abilities: problem-solving, creativity, and self-learning," Mr. Vu said.

More dangerously, AI can create an "illusion of competence." A literature teacher in Ho Chi Minh City shared that she once discovered many students using the same AI tool to complete assignments, but when questioned about the content, they couldn't explain what they had written. This shows that the widening gap between perceived competence and real-world knowledge will be a "ticking time bomb," poised to explode when students graduate and enter the labor market.

"The overuse of AI also renders traditional assessment methods ineffective. Homework assignments such as essays and group reports, which were designed to measure research and reasoning abilities, suddenly become meaningless, making it very difficult for instructors to accurately assess students' true capabilities," this teacher emphasized.

Therefore, Dr. Le Duy Tan believes that the most important solution is to redesign the assessment method. Assignments should require students to start from a personal outline, document their work process, analyze their experiences, and present how they found and verified information, or should include a direct question-and-answer session. Research shows that assessments focused on analysis, evaluation, and creativity, rather than just summarizing, help reduce the reliance on AI for the entire process.

"To assess whether a student is serious about their studies or using AI effectively, teachers must change their teaching methods by introducing the topic to students through three key elements: knowing clearly, grasping firmly, and understanding deeply. Only then can they truly develop the students' capabilities when applying AI tools," Mr. Vu said.

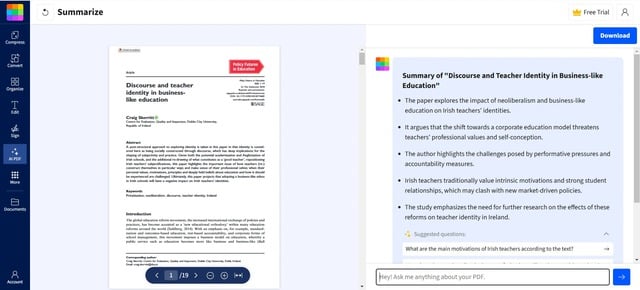

AI tools help students create research reviews, summarize scientific papers, and more.

Photo: Screenshot

The line between assistance and fraud

In the context of AI becoming an essential tool, the question of "should students be allowed to use AI?" is no longer relevant. What is more important is defining the boundaries of AI use in academia.

According to Mr. Pham Tan Anh Vu, that boundary lies in purpose, method, and attitude. AI is only a reasonable tool when used to generate ideas, assist in summarizing documents, check for errors, or explain complex terminology. Students need to be "slow readers," re-evaluate information, and take ultimate responsibility for the content. Conversely, if students copy verbatim all or most of the content generated by AI and submit it as their own work, that constitutes academic fraud.

Mr. Vu also argued that the ability to use AI should be recognized as an essential competency for students; rather than suppressing it, universities should acknowledge AI and integrate its use into the training program.

Regarding solutions, Dr. Le Duy Tan suggested that universities and lecturers need to develop and clearly announce policies on the use of AI in courses, assignments, and theses. For example, an assignment should clearly state: "Students are/are not allowed to use AI tools; if used, students must specify the tools and indicate which parts were produced by AI and which by the student." Students should also be trained in the responsible, ethical, and effective use of AI: rather than simply being told "not allowed," they should be guided on how to use AI to assist in checking results, verifying data, analyzing, and further developing AI-suggested ideas.

A common legal framework at the national level is needed

According to Mr. Pham Tan Anh Vu, the pioneering efforts of universities are very important, but to create synchronized change, we need a common code of conduct and legal framework at the national level. We cannot allow a situation where an act of AI abuse might be considered cheating and result in a zero grade at one university, but be accepted at another.

Vietnam needs to build a clear legal framework based on core principles: Adherence to law and ethics; Fairness and non-discrimination; Transparency and accountability (who is responsible when AI makes mistakes); and a human-centered approach (humans always retain ultimate control).

Source: https://thanhnien.vn/sinh-vien-dung-thu-thuat-de-che-dau-ai-185251211185713308.htm

![[Photo] Closing Ceremony of the 10th Session of the 15th National Assembly](/_next/image?url=https%3A%2F%2Fvphoto.vietnam.vn%2Fthumb%2F1200x675%2Fvietnam%2Fresource%2FIMAGE%2F2025%2F12%2F11%2F1765448959967_image-1437-jpg.webp&w=3840&q=75)

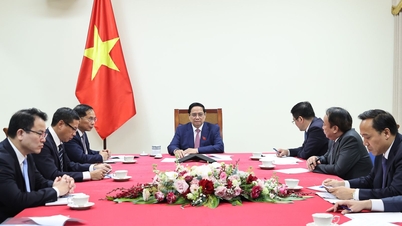

![[Photo] Prime Minister Pham Minh Chinh holds a phone call with the CEO of Russia's Rosatom Corporation.](/_next/image?url=https%3A%2F%2Fvphoto.vietnam.vn%2Fthumb%2F1200x675%2Fvietnam%2Fresource%2FIMAGE%2F2025%2F12%2F11%2F1765464552365_dsc-5295-jpg.webp&w=3840&q=75)

![[OFFICIAL] MISA GROUP ANNOUNCES ITS PIONEERING BRAND POSITIONING IN BUILDING AGENTIC AI FOR BUSINESSES, HOUSEHOLDS, AND THE GOVERNMENT](https://vphoto.vietnam.vn/thumb/402x226/vietnam/resource/IMAGE/2025/12/11/1765444754256_agentic-ai_postfb-scaled.png)

Comment (0)