Anthropic has just updated its policy. Photo: GK Images.

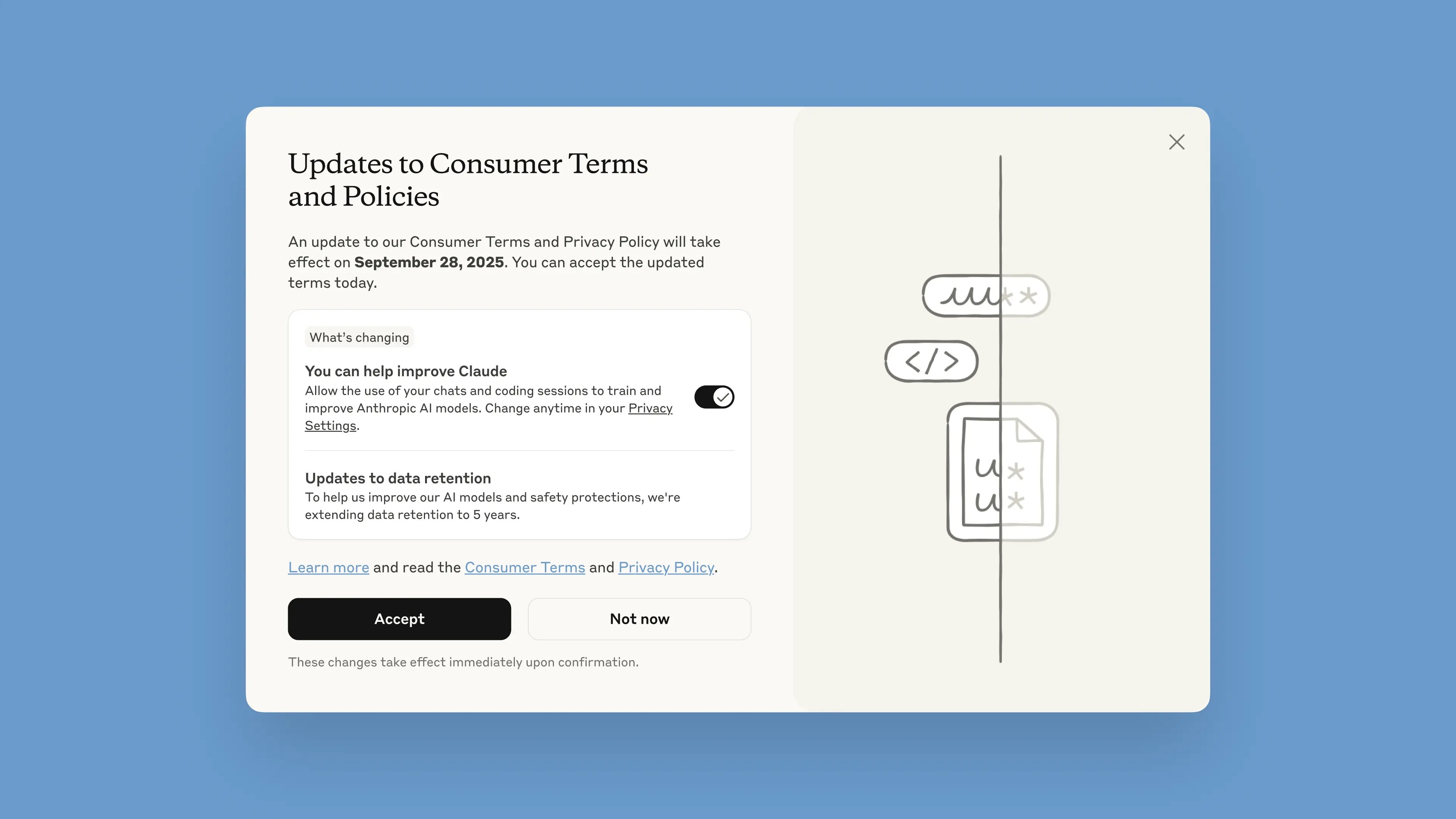

On August 28, AI company Anthropic announced an update to its user Terms of Service and Privacy Policy. Users can now choose whether to allow their data to be used to improve Claude and to strengthen protections against abuse such as scams and fraud.

The notice is being rolled out in the chatbot starting August 28. Users have one month, until September 28, to accept the terms and decide whether to share their data. The new policies take effect immediately upon acceptance. After September 28, users will be required to make a selection to continue using Claude.

According to the company, the updates are intended to help deliver more powerful and useful AI models. Adjusting this option is simple and can be done at any time in Privacy Settings.

These changes apply to the Claude Free, Pro, and Max plans, including use of Claude Code, Anthropic's programming tool, under those plans. They do not apply to services covered by the Commercial Terms, including Claude for Work, Claude Gov, Claude for Education, or API use, including through third parties such as Amazon Bedrock and Google Cloud's Vertex AI.

By participating, users will help improve the model's safety, increasing its ability to accurately detect harmful content and reducing the risk of mistakenly flagging harmless conversations, Anthropic said in its blog post. The data will also help future versions of Claude improve at skills such as programming, analysis, and reasoning.

Users retain full control over their data. Existing users choose whether to allow the platform to use it through a pop-up window, and new accounts can set this preference during the registration process.

New Terms pop-up window in the chatbot. Photo: Anthropic.

For users who opt in, the data retention period is extended to five years. This applies to new or continued conversations and coding sessions, as well as feedback on chatbot responses. Deleted data will not be used to train the model in the future. Users who do not opt in to providing data for training will remain on the current 30-day retention policy.

Explaining the policy, Anthropic said that AI development cycles typically take years, and keeping data consistent throughout the training process helps models stay consistent as well. Longer data retention also improves classifiers, the systems used to identify abusive behavior, making them better at detecting harmful patterns.

“To protect user privacy, we use a combination of automated tools and processes to filter or mask sensitive data. We do not sell user data to third parties,” the company wrote.

Anthropic is often cited as one of the leaders in safe AI. The company has developed an approach called Constitutional AI, which sets out ethical principles and guidelines that models must follow. It is also one of the companies that has signed the Safe AI Commitments with the US, UK and G7 governments.

Source: https://znews.vn/cong-ty-ai-ra-han-cuoi-cho-nguoi-dung-post1580997.html
